The Alibaba Qwen team has recently released the Qwen3 series, including two standout models: the 235B-parameter MoE model (with 22B active parameters) and a lightweight 30B version (3B active).

The Qwen3-235B-A22B model competes with giants like DeepSeek R1, Grok-3, and Gemini 2.5 Pro, but it does so with fewer active parameters, faster inference, and open-source accessibility under the Apache 2.0 license. Apparently, every lab suffers from the same affliction: an uncanny talent for outrageously long model names.

There is also a 30B model: Qwen3-30B-A3B outcompetes the previous QwQ-32B, which has 10 times as many activated parameters, and even a small model like Qwen3-4B can rival the performance of Qwen2.5-72B-Instruct.

This is such a good time for open-source model enthusiasts.

Best of all, it costs a fraction of what SOTA models charge per million input and output tokens.

Let’s look at some of the key details of the new Qwen 3 and then put it to the test!

TL;DR

Qwen 3 consistently produced better, more functional, and more user-friendly code.

Both Qwen 3 and DeepSeek R1 correctly solved the logic puzzles, but Qwen 3 produced better-structured output.

While both performed well on simpler math, DeepSeek R1 more accurately solved a complex multi-step calculation problem that Qwen 3 narrowly missed, so results varied.

Qwen 3 excelled at researching, summarizing and structuring text.

Overall, Qwen3 is a highly capable, efficient open-source choice, strong in coding/writing, while DeepSeek R1 holds an edge in complex math and reasoning speed.

Qwen3 Key Details

Here is the essential information about Qwen 3 you need to know:

| Feature | Highlights |
|---|---|
| Motto | Think Deeper, Act Faster |
| Variants | MoE models: Qwen3-235B-A22B (22B active) • Qwen3-30B-A3B (3B active) • Dense models: Qwen3-32B, 14B, 8B, 4B, 1.7B, and 0.6B |
| Architecture | Mixture-of-Experts for the two flagship models (≈10% of parameters active per forward pass → big inference savings) • standard dense transformer for the smaller models |
| Benchmarks where it’s on top | HumanEval (code), MATH, GSM8K, Big-Bench Hard, BoolQ, ARC-Challenge |
| “Hybrid Thinking” mode | Toggle between instant answers and step-by-step reasoning for tasks that need more chain-of-thought |
| Language coverage | 119 languages and dialects (a huge leap for multilingual apps) |
| MCP (Model Context Protocol) | Strengthened MCP support for agentic and tool-use workflows |
| Pre-training corpus size | 36 trillion tokens, about 2× Qwen2.5 |
| License | Fully open-weight under Apache 2.0 (commercial-friendly) |
| Local deployment | Everything except the 235B giant is practical on beefy local hardware or multi-GPU rigs; official guides name Ollama, LM Studio, llama.cpp, etc. |
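To make the “≈10% active” idea concrete (22B of 235B is about 9.4%, and 3B of 30B is 10%), here is a toy sketch of top-k expert routing in plain Python. It is an illustration under simplifying assumptions, not Qwen3’s actual implementation. Each “expert” is just a scalar function. The gate logits are passed in directly rather than produced by a learned router. The point to notice is that only the k selected experts are ever evaluated.

```python
import math
from typing import Callable, Sequence

# Toy assumption: an "expert" maps a scalar to a scalar. Real MoE layers use
# feed-forward networks over vectors and a learned gating (router) layer.
Expert = Callable[[float], float]


def moe_forward(
    x: float,
    experts: Sequence[Expert],
    gate_logits: Sequence[float],
    k: int,
) -> tuple[float, list[int]]:
    """Route x to the k highest-scoring experts and mix their outputs."""
    # Indices of the top-k gate logits; every other expert is skipped entirely.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only (renormalised top-k gating).
    m = max(gate_logits[i] for i in top)
    weights = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(weights)
    # Only the selected experts are called: this is where the compute savings come from.
    y = sum((w / total) * experts[i](x) for w, i in zip(weights, top))
    return y, top


if __name__ == "__main__":
    # 8 hypothetical experts; expert i multiplies its input by i.
    experts = [lambda v, s=s: s * v for s in range(8)]
    logits = [0.1, 0.2, 0.0, 2.0, 0.3, 2.0, 0.1, 0.0]
    y, used = moe_forward(1.0, experts, logits, k=2)
    print(used, y)  # [3, 5] 4.0
```

In a real MoE transformer, this selection happens per token in every MoE layer. That is why total parameter count and per-token compute diverge so sharply.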

Additionally, according to Qwen’s own benchmarks, Qwen3-235B-A22B outperformed others on tasks like:

Coding – beat models like DeepSeek R1, Gemini 2.5 Pro, OpenAI o3-mini, and o1 (though I am highly doubtful of this claim)

Mathematics – surpassed all major competitors in problem-solving and step-by-step accuracy

General Reasoning – outcompeted top-tier models in logical and multi-step reasoning tasks

Reading Comprehension – excelled at integrating ideas across multiple sections and drawing inferences

Logical Deduction (Puzzles) – solved complex deduction tasks better than many others

Meanwhile, Qwen3-30B-A3B performed competitively on tasks like:

Coding & Math – matched or exceeded models like GPT-4o, Gemma 3, and DeepSeek-V3

Efficiency Benchmarks – achieved strong performance with only 3B active params.

General Use Benchmarks – handled various tasks comparably to larger models.

With so many impressive benchmark results and claims, it is easy to get lost.

Instead, let’s evaluate the model’s performance on real-world use cases across different domains and compare the results against SOTA models like DeepSeek R1.

So, let’s begin!

CODING

The first domain we will test is coding. Nothing fancy here; let’s get straight to it.

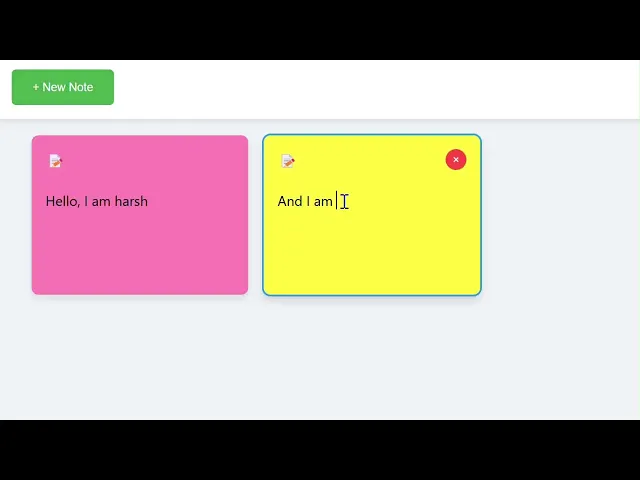

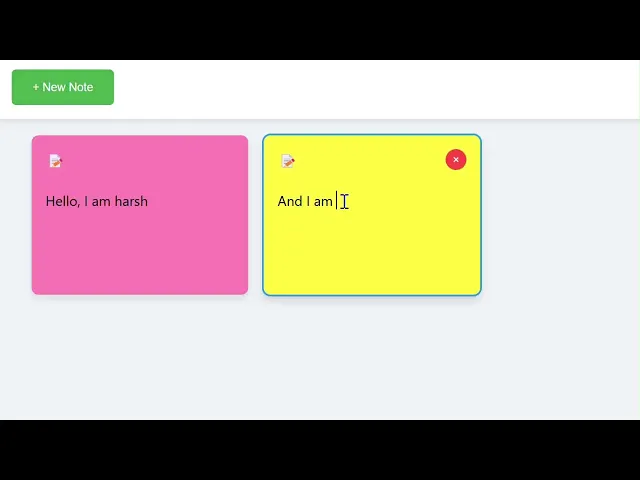

Functional Sticky Note App

(Skill Tested: Front-end UI development, DOM manipulation, interactivity)

Sticky notes are a perfect productivity tool, and I use them quite often, so let’s see how both models perform when generating a functional sticky-note web app.

Prompt: Create a front-end for a modern note-taking app. Make it so that you can add sticky notes. Add snip/notes drag functionality, too.

The only extra requirement on top of a basic app is drag-and-drop. Let’s check the results.

Qwen 3

Code: note_taker_qwen.html

Output (from the artefacts window)

DeepSeek R1

Code: note_taker_deepseek.html

Output

Both models did a fine job, but Qwen3’s output was faster, more consistent, and more user-friendly.

So, for simple tasks I would go with Qwen 3, as it understands nuances and requirements well.

Now on to the next test.

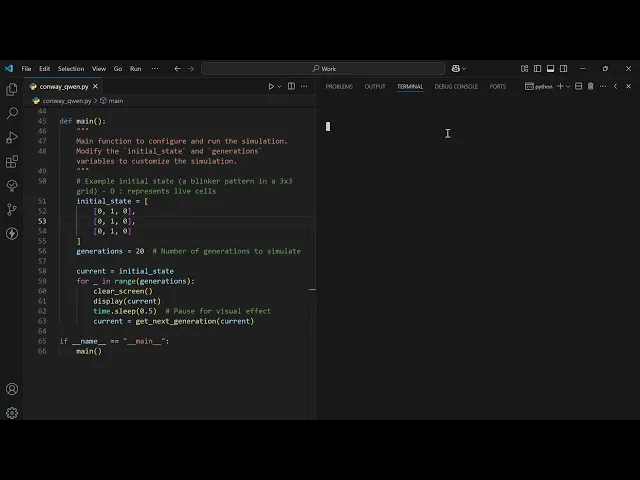

Conway’s Game of Life (Code)

(Skill Tested: Matrix logic, algorithm implementation, terminal rendering)

Conway’s Game of Life is a cellular automaton governed by the following rules:

Any live cell with fewer than two live neighbours dies (underpopulation).

Any live cell with more than three live neighbours dies (overpopulation).

Any live cell with two or three live neighbours continues to live.

Any dead cell with exactly three live neighbours comes to life.

How the game works:

The starting layout is called the seed of the system.

The first generation is created by simultaneously applying the rules to every cell in the seed, whether it is alive or dead.

All changes—cells being born or dying—happen at once in a step called a tick.

Each new generation depends only on the one before it.

The same rules are then applied repeatedly, one generation after another, until the specified number of generations is reached.
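For reference, the rules above fit in a few lines of Python. This is not either model’s output. The minimal sketch below assumes an unbounded grid stored as a set of live (row, col) coordinates. A full terminal program like the one the prompt asks for would add input parsing and a display loop around it. Counting neighbours for all cells before changing any of them is what makes each tick simultaneous.

```python
from collections import Counter

Cell = tuple[int, int]  # (row, col) of a live cell on an unbounded grid


def tick(live: set[Cell]) -> set[Cell]:
    """Apply Conway's rules to every cell simultaneously and return the next generation."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth: exactly 3 neighbours. Survival: a live cell with 2 or 3 neighbours.
    # Every other cell (including live cells with 0 neighbours) ends up dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def run(seed: set[Cell], generations: int) -> set[Cell]:
    """Apply the given number of ticks to the seed."""
    live = set(seed)
    for _ in range(generations):
        live = tick(live)
    return live


def render(live: set[Cell], rows: int, cols: int) -> str:
    """Draw a rows x cols window of the grid: '#' for live, '.' for dead."""
    return "\n".join(
        "".join("#" if (r, c) in live else "." for c in range(cols)) for r in range(rows)
    )


if __name__ == "__main__":
    blinker = {(0, 1), (1, 1), (2, 1)}  # vertical bar of three cells
    for gen in range(3):
        print(f"Generation {gen}:\n{render(run(blinker, gen), 3, 3)}\n")
```

The blinker flips between a vertical and a horizontal bar on every tick, which makes it a handy first seed for checking any implementation.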

Given the state management and rule-based decisions involved, this seemed like a good question for testing each model’s coding and decision-making ability.

Prompt: Create a Python implementation of Conway’s Game of Life that runs in the terminal. The program should accept an initial state, run for a specified number of generations, and display each generation in the terminal.

The prompt makes no specific mention of testing the code. Let’s check the results.

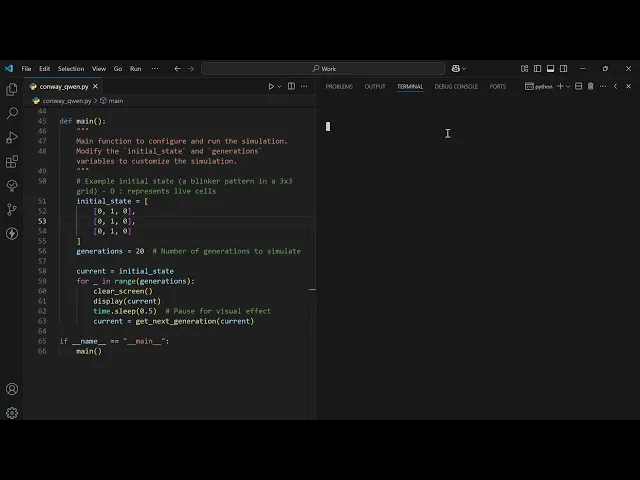

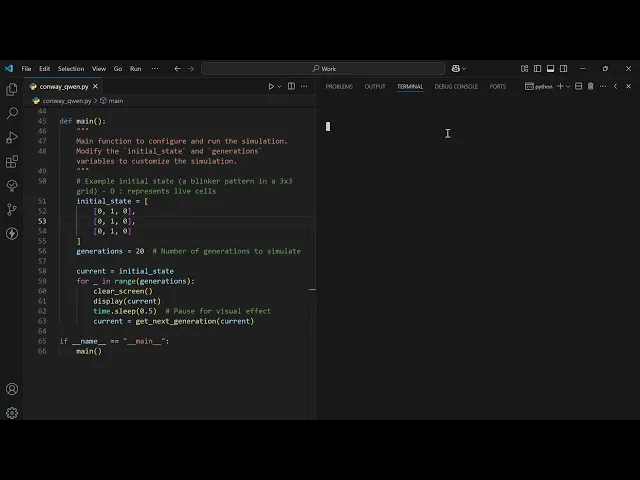

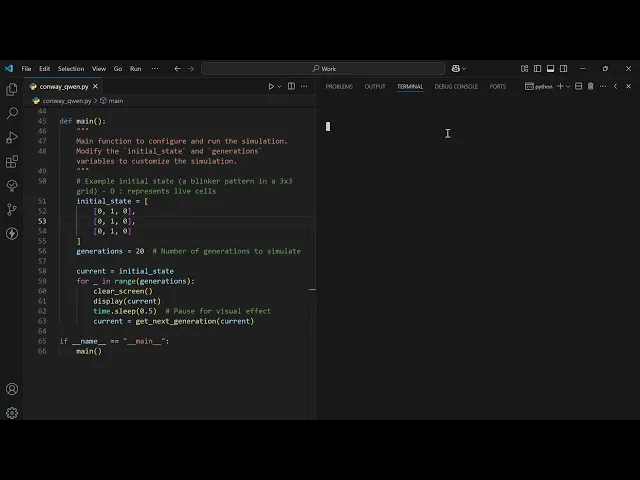

Qwen3

Code: game_of_live_qwen.py

Output

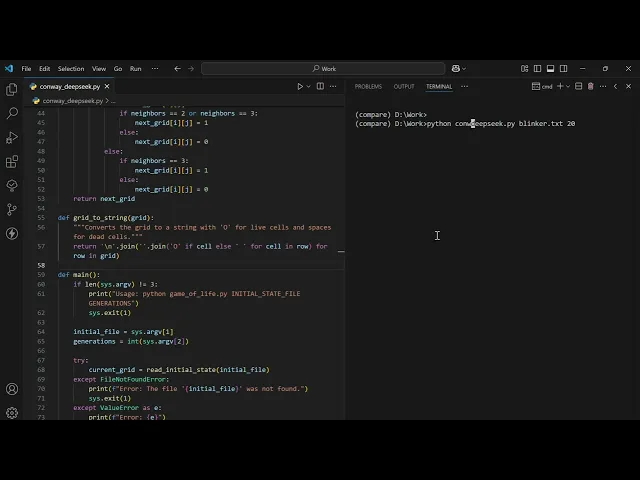

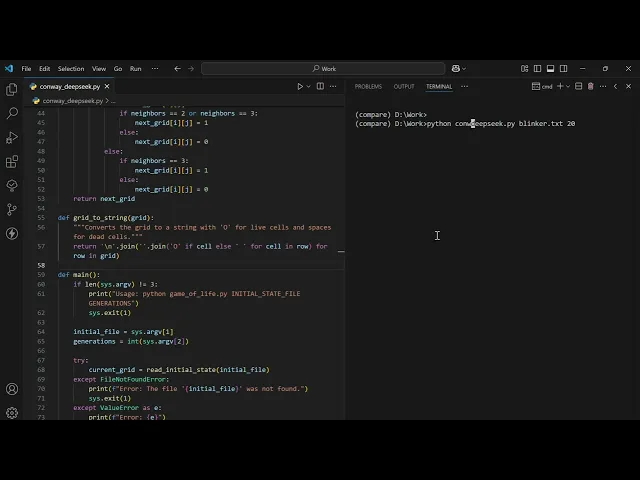

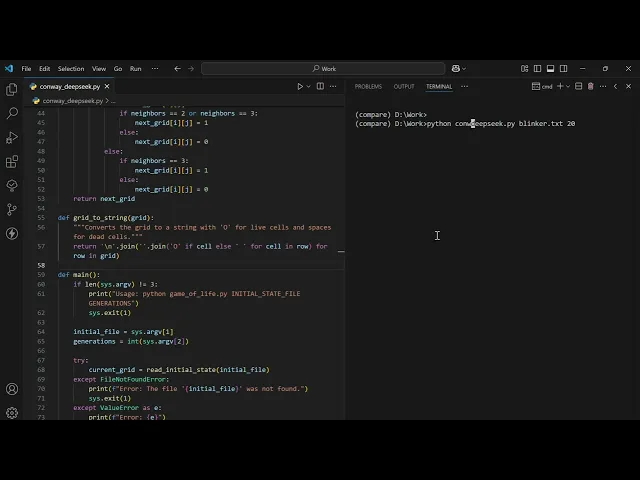

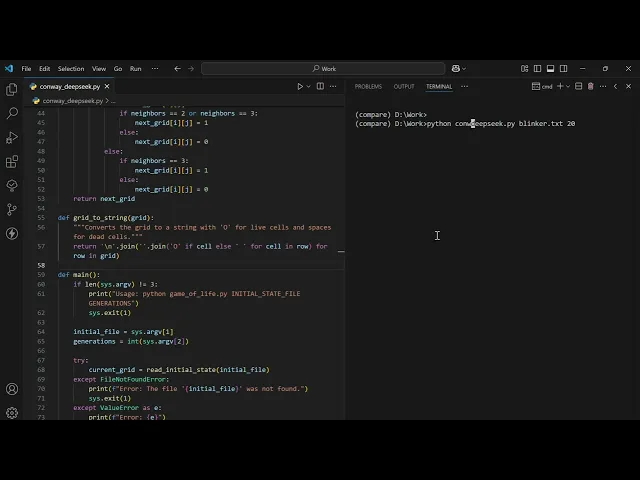

DeepSeek R1

Code: game_of_life_deepseek.py, test file: blinker.txt, terminal command: terminal_command.bash

Output

What I liked straight away about Qwen3 is that it provided a sample test case, making the code easier to test. It also kept the implementation simple, just a single script, which is good for prototyping.

This was not the case with DeepSeek R1. It provided two files, a main script and a test file, and only after I asked how to run it (playing the role of a vibe coder). Its implementation also differed from Qwen3’s, and it had performance issues.
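The prompt only says the program “should accept an initial state”, so the two models were free to pick different input formats. One simple option is a plain-text seed parsed into a set of live coordinates. The sketch below assumes a hypothetical format in which '#' marks a live cell and any other character is a dead one. This is not necessarily the format blinker.txt uses.

```python
Cell = tuple[int, int]  # (row, col) of a live cell


def parse_seed(text: str, live_char: str = "#") -> set[Cell]:
    """Parse a plain-text grid into the set of live (row, col) coordinates.

    Hypothetical format: one grid row per line; `live_char` marks a live cell,
    and any other character is treated as dead.
    """
    return {
        (r, c)
        for r, line in enumerate(text.splitlines())
        for c, ch in enumerate(line)
        if ch == live_char
    }


def load_seed(path: str) -> set[Cell]:
    """Read a seed file from disk and parse it with parse_seed."""
    with open(path, encoding="utf-8") as f:
        return parse_seed(f.read())


if __name__ == "__main__":
    print(sorted(parse_seed(".#.\n.#.\n.#.")))  # [(0, 1), (1, 1), (2, 1)]
```

Returning a set of coordinates keeps the parser independent of grid size, so the same seed works whether the simulation wraps, clips, or grows without bound.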

Again, I would turn to Qwen3 for coding tasks, as its code was faster, more reliable, more accurate, and better optimised.

Butterfly SVG Generation

(Skill Tested: SVG generation, geometric reasoning, visual symmetry)

SVG stands for Scalable Vector Graphics and is widely used in graphic design and web development. So, let’s generate a butterfly of our own.

Prompt

Generate SVG code for a simple butterfly shape. The butterfly should have symmetrical wings and basic body parts (head, thorax, abdomen). Use basic SVG shapes and keep the design relatively recognisable but straightforward.
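For comparison (this is not either model’s output), one way to guarantee symmetry rather than approximate it is to draw only the right-hand wings. The same markup is then reflected about the body’s vertical axis with an SVG transform. The Python sketch below builds such markup. The shapes, sizes, and colours are arbitrary choices for illustration.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def butterfly_svg(size: int = 200) -> str:
    """Return SVG markup for a simple butterfly whose wings are exact mirror images."""
    c = size // 2  # x coordinate of the vertical axis of symmetry
    # Draw the right-hand wings only; the left-hand pair is produced by reflection.
    right_wings = (
        f'<ellipse cx="{c + 35}" cy="{c - 25}" rx="35" ry="25" fill="orange"/>'  # upper wing
        f'<ellipse cx="{c + 28}" cy="{c + 25}" rx="25" ry="20" fill="gold"/>'  # lower wing
    )
    # Reflect about x = c: scale(-1 1) maps x to -x, then translate shifts it to 2c - x.
    mirror = f"translate({2 * c} 0) scale(-1 1)"
    body = (
        f'<circle cx="{c}" cy="{c - 45}" r="8" fill="black"/>'  # head
        f'<ellipse cx="{c}" cy="{c - 20}" rx="7" ry="16" fill="black"/>'  # thorax
        f'<ellipse cx="{c}" cy="{c + 25}" rx="5" ry="28" fill="black"/>'  # abdomen
    )
    return (
        f'<svg xmlns="{SVG_NS}" width="{size}" height="{size}" viewBox="0 0 {size} {size}">'
        f"<g>{right_wings}</g>"
        f'<g transform="{mirror}">{right_wings}</g>'
        f"{body}</svg>"
    )


if __name__ == "__main__":
    svg = butterfly_svg()
    ET.fromstring(svg)  # raises ParseError if the markup is not well-formed
    print(svg)
```

Because the left wings are the same elements as the right wings under a reflection, any later tweak to a wing’s shape or colour stays symmetrical automatically.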

The prompt explicitly asks for symmetrical wings and specific body parts to test visual symmetry. Let’s check the results.

Qwen 3

Code: svg_butterfly_qwen3.xml

Output

DeepSeek R1

Code: svg_butterfly_deepseek.xml

Output

Both models produced symmetrical results with the requested body parts.

However, Qwen3 produced better output than DeepSeek R1 and followed the instructions more closely, as is visible in the code.

The DeepSeek R1 output looks more cartoonish, and the code shows that the model skipped some of the instructions.

Honestly, I never expected Qwen3 to produce better output than Grok 3, but it beat it convincingly. Proof (Grok 3’s result, even worse than DeepSeek R1’s) 👇

This marks the end of the coding and graphical ability tests, and Qwen3 took the lead.

Now let’s test the models on reasoning, where DeepSeek R1 has truly shone!

REASONING

One of the top reasons for DeepSeek R1’s success was its reasoning ability; I even wrote a blog post analysing its chain-of-thought (CoT) reasoning.

Let’s see if Qwen3 can beat DeepSeek R1 at its own game.

Find The Guilty Person

(Skill Tested: Deductive logic, truth-table consistency)

Many open-source models failed to answer this puzzle correctly in past tests. Cracking it requires strong deductive reasoning, which makes it ideal for this test.

Prompt

A detective investigates a case involving five suspects: Alice, Ben, Carla, David, and Eva. Only one of them is guilty. The detective receives the following statements: Alice says: “Ben is guilty.” Ben says, “Carla is lying.” Carla says, “Eva is guilty.” David says: “I didn’t do it.” Eva says, “Carla is telling the truth.” Exactly one person is telling the truth. Who is guilty, and how do you know?

Ans: David
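The “exactly one truth-teller” constraint makes the answer easy to verify mechanically. Assume each suspect in turn is guilty, evaluate every statement, and keep only the scenarios where exactly one statement is true. The minimal brute-force sketch below assumes that “lying” and “telling the truth” refer to whether Carla’s own statement is true.

```python
SUSPECTS = ["Alice", "Ben", "Carla", "David", "Eva"]


def statements(guilty: str) -> dict[str, bool]:
    """Truth value of each suspect's statement, given who is guilty."""
    carla_true = guilty == "Eva"  # Carla: "Eva is guilty."
    return {
        "Alice": guilty == "Ben",  # "Ben is guilty."
        "Ben": not carla_true,  # "Carla is lying."
        "Carla": carla_true,
        "David": guilty != "David",  # "I didn't do it."
        "Eva": carla_true,  # "Carla is telling the truth."
    }


def solve() -> list[tuple[str, list[str]]]:
    """Return (guilty, truth_tellers) for every scenario with exactly one truth-teller."""
    results = []
    for guilty in SUSPECTS:
        truthful = [name for name, is_true in statements(guilty).items() if is_true]
        if len(truthful) == 1:
            results.append((guilty, truthful))
    return results


if __name__ == "__main__":
    print(solve())  # [('David', ['Ben'])]
```

Only one of the five scenarios survives, which confirms that the answer is unique as well as correct.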

For fairness, only the question was provided. Let’s check the results.

Qwen 3

Conclusion: The only consistent scenario is when Ben is the only truth-teller, and David is the guilty person. This satisfies both conditions: Only one person is telling the truth (Ben). Only one person is guilty (David). All other statements are false, consistent with their being lies. Final Answer: David

DeepSeek R1

Why Other Suspects Don’t Fit: If Alice, Ben, Carla, or Eva were guilty, it would lead to more than one truthful statement, violating the rule. Only David's guilt results in exactly one truthful person (Ben) while keeping all others false. Final Answer: David is guilty, and Ben is the only one telling the truth

Both models followed the same truth-table consistency checks to arrive at the correct answer.

However, DeepSeek R1 was faster, taking around 80 seconds compared to Qwen3’s 120+ seconds, although Qwen3 generated a more structured answer that followed a four-step deduction process.

Personally, I would go for DeepSeek R1 here, as I want a quick solution.

Now let’s move on to the next test.

Museum Field Trip Planning

(Skill Tested: Constraint satisfaction, arithmetic reasoning, real-world planning)

I often go on field trips with my friends, which involves a lot of real-world planning: booking a bus, booking a hotel, managing money, and so on.

This reasoning task draws on the same kind of real-world planning, which makes it ideal for testing the models.

Prompt:

A group of students plan to go on a field trip to a museum. They need to decide how many buses to rent and how to divide the students among the buses. Each bus can hold up to 40 students, but the museum only accommodates 120 students. The group has a budget of $800 for the bus rental, and each bus costs $200 per day. How many buses should the group rent, and how many students should go on each bus, and what is the cost associated with the day? Explain your reasoning.

Ans: 3 buses, 40 students each, $200 per bus
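The arithmetic behind the expected answer is short enough to check in code. This sketch assumes the group sends the museum’s maximum of 120 students and rents the buses for a single day. The prompt’s “cost associated with the day” can be read either as the cost per bus or as the total, so the sketch computes both.

```python
import math


def plan_trip(students: int, capacity: int, cost_per_bus: int, budget: int) -> dict[str, int]:
    """Minimum buses for the group, the load per bus, and the one-day rental cost."""
    buses = math.ceil(students / capacity)  # round up: a partly filled bus still has to be rented
    total_cost = buses * cost_per_bus
    if total_cost > budget:
        raise ValueError(f"{buses} buses cost ${total_cost}, over the ${budget} budget")
    return {
        "buses": buses,
        "students_per_bus": math.ceil(students / buses),  # largest load in an even split
        "cost_per_bus": cost_per_bus,
        "total_cost": total_cost,
    }


if __name__ == "__main__":
    # 120 students (the museum's limit), 40 seats per bus, $200 per bus per day, $800 budget.
    print(plan_trip(students=120, capacity=40, cost_per_bus=200, budget=800))
```

With the prompt’s numbers this gives 3 buses carrying 40 students each, at $200 per bus and $600 in total, comfortably under the $800 budget.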

Again, for fairness, only the question was provided. Let’s check the results.

Qwen 3

Final Answer: The group should rent 3 buses with 40 students on each bus.

DeepSeek R1

The group should rent 3 buses, and each bus should carry 40 students.

On the first try, both models omitted the last requirement: the cost associated with the day. On the second try, they gave the total cost instead of the cost per day, possibly because the prompt was ambiguous.

Again, DeepSeek R1 was faster (95 seconds vs. Qwen3’s 105 seconds), but Qwen3 took the lead on output structure.

In essence, reasoning tasks come down to preference. Both models performed equally well and stumbled on the same part of the prompt.

If you prefer speed, go for DeepSeek R1; if output quality matters more, Qwen 3 is the better pick.

This marks the end of the reasoning test, with a tie. Now let’s move on to the next test: math.

MATHS

The official Qwen3 documents state that its flagship model beats other models on many math-related benchmarks, such as MATH, GSM8K, and more.

So, let’s test its mathematical ability and see whether it lives up to those claims.

Train Timing Problem

(Skill Tested: Multi-step calculations, algebra, word problem reasoning)

This problem involves relative motion (distance = speed × time) and needs to be solved through a multi-step calculation.

Prompt

A train leaves City A at 9:00 AM, travelling at 60 km/h. Another train leaves City B at 11:00 AM, travelling toward City A at 90 km/h. The distance between City A and City B is 450 km. At what time do the two trains meet? Show all steps clearly.
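The solution path both models follow below (head start, remaining gap, closing speed, time to meet) can be checked with a short script. This sketch uses Python’s `fractions.Fraction` so that 330/150 h stays exact. It also picks an arbitrary calendar date, since only the clock time matters.

```python
from datetime import datetime, timedelta
from fractions import Fraction


def meeting_time(
    distance_km: int,
    speed_a_kmh: int,
    speed_b_kmh: int,
    depart_a: datetime,
    depart_b: datetime,
) -> datetime:
    """Return when two trains travelling toward each other meet.

    Assumes train A departs first (or at the same time) and has not yet
    reached the other city when train B sets off.
    """
    # Head start in exact hours (7200 s -> 2 h).
    head_start_h = Fraction(int((depart_b - depart_a).total_seconds()), 3600)
    # Distance still separating the trains when B departs: 450 - 60*2 = 330 km.
    gap_km = distance_km - speed_a_kmh * head_start_h
    if gap_km <= 0:
        raise ValueError("train A reaches the other city before train B departs")
    # Moving toward each other, the gap closes at the sum of the speeds.
    hours = gap_km / (speed_a_kmh + speed_b_kmh)
    # Convert to whole seconds (truncating any fraction of a second).
    return depart_b + timedelta(seconds=int(hours * 3600))


if __name__ == "__main__":
    day = datetime(2000, 1, 1)  # arbitrary date; only the clock time matters
    meet = meeting_time(450, 60, 90, day.replace(hour=9), day.replace(hour=11))
    print(meet.strftime("%H:%M"))  # 13:12, i.e. 1:12 PM
```

The common trap is forgetting Train A’s two-hour head start. Without it you would divide 450 km by 150 km/h and get 3 hours after 11:00 AM, which is 2:00 PM.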

Trust me, I had to solve a variant of this question in my application development exam, and although it is easy, it is common to get tripped up in the unit conversions. Let’s check how the models perform.

Qwen3 (shortened)

Train A travels 60 km/h × 2 h = 120 km by 11:00 AM, leaving 330 km between the trains. They close the gap at a combined speed of 150 km/h (60 + 90), meeting in 330/150 = 2.2 h (2 hours 12 minutes) after 11:00 AM, which results in a meeting time of 1:12 PM.

Calculations:
- Head start: 60 × 2 = 120 km
- Remaining distance: 450 − 120 = 330 km
- Relative speed: 60 + 90 = 150 km/h
- Time to meet: 330/150 = 2.2 hours = 2 h 12 min

Meeting time: 11:00 AM + 2 h 12 min = 1:12 PM

DeepSeek R1 (shortened)

The first train (Train A) departs at 9:00 AM at 60 km/h and covers 120 km by 11:00 AM, leaving a remaining distance of 330 km. The second train (Train B) departs at 11:00 AM at 90 km/h, and the two trains approach each other at a combined speed of 150 km/h (60 + 90). They meet after 2.2 hours (2 hours and 12 minutes), at 1:12 PM. Key Calculations: 1. Distance covered by Train A by 11:00 AM: 60 km/h * 2 = 120 km 2. Remaining distance: 450 km - 120 km = 330 km 3. Relative speed: 60 km/h + 90 km/h = 150 km/h 4. Time to meet: 330 km / 150 km/h= 2.2 h = 2 hours and 12 minutes Meeting time: 11:00 AM + 2h 12 min = 1:12 PM

Excellent, both models aced it. They not only returned the output but also showed detailed calculation steps.

However, Qwen3 was faster and produced a more step-by-step solution, whereas DeepSeek R1 tried to tackle the problem in two different ways.

It started with a number-based calculation, shifted to algebra, got sidetracked, and then returned to the pure calculation approach. This also explains why it took so long.

So, I would prefer Qwen3 here, as it tackles the problem directly and derives the solution through straightforward arithmetic.

Fuel Calculation for a 20-Minute Race

(Skill Tested: Multi-step calculations, unit conversions, practical application in a racing context)

I came across this question in a Reddit thread that drew a lot of interaction. According to the poster, and confirmed by my own tests, models often fail it because of rounding errors, which makes it ideal for our use case.

Prompt: I’m playing Assetto Corsa Competizione, and I would be grateful if you could tell me how many litres of fuel to take in a race. The qualifying time was 2:04.317, the race is 20 minutes long, and the car uses 2.73 litres per lap

According to the poster:

This is actually really hard. It requires the model compute the number of laps (9.x) then round up because a partial lap isn’t possible (10) then multiply by the liters/lap to get the correct answer of 27.3L, with bonus points for suggesting an extra liter or two.

The most common failures I see are in forgetting to round up and then doing the final multiply totally wrong.
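The poster’s recipe (count the laps, round up to a whole lap, then multiply) translates directly into code. This sketch uses `decimal.Decimal` to avoid binary floating-point noise in the litre figure. The optional `spare_laps` parameter is a hypothetical extra, not part of the prompt, that models the safety buffer. It defaults to zero, so the base answer is the 27.3 L the poster expects.

```python
import math
from decimal import Decimal


def lap_time_seconds(lap: str) -> Decimal:
    """Convert an 'M:SS.mmm' lap time such as '2:04.317' into seconds."""
    minutes, seconds = lap.split(":")
    return int(minutes) * 60 + Decimal(seconds)


def fuel_needed(
    lap: str, race_minutes: int, litres_per_lap: str, spare_laps: int = 0
) -> tuple[int, Decimal]:
    """Return (laps to fuel for, litres), rounding a partial final lap up to a full lap."""
    race_s = Decimal(race_minutes * 60)
    # 1200 / 124.317 is about 9.65 laps; you cannot stop mid-lap, so round up to 10.
    laps = math.ceil(race_s / lap_time_seconds(lap))
    total_laps = laps + spare_laps  # spare_laps: optional safety buffer (hypothetical extra)
    return total_laps, total_laps * Decimal(litres_per_lap)


if __name__ == "__main__":
    print(fuel_needed("2:04.317", 20, "2.73"))  # (10, Decimal('27.30'))
```

Rounding the lap count up before multiplying is the step that separates 27.3 L from the tempting but wrong 9.65 × 2.73 ≈ 26.3 L.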

Another common behaviour across models is adding a safety buffer in some form, which leads to misguided steps and a wrong answer. Let’s see whether our models can avoid these pitfalls.

Qwen 3

To determine the required fuel load for a 20-minute race in Assetto Corsa Competizione, follow these steps: 1. Convert Lap Time to Seconds: Qualifying time = 2:04.317 = 2×60 + 4.317 = 124.317 seconds per lap. 2. Calculate Total Race Duration in Seconds: 20 minutes × 60 = 1200 seconds. 3. Estimate Number of Laps in the Race: 1200/124.317 ≈ 9.65 laps. 4. Compute Fuel Requirement: Multiply the number of laps (including the partial lap) by fuel consumption per lap: 9.65 laps × 2.73 liters/lap ≈ 26.35 liters. 5. Safety Margin (Optional): To account for unexpected variations (e.g., off-track moments, traffic), round up to 27 litres. Final Recommendation: • Precise Calculation: 26.4 litres (exact amount for ~9.65 laps). • With Safety Margin: 27 litres (ensures completion of 10 laps). In ACC, fuel can be set with decimal precision, so 26.4 liters is sufficient for the race duration. However, 27 liters is safer to avoid running dry

DeepSeek R1

To determine the fuel needed for your 20-minute race in Assetto Corsa Competizione: 1. Convert Qualifying Time to Seconds: 2 minutes + 4.317 seconds = 124.317 seconds/lap. 2. Convert Race Duration to Seconds: 20 minutes × 60 = 1200 seconds. 3. Calculate Total Laps: 1200 seconds / 124.317 seconds/lap ≈ 9.65 laps. Round up to 10 laps (since you can’t complete a partial lap). 4. Base Fuel Requirement: 10 laps × 2.73 litres/lap = 27.3 litres. 5. Add a Safety Buffer: ACC races often require an extra lap due to timing rules or unexpected situations (e.g., slow laps). Add 1–2 laps of fuel: 27.3 L + 2.73 L = 30.03 L. Final Recommendation: Take 30–31 litres to ensure you finish the race without running out. This includes 10–11 laps and minor pace or race format variations.

What surprised me is that DeepSeek R1 solved the problem correctly (27.3 L) and then, without being prompted, added its own safety-buffer variation as a recommendation.

Qwen3, on the other hand, failed to produce the correct answer of 27.3 L because it multiplied by the partial lap count instead of rounding up to 10 laps, though it was not off by much.

So, for complex math I would still use DeepSeek R1: it solved both challenges correctly.

WRITING

Most models perform reasonably well in this domain, so I prepared a complex, multi-step prompt to test the models’ writing ability.

Summarizing a Climate Modelling Research Article

(Skill Tested: Multi-section synthesis, causal inference, reasoning)

I like reading research papers and articles, but since they are often long, I read them section by section and summarise them afterwards.

Let’s replicate the same method with the LLMs and see what they generate.

Prompt:

Imagine a research article is split into three sections, provided below. After reading them, answer the final question.

Section A (Introduction): Describes the problem of climate modelling in arid regions and the limitations of previous approaches.

Section B (Methodology): Introduces a hybrid model combining satellite data with on-the-ground sensors using a deep ensemble neural network.

Section C (Results & Conclusion): The hybrid model outperforms all baselines, especially in extreme temperature prediction.

Question: Based on the full article, explain why the hybrid approach was more effective than traditional models, and summarise the primary advantage it offered in the context of arid regions.

This prompt tests the model’s causal inferencing, reasoning, and summarisation capabilities. Let’s check the results!

DeepSeek R1: climate_modelling_summary_deepseek.txt

I expected both models to produce three separate, reader-friendly section summaries, but only Qwen3 did so, demonstrating strong comprehension and inference capabilities.

DeepSeek R1 provided similar content, but it took me a long time to read through it and pick out the details; its output is not reader-friendly unless explicitly instructed.

So, for writing-related tasks, Qwen3 would be my open-source choice.

This marks the end of the tests, with Qwen 3 taking the lead.

Final Thoughts

Qwen 3, especially the 235B flagship, holds its own against models like GPT-4o, DeepSeek R1, and Grok 3.

With strong coding and math skills, and a Mixture of Experts design, it delivers top-tier results at a much lower inference cost.

The 30B version is also impressive. It is lightweight, runs locally, is developer-friendly, and on Qwen’s reported benchmarks matches or exceeds models like Gemma 3 and DeepSeek-V3.

The Alibaba Qwen team has recently released the Qwen 3 Series, including two standout models: the 235B parameter Moe model (with 22B active parameters) and a lightweight 30B version (3B active).

The Qwen3-235b-A22B model competes with giants like DeepSeek R1, Grok-3, and Gemini 2.5 Pro, but it does so with fewer active parameters, faster inference, and open-source accessibility with Apache 2.0 license. Apparently, every lab suffers from the same affliction: an uncanny talent for outrageously long model names.

Anyway there is also a 30b model, the Qwen3-30 B-A3B outcompetes the previous Qwq-32 B with 10 times the activated parameters, and a small model like the Qwen3-4B can rival the performance of Qwen2.5-72 B-Instruct.

This is such a good time for open-source model enthusiasts.

Best part, takes fraction of cost per million input and output token, compared to SOTA models.

Let’s look at some of the key details of new Qwen 3 and then put it to test!

TL;DR

Qwen 3 consistently produced better, more functional, and user-friendly code for tasks.

Both Qwen 3 and DeepSeek R1 correctly solved logic puzzles but it had better output structure.

While both performed well on simpler math, DeepSeek R1 more accurately solved a complex multi-step calculation problem where Qwen 3 slightly missed, suggesting varied results.

Qwen 3 excelled at researching, summarizing and structuring text.

Overall, Qwen3 is a highly capable, efficient open-source choice, strong in coding/writing, while DeepSeek R1 holds an edge in complex math and reasoning speed.

Qwen3 Key Details

Here is all the essential key information for Qwen 3 you need to know:

Feature | Highlights |

|---|---|

Motto | Think Deeper, Act Faster |

Variants | MoE models: Qwen 3-235B-A22B (22 B active) • Qwen 3-30B-A3B (3 B active) |

Architecture | Mixture-of-Experts for the two flagship models (≈ 10 % of parameters active per forward pass → big inference savings) • standard dense transformer for the smaller set |

Benchmarks where it’s on top | HumanEval (code), MATH, GSM8K, Big-Bench Hard, BoolQ, ARC-Challenge |

“Hybrid Thinking” mode | Toggle between instant answers and step-by-step reasoning for tasks that need more chain-of-thought |

Language coverage | 119 languages & dialects (huge leap for multilingual apps) |

MCP (Model Context Protocol) | Everything except the 235 B giant is practical on beefy local hardware or multi-GPU rigs; official guides name Ollama, LM Studio, llama.cpp, etc. |

Pre-training corpus size | 36 trillion tokens—about 2× Qwen 2.5 |

License | Fully open-weight under Apache 2.0 (commercial-friendly) |

Local deployment | Everything except the 235 B giant is practical on beefy local hardware or multi-GPU rigs; official guides name Ollama, LM Studio, llama.cpp, etc. |

Additionally, Qwen3-235b-A22 B outperformed others on tasks like:

Coding – beat models like DeepSeek R1, Gemini 2.5 Pro, OpenAI O3 Mini, and 01. Though highly doubtful.

Mathematics – surpassed all major competitors in problem-solving and step-by-step accuracy

General Reasoning – outcompeted top-tier models in logical and multi-step reasoning tasks

Reading Comprehension – excelled in integrating multi-section ideas and inferencing

Logical Deduction (Puzzles) – solved complex deduction tasks better than many others

& Qwen3-30b-A3B performed competitively on tasks like:

Coding & Math – matched or exceeded lighter models like GPT-4 Omni, Gemma 3, DCV3.

Efficiency Benchmarks – achieved strong performance with only 3B active params.

General Use Benchmarks – handled various tasks comparably to larger models.

With such impressive results, benchmarks, and task support, it is easy to get lost.

So, let’s evaluate the model’s performance for real-world use cases across different domains and compare results against SOTAS like Deepseek R1.

So, let’s begin!

CODING

The first task we will check the model on is coding, nothing fancy here, let’s get straight to testing.

Functional Sticky Note App

(Skill Tested: Front-end UI development, DOM manipulation, interactivity)

Sticky notes are a perfect productivity tool, and I use them quite often, so let’s see how both models perform when generating a functional sticky note web app.

Prompt: Create a front-end for a modern note-taking app. Make it so that you can add sticky notes. Add snip/notes drag functionality, too.

I only added a drag-and-drop requirement on top of the basic app. Let’s check the result.

Qwen 3

Code: note_taker_qwen.html

Output (from the artefacts window)

DeepSeek R1

Code: note_taker_deepseek.html

Output

Both models did a fine job, but Qwen3’s output was faster, more consistent, and more user-friendly.

So, for simple tasks like this, I would go with Qwen 3, as it understands nuances and requirements well.

Now on to the next test.

Conway’s Game of Life (Code)

(Skill Tested: Matrix logic, algorithm implementation, terminal rendering)

Conway’s Game of Life is a cellular automaton (a zero-player game) with the following rules:

Any live cell with fewer than two live neighbours dies (underpopulation).

Any live cell with more than three live neighbours dies (overpopulation).

Any live cell with two or three live neighbours continues to live.

Any dead cell with exactly three live neighbours comes to life.

Game Workings:

The starting layout is called the seed of the system.

The first generation is created by simultaneously applying the rules to every cell in the seed, whether it is alive or dead.

All changes—cells being born or dying—happen at once in a step called a tick.

Each new generation depends only on the one before it.

The same rules are then applied repeatedly to create each new generation until the specified number of generations is reached.

Given the rule-based decision-making involved, it seemed a good question for testing how well the models turn logic into code.
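The rules and the tick described above fit in a few lines of Python. Below is a minimal sketch of my own (not either model’s output). It assumes the board is stored as a set of live (row, col) cells on an unbounded grid. The function names `step` and `render` are hypothetical and chosen for illustration.

```python
from collections import Counter


def step(live):
    """Apply one tick of Conway's rules to a set of live (row, col) cells."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    # All counts are taken from the old generation, so every cell updates at once.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth or survival: exactly 3 neighbours, or a live cell with exactly 2.
    # Every other cell dies or stays dead (underpopulation / overpopulation).
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def render(live, rows, cols):
    """Draw the cells inside a rows x cols window as text ('#' = live)."""
    return "\n".join(
        "".join("#" if (r, c) in live else "." for c in range(cols))
        for r in range(rows)
    )


if __name__ == "__main__":
    # Seed: a horizontal "blinker", which oscillates with period 2.
    board = {(1, 0), (1, 1), (1, 2)}
    for generation in range(3):
        print(f"Generation {generation}:")
        print(render(board, 3, 3))
        board = step(board)
```

Using a sparse set instead of a fixed 2D array avoids edge-wrapping decisions. A terminal program would still need a fixed window for display, which is what `render` provides.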

Prompt: Create a Python implementation of Conway’s Game of Life that runs in the terminal. The program should accept an initial state, run for a specified number of generations, and display each generation in the terminal.

I made no specific mention of testing the code. Let’s check the results.

Qwen3

Code: game_of_live_qwen.py

Output

DeepSeek R1

Code: game_of_life_deepseek.py, test file: blinker.txt, terminal command: terminal_command.bash

Output

What I liked straightaway about Qwen3 is that it provided a sample test case, making the code easier to test. It also kept the implementation simple, just a single script, which is good for prototyping.

This was not the case with DeepSeek R1. It provided two files, a main script and a test file (and explained how to run them only after I asked, as a vibe coder would). Its implementation also differed from Qwen3’s and had performance issues.

Again, I would pick Qwen3 for coding tasks, as its code was faster, more reliable, more accurate, and better optimised.

Butterfly SVG Generation

(Skill Tested: SVG generation, geometric reasoning, visual symmetry)

SVG stands for Scalable Vector Graphics and is widely used in graphic design and front-end development. So, let’s code a butterfly ourselves.

Prompt

Generate SVG code for a simple butterfly shape. The butterfly should have symmetrical wings and basic body parts (head, thorax, abdomen). Use basic SVG shapes and keep the design relatively recognisable but straightforward.

The prompt specifically asks for symmetry so that we can check the models’ visual reasoning. Let’s check the results.
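For reference, one simple way to guarantee wing symmetry is to draw the wings once and then mirror them with an SVG transform. The sketch below is my own hypothetical example and not either model’s output. The function name, shapes, sizes, and colours are arbitrary assumptions.

```python
def butterfly_svg(width=200, height=200):
    """Return an SVG string for a simple, symmetric butterfly (illustrative only)."""
    cx = width // 2  # vertical axis of symmetry
    # Wings are drawn once, on the right side of the axis ...
    right_wings = (
        f'<ellipse cx="{cx + 40}" cy="75" rx="38" ry="30" fill="orange"/>'
        f'<ellipse cx="{cx + 30}" cy="130" rx="25" ry="22" fill="gold"/>'
    )
    # ... then mirrored: translate(2*cx, 0) scale(-1, 1) maps x to 2*cx - x.
    left_wings = f'<g transform="translate({2 * cx},0) scale(-1,1)">{right_wings}</g>'
    body = (
        f'<circle cx="{cx}" cy="55" r="8" fill="black"/>'  # head
        f'<ellipse cx="{cx}" cy="85" rx="7" ry="18" fill="black"/>'  # thorax
        f'<ellipse cx="{cx}" cy="130" rx="5" ry="28" fill="black"/>'  # abdomen
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}" '
        f'viewBox="0 0 {width} {height}">{right_wings}{left_wings}{body}</svg>'
    )


if __name__ == "__main__":
    print(butterfly_svg())
```

Mirroring with a transform means the two halves cannot drift apart, unlike hand-placing each left-wing shape.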

Qwen 3

Code: svg_butterfly_qwen3.xml

Output

DeepSeek R1

Code: svg_butterfly_deepseek.xml

Output

Both models produced symmetric results with distinct body parts.

However, Qwen3 produced the better output and followed the instructions more closely, as the code shows.

DeepSeek R1’s output looks more cartoonish, and its code shows that the model skipped some of the instructions.

Honestly, I never expected Qwen3 to produce better output than Grok 3, but it beat it convincingly (Grok 3’s result was even worse than DeepSeek R1’s).

This marks the end of the coding and graphics tests, and Qwen3 took the lead.

Now let’s test the models on reasoning tasks, where DeepSeek R1 has truly shone!

REASONING

One of the top reasons for DeepSeek R1’s success was its reasoning ability. I even wrote a blog post analysing its chain-of-thought (CoT) reasoning.

Let’s see if Qwen3 can beat DeepSeek at its own game.

Find The Guilty Person

(Skill Tested: Deductive logic, truth-table consistency)

Many open-source models have failed this puzzle in past tests. Cracking it requires strong deductive reasoning, making it ideal for this test.

Prompt

A detective investigates a case involving five suspects: Alice, Ben, Carla, David, and Eva. Only one of them is guilty. The detective receives the following statements: Alice says: “Ben is guilty.” Ben says, “Carla is lying.” Carla says, “Eva is guilty.” David says: “I didn’t do it.” Eva says, “Carla is telling the truth.” Exactly one person is telling the truth. Who is guilty, and how do you know?

Ans: David
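To double-check the answer key, the puzzle can be brute-forced as a truth table. For each possible culprit, evaluate every statement, then keep only the scenarios with exactly one true statement. This is my own verification sketch, not either model’s output.

```python
SUSPECTS = ["Alice", "Ben", "Carla", "David", "Eva"]


def statements(guilty):
    """Truth value of each suspect's statement if `guilty` is the culprit."""
    carla = guilty == "Eva"  # Carla: "Eva is guilty."
    return {
        "Alice": guilty == "Ben",  # "Ben is guilty."
        "Ben": not carla,  # "Carla is lying."
        "Carla": carla,
        "David": guilty != "David",  # "I didn't do it."
        "Eva": carla,  # "Carla is telling the truth."
    }


def solve():
    """Return (culprit, truth-tellers) for every scenario with exactly one truth-teller."""
    solutions = []
    for guilty in SUSPECTS:
        truths = [name for name, is_true in statements(guilty).items() if is_true]
        if len(truths) == 1:
            solutions.append((guilty, truths))
    return solutions


if __name__ == "__main__":
    print(solve())  # [('David', ['Ben'])]
```

Exactly one scenario survives, which confirms both the answer (David) and the lone truth-teller (Ben).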

For fairness, only the question was provided. Let’s check the results.

Qwen 3

Conclusion: The only consistent scenario is when Ben is the only truth-teller, and David is the guilty person. This satisfies both conditions: Only one person is telling the truth (Ben). Only one person is guilty (David). All other statements are false, consistent with their being lies. Final Answer: David

DeepSeek R1

Why Other Suspects Don’t Fit: If Alice, Ben, Carla, or Eva were guilty, it would lead to more than one truthful statement, violating the rule. Only David's guilt results in exactly one truthful person (Ben) while keeping all others false. Final Answer: David is guilty, and Ben is the only one telling the truth

Both models performed the same truth-table consistency checks to arrive at the correct answer.

However, DeepSeek R1 was faster, taking around 80 seconds compared with Qwen3’s 120+ seconds, though Qwen3 generated a more structured answer that followed a four-step deduction process.

As for me, I would go for DeepSeek R1, as I want a quick solution.

Now let’s move on to the next test.

Museum Field Trip Planning

(Skill Tested: Constraint satisfaction, arithmetic reasoning, real-world planning)

I often go on trips with my friends, which involves a lot of real-world planning: booking a bus, booking a hotel, managing money, and so on.

This reasoning task draws on the same kind of planning, which makes it ideal for testing the models.

Prompt:

A group of students plan to go on a field trip to a museum. They need to decide how many buses to rent and how to divide the students among the buses. Each bus can hold up to 40 students, but the museum only accommodates 120 students. The group has a budget of $800 for the bus rental, and each bus costs $200 per day. How many buses should the group rent, and how many students should go on each bus, and what is the cost associated with the day? Explain your reasoning.

Ans: 3 buses, 40 students each, $200 per bus
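Here is a quick sketch of the arithmetic behind the answer key. It assumes all 120 students the museum can accommodate go on the trip, since the prompt leaves the group size implicit. `plan_buses` is a hypothetical helper.

```python
import math


def plan_buses(students, bus_capacity, cost_per_bus, budget):
    """Return (buses, max_students_per_bus, total_daily_cost) for a rental plan."""
    buses = math.ceil(students / bus_capacity)  # fewest buses that fit everyone
    per_bus = math.ceil(students / buses)  # split as evenly as possible
    total_cost = buses * cost_per_bus  # rental cost for the day
    if total_cost > budget:
        raise ValueError("plan exceeds the budget")
    return buses, per_bus, total_cost


if __name__ == "__main__":
    print(plan_buses(students=120, bus_capacity=40, cost_per_bus=200, budget=800))
    # (3, 40, 600)
```

Three buses at $200 each come to $600 for the day, which is within the $800 budget. A fourth bus would also be affordable but is unnecessary.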

Again, for fairness, only the question was provided. Let’s check the results.

Qwen 3

Final Answer: The group should rent 3 buses with 40 students on each bus.

DeepSeek R1

The group should rent 3 buses, and each bus should carry 40 students.

On the first try, both models omitted the last requirement, the cost associated with the day. On the second try, they gave the total cost instead of the per-bus cost for the day, possibly because the prompt was unclear.

Again, DeepSeek R1 was faster (95 seconds vs. Qwen3’s 105 seconds), but Qwen3 took the lead on output structure.

So, in essence, picking a model for reasoning tasks is a matter of preference. Both models performed equally well and stumbled on the same prompt.

If you prefer speed, go for DeepSeek R1; if you care more about output quality, Qwen 3 is the better choice.

This marks the end of the reasoning tests, with a tie. Now let’s move on to the next test: maths.

MATHS

The official Qwen3 documents state that its flagship model beats other models on many math-related benchmarks, such as MATH, GSM8K, and more.

So, let’s test its mathematical ability and see whether it lives up to those claims.

Train Timing Problem

(Skill Tested: Multi-step calculations, algebra, word problem reasoning)

This problem involves relative motion (distance = speed × time) and must be solved through a multi-step calculation.

Prompt

A train leaves City A at 9:00 AM, travelling at 60 km/h. Another train leaves City B at 11:00 AM, travelling toward City A at 90 km/h. The distance between City A and City B is 450 km. At what time do the two trains meet? Show all steps clearly.

Trust me, I had to solve a variant of this question in my application development exam. Though the question is simple, it is easy to get tripped up in the conversion. Let’s check how the models perform.

Qwen3 (Shortened)
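For reference, the expected calculation can be sketched in a few lines. The approach gives train A a head start, then closes the remaining gap at the combined speed. The sketch assumes train A has not reached City B before train B departs. `meeting_time` is a hypothetical helper, and the date is arbitrary.

```python
from datetime import datetime, timedelta


def meeting_time(dist_km, speed_a, start_a, speed_b, start_b):
    """Meeting time of two trains heading toward each other; train A leaves first."""
    head_start_h = (start_b - start_a).total_seconds() / 3600
    remaining_km = dist_km - speed_a * head_start_h  # gap when train B departs
    hours = remaining_km / (speed_a + speed_b)  # gap closes at the combined speed
    return start_b + timedelta(hours=hours)


if __name__ == "__main__":
    day = datetime(2025, 1, 1)  # arbitrary date; only the clock time matters
    t = meeting_time(450, 60, day.replace(hour=9), 90, day.replace(hour=11))
    print(t.strftime("%H:%M"))  # 13:12, i.e. 1:12 PM
```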

Train A travels 60 km/h × 2 h = 120 km by 11:00 AM, leaving 330 km between the trains. They close the gap at a combined speed of 150 km/h (60 + 90), meeting 330/150 = 2.2 h (2 hours 12 minutes) after 11:00 AM, which results in a meeting time of 1:12 PM. Calculations: - Head start: 60 × 2 = 120 km - Remaining distance: 450 − 120 = 330 km - Relative speed: 60 + 90 = 150 km/h - Time to meet: 330/150 = 2.2 hours = 2 h 12 min Meeting time: 11:00 AM + 2 h 12 min = 1:12 PM

DeepSeek R1 (Shortened)

The first train (Train A) departs at 9:00 AM at 60 km/h and covers 120 km by 11:00 AM, leaving a remaining distance of 330 km. The second train (Train B) departs at 11:00 AM at 90 km/h, and the two trains approach each other at a combined speed of 150 km/h (60 + 90). They meet after 2.2 hours (2 hours and 12 minutes), at 1:12 PM. Key Calculations: 1. Distance covered by Train A by 11:00 AM: 60 km/h * 2 = 120 km 2. Remaining distance: 450 km - 120 km = 330 km 3. Relative speed: 60 km/h + 90 km/h = 150 km/h 4. Time to meet: 330 km / 150 km/h= 2.2 h = 2 hours and 12 minutes Meeting time: 11:00 AM + 2h 12 min = 1:12 PM

Excellent, both models aced it. They not only returned the output but also showed detailed calculation steps.

However, Qwen3 was faster and produced a clearer step-by-step solution, whereas DeepSeek R1 tried to tackle the problem in two different ways.

It started with a numeric calculation, shifted to algebra, got confused, and then returned to the purely numeric approach, which explains why it took so long.

So, I would prefer Qwen3 here, as it tackled the problem directly and derived the solution with straightforward arithmetic.

Fuel Calculation for a 20-Minute Race

(Skill Tested: Multi-step calculations, unit conversions, practical application in a racing context)

I came across this question in a much-discussed Reddit thread. According to the poster, and in my own tests, models often fail it because of rounding errors, which makes it ideal for our use case.

Prompt: I’m playing Assetto Corsa Competizione, and I would be grateful if you could tell me how many litres of fuel to take in a race. The qualifying time was 2:04.317, the race is 20 minutes long, and the car uses 2.73 litres per lap

As per the post owner:

This is actually really hard. It requires the model compute the number of laps (9.x) then round up because a partial lap isn’t possible (10) then multiply by the liters/lap to get the correct answer of 27.3L, with bonus points for suggesting an extra liter or two.

The most common failures I see are in forgetting to round up and then doing the final multiply totally wrong.
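The post owner’s expected computation can be sketched as follows. Convert the lap time to seconds, estimate the laps, round up to a whole lap, then multiply by consumption, with no safety buffer. `race_fuel` is a hypothetical helper, and the lap time is assumed to use the "m:ss.sss" format from the prompt.

```python
import math


def race_fuel(lap_time, race_minutes, litres_per_lap):
    """Return (laps_needed, litres) for a timed race, without a safety buffer."""
    minutes, seconds = lap_time.split(":")  # "2:04.317" -> "2", "04.317"
    lap_seconds = int(minutes) * 60 + float(seconds)  # 124.317 s per lap
    laps = race_minutes * 60 / lap_seconds  # about 9.65 laps
    laps_needed = math.ceil(laps)  # a lap in progress must still be finished
    return laps_needed, round(laps_needed * litres_per_lap, 2)


if __name__ == "__main__":
    print(race_fuel("2:04.317", 20, 2.73))  # (10, 27.3)
```

The `round(..., 2)` only hides floating-point noise in the final multiplication. The rounding step that matters is `math.ceil` on the lap count.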

One thing all models tend to do on this question is add a safety buffer in some form, which leads to misguided steps and a wrong answer. Let’s see whether our models can avoid this.

Qwen 3

To determine the required fuel load for a 20-minute race in Assetto Corsa Competizione, follow these steps: 1. Convert Lap Time to Seconds: Qualifying time = 2:04.317 = 2 × 60 + 4.317 = 124.317 seconds per lap. 2. Calculate Total Race Duration in Seconds: 20 minutes × 60 = 1200 seconds. 3. Estimate Number of Laps in the Race: 1200 / 124.317 ≈ 9.65 laps. 4. Compute Fuel Requirement: Multiply the number of laps (including the partial lap) by fuel consumption per lap: 9.65 laps × 2.73 liters/lap ≈ 26.35 liters. 5. Safety Margin (Optional): To account for unexpected variations (e.g., off-track moments, traffic), round up to 27 litres. Final Recommendation: • Precise Calculation: 26.4 litres (exact amount for ~9.65 laps). • With Safety Margin: 27 litres (ensures completion of 10 laps). In ACC, fuel can be set with decimal precision, so 26.4 liters is sufficient for the race duration. However, 27 liters is safer to avoid running dry.

DeepSeek R1

To determine the fuel needed for your 20-minute race in Assetto Corsa Competizione: 1. Convert Qualifying Time to Seconds: 2 minutes + 4.317 seconds = 124.317 seconds/lap. 2. Convert Race Duration to Seconds: 20 minutes × 60 = 1200 seconds. 3. Calculate Total Laps: 1200 seconds / 124.317 seconds/lap ≈ 9.65 laps. Round up to 10 laps (since you can’t complete a partial lap). 4. Base Fuel Requirement: 10 laps × 2.73 litres/lap = 27.3 litres. 5. Add a Safety Buffer: ACC races often require an extra lap due to timing rules or unexpected situations (e.g., slow laps). Add 1–2 laps of fuel: 27.3 L + 2.73 L = 30.03 L. Final Recommendation: Take 30–31 litres to ensure you finish the race without running out. This includes 10–11 laps and minor pace or race format variations.

What surprised me is that DeepSeek R1 solved the problem correctly (27.3 litres) and then, without being prompted, added its own safety-buffer variation as a recommendation.

Qwen3, on the other hand, failed to produce the correct answer of 27.3 litres because it did not round the lap count up, though it was not off by much.

So, I would still use DeepSeek R1 for complex maths: it solved both challenges correctly.

WRITING

Most models perform reasonably well in this domain, so I prepared a complex, multi-step prompt to test the models’ writing ability.

Summarising a Climate Modelling Research Article

(Skill Tested: Multi-section synthesis, causal inference, reasoning)

I like reading research papers and articles, but since they are long, I often read them section by section and summarise them afterwards.

Let’s replicate the same method with the LLMs and see what they generate.

Prompt:

Imagine a research article is split into three sections, provided below. After reading them, answer the final question.

Section A (Introduction): Describes the problem of climate modelling in arid regions and the limitations of previous approaches.

Section B (Methodology): Introduces a hybrid model combining satellite data with on-the-ground sensors using a deep ensemble neural network.

Section C (Results & Conclusion): The hybrid model outperforms all baselines, especially in extreme temperature prediction.

Question: Based on the full article, explain why the hybrid approach was more effective than traditional models, and summarise the primary advantage it offered in the context of arid regions.

This prompt tests the model’s causal inferencing, reasoning, and summarisation capabilities. Let’s check the results!

DeepSeek R1: climate_modelling_summary_deepseek.txt

I expected both models to produce three separate, reader-friendly section summaries, but only Qwen3 did, showing strong comprehension and inference capabilities.

DeepSeek R1, on the other hand, provided similar content, but it took me a long time to read through it and extract the details. Its output is not reader-friendly unless you instruct it to be.

So, if I had to pick a model for a writing-related task, Qwen3 would be my open-source choice.

This marks the end of the tests, and Qwen 3 takes the lead.

Final Thoughts

Qwen 3, especially the 235B flagship, often matches or beats models like GPT-4o, DeepSeek R1, and Grok 3.

With strong coding and math skills and a Mixture-of-Experts design, it delivers top-tier results at a much lower inference cost.

The 30B version is also impressive. It is lightweight, runs locally, is developer-friendly, and is competitive with models like Gemma 3 and DeepSeek-V3.
