OpenAI has finally unveiled o3-Pro, a more expensive version of o3. It is available through the API and in the ChatGPT Pro plan, which costs $200 per month. o1-pro is still one of the best models I have used for raw reasoning, but lately I have been a fan of Claude Opus 4 and Gemini 2.5 Pro, two of the best coding models.

I badly wanted to see how o3-Pro performs, and curiosity got the better of me. I spent $10 on OpenRouter testing it against the other two so you can decide which one to go for without much ambiguity.
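You might want to run a similar comparison yourself. OpenRouter exposes an OpenAI-compatible chat completions endpoint, so one request shape works for all three models. The sketch below only builds the requests and does not send them. The model slugs (`anthropic/claude-opus-4`, `google/gemini-2.5-pro`, `openai/o3-pro`) are my assumptions and worth checking against OpenRouter's model list. `buildChatRequest` and `buildComparison` are hypothetical helper names.

```typescript
// Sketch: build identical OpenRouter chat-completion requests for each model.
// Assumption: the endpoint URL and model slugs should be verified against OpenRouter's docs.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface PreparedRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string; // JSON-encoded payload
}

const OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions";

// Assumed slugs for the three models compared in this post.
const MODELS: string[] = ["anthropic/claude-opus-4", "google/gemini-2.5-pro", "openai/o3-pro"];

// Build (but do not send) a single-turn request for one model.
function buildChatRequest(apiKey: string, model: string, prompt: string): PreparedRequest {
  const messages: ChatMessage[] = [{ role: "user", content: prompt }];
  return {
    url: OPENROUTER_URL,
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
    body: JSON.stringify({ model, messages }),
  };
}

// One request per model, all carrying exactly the same prompt.
function buildComparison(apiKey: string, prompt: string): PreparedRequest[] {
  return MODELS.map((model) => buildChatRequest(apiKey, model, prompt));
}
```

To send a request, pass `req.url` to `fetch` with `{ method: req.method, headers: req.headers, body: req.body }`. The reply text should be in `choices[0].message.content` of the JSON response, as with other OpenAI-style APIs. Keeping the builder free of side effects makes it easy to confirm every model gets the identical prompt.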

TL;DR

Claude Opus 4 was the best model across all tests.

Claude Opus 4 excels at following prompts, producing polished output, and understanding user intent.

Gemini 2.5 Pro is the most economical, with the best price-to-performance ratio; it's not even close.

You won't miss o3-Pro. It's expensive, slow, and not worth it unless your interest is research.

It didn't give me the o1-pro vibes.

SWE Benchmark and Pricing Comparison

| Model | Context window | SWE-bench accuracy | Hallucination rate* | Price (per 1M tokens) |
|---|---|---|---|---|
| Claude Opus 4 | 200K | 72.5% → 79.4%† | Low (–65% vs. earlier) | $15 in / $75 out |
| OpenAI o3-Pro | 200K | > 69.1% (exact TBD) | ~18% | $20 in / $80 out |
| Gemini 2.5 Pro | 1M (→ 2M) | 67.2% | 8.5% | ~$1.25 in / $10 out |

* Hallucination figures are those mentioned by the respective vendors and may vary in practice.

† 79.4 % achievable with extra parallel compute at inference time.
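To put the price column in concrete terms, here is a small sketch that turns per-million-token prices into a per-request cost. It assumes flat list prices exactly as in the table, with no tiering, caching, or discounts. The token counts in the example are made up for illustration.

```typescript
// Cost of one request from per-million-token list prices (values from the table above).
// Assumption: flat rates with no tiering, caching, or batch discounts.
interface Pricing {
  inputPerM: number; // USD per 1M input tokens
  outputPerM: number; // USD per 1M output tokens
}

const PRICES: { [model: string]: Pricing } = {
  "Claude Opus 4": { inputPerM: 15, outputPerM: 75 },
  "OpenAI o3-Pro": { inputPerM: 20, outputPerM: 80 },
  "Gemini 2.5 Pro": { inputPerM: 1.25, outputPerM: 10 },
};

// cost = (input tokens / 1M) * input price + (output tokens / 1M) * output price
function requestCostUSD(p: Pricing, inputTokens: number, outputTokens: number): number {
  return (inputTokens / 1e6) * p.inputPerM + (outputTokens / 1e6) * p.outputPerM;
}

// Price the same hypothetical workload for every model in the table.
function compareCosts(inputTokens: number, outputTokens: number): { [model: string]: number } {
  const out: { [model: string]: number } = {};
  for (const model in PRICES) {
    out[model] = requestCostUSD(PRICES[model], inputTokens, outputTokens);
  }
  return out;
}
```

Take 5,000 input tokens and 20,000 output tokens. That works out to about $1.58 for Claude Opus 4, $1.70 for o3-Pro, and about $0.21 for Gemini 2.5 Pro. For reasoning models, the hidden reasoning tokens are typically billed as output, so long thinking times can inflate the output side quickly.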

Coding Comparison

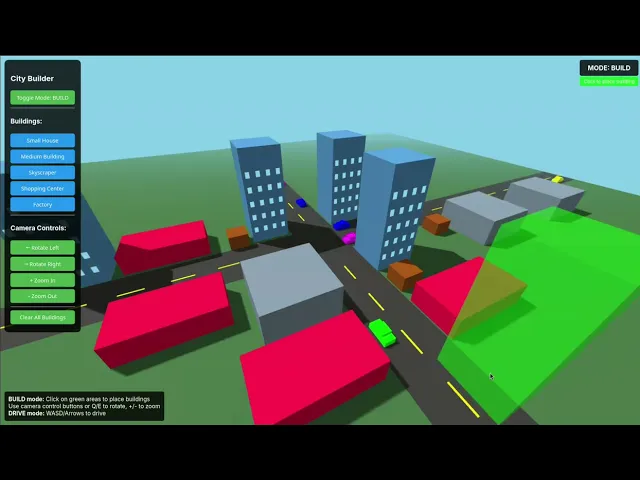

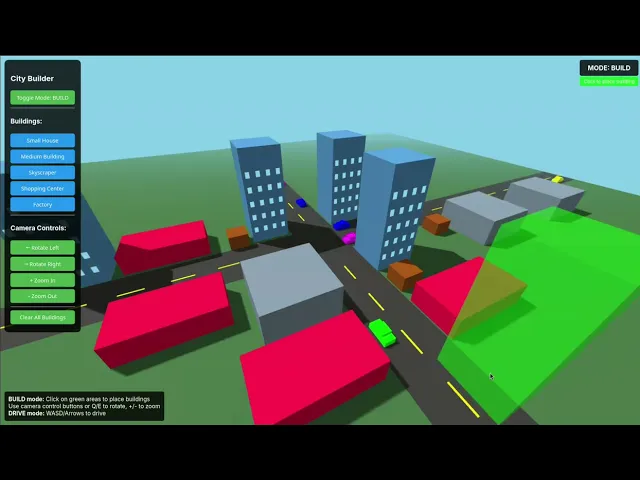

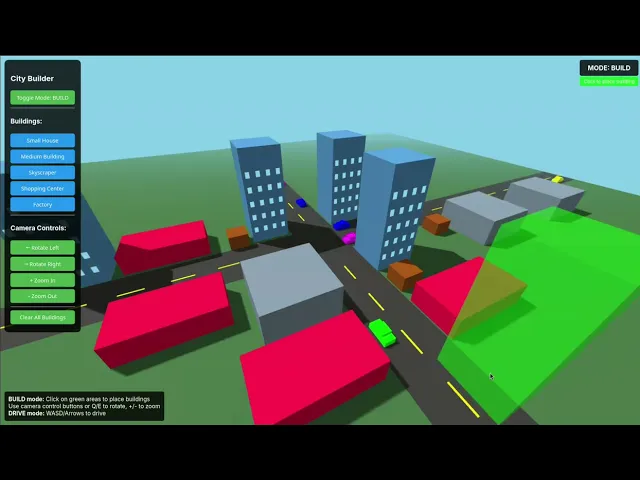

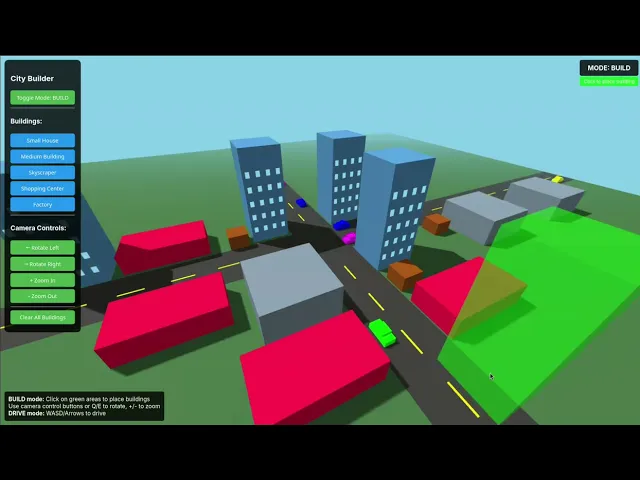

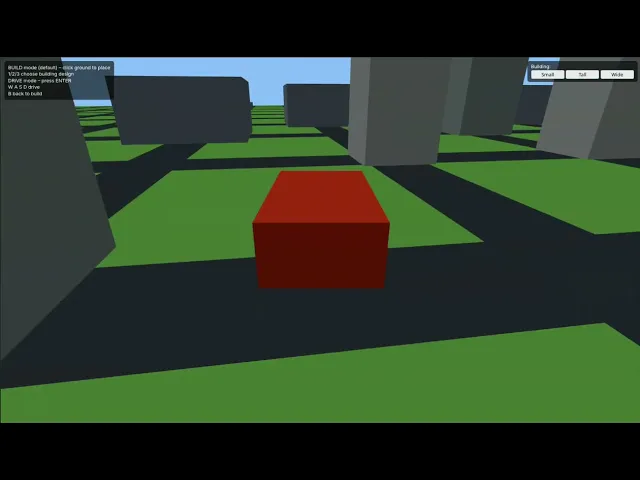

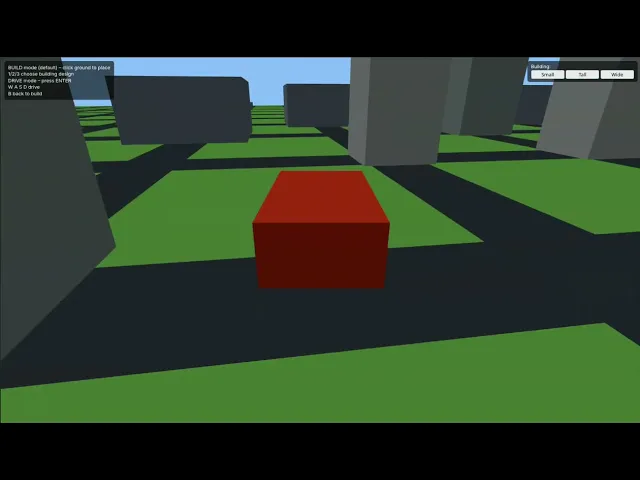

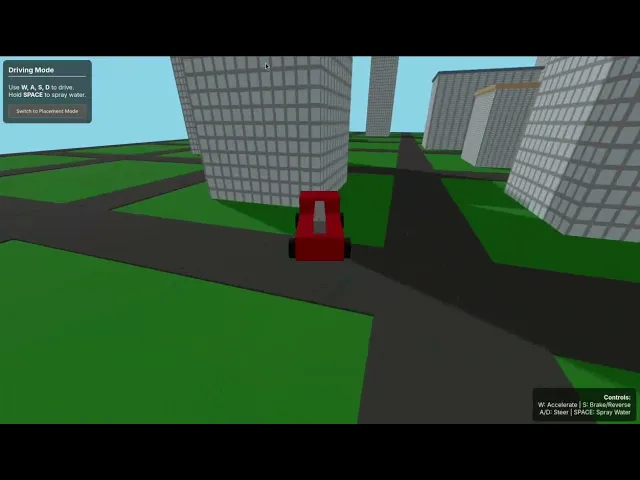

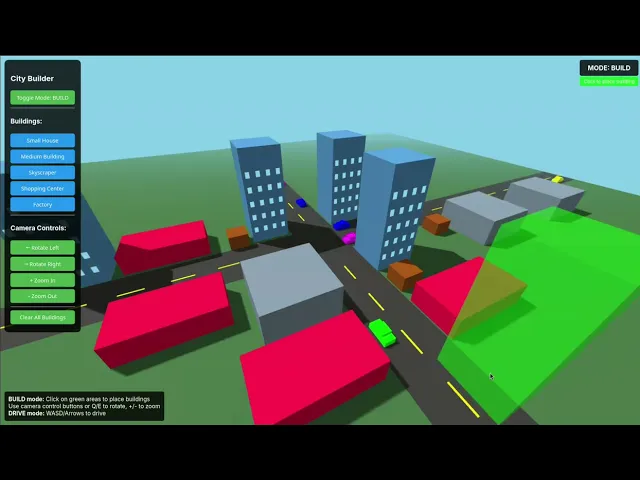

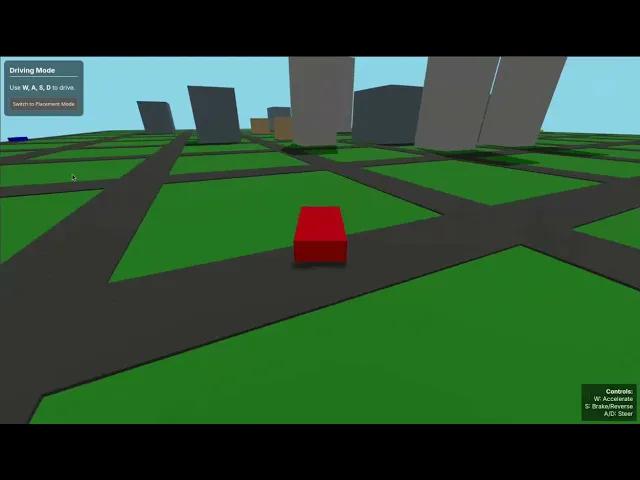

1. 3D Town Simulation

Prompt: Create a 3D game where I can place buildings of various designs and sizes, and drive through the town I've created. Add traffic to the road as well.

Response from Claude Opus 4

You can find the code it generated here: Link

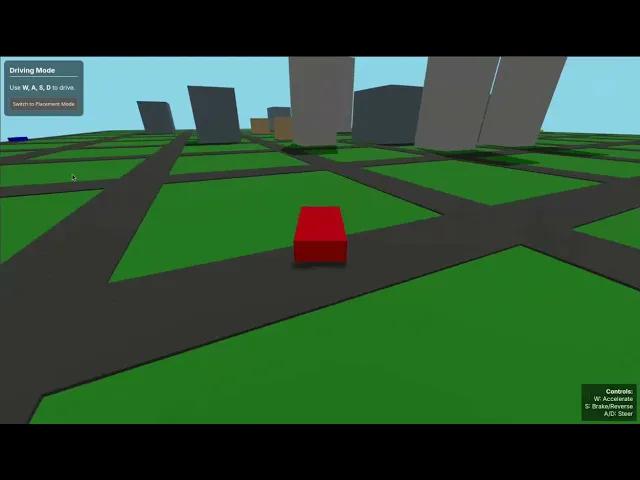

Here’s the output of the program:

Look how beautiful this is for a one-shot result. Everything works, and it has a polished UI with shadows. I can switch from build mode to drive mode, add buildings, and keep driving. There are no logical issues anywhere.

One thing missing is collision detection between the vehicle and the buildings, but I didn't ask for it, so that's fine.
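For reference, a vehicle-vs-building collision check can be as simple as axis-aligned bounding boxes on the ground plane. This is a minimal sketch, not what either model generated. `Box2D`, `overlaps`, and `tryMove` are hypothetical names, and the sketch assumes the boxes do not rotate with the car's heading. In an actual Three.js scene, `Box3.setFromObject` and `Box3.intersectsBox` do the same job in 3D.

```typescript
// Axis-aligned bounding box on the ground (XZ) plane.
interface Box2D { minX: number; maxX: number; minZ: number; maxZ: number; }
interface Vec2 { x: number; z: number; }

function boxAround(x: number, z: number, halfWidth: number, halfDepth: number): Box2D {
  return { minX: x - halfWidth, maxX: x + halfWidth, minZ: z - halfDepth, maxZ: z + halfDepth };
}

// Two AABBs overlap only if they overlap on both axes.
function overlaps(a: Box2D, b: Box2D): boolean {
  return a.minX < b.maxX && a.maxX > b.minX && a.minZ < b.maxZ && a.maxZ > b.minZ;
}

// Accept the proposed position only if the vehicle's box there hits no building;
// otherwise stay put (the simplest possible collision response).
function tryMove(pos: Vec2, next: Vec2, half: { w: number; d: number }, buildings: Box2D[]): Vec2 {
  const box = boxAround(next.x, next.z, half.w, half.d);
  for (const b of buildings) {
    if (overlaps(box, b)) return pos;
  }
  return next;
}
```

Refusing the move is the crudest response. Sliding along the wall, by retrying the X and Z components separately, usually feels better in a driving game.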

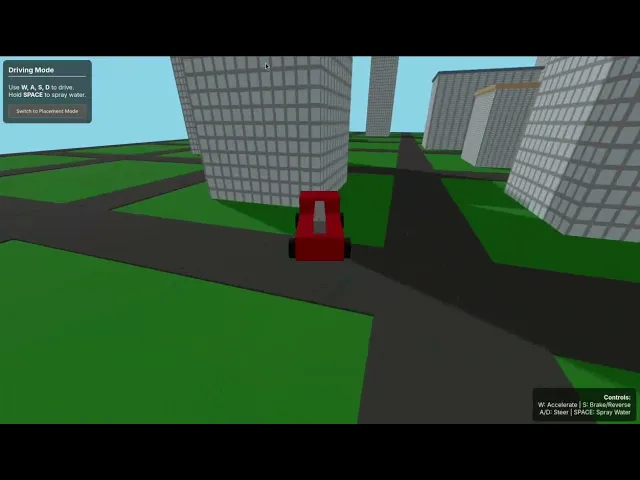

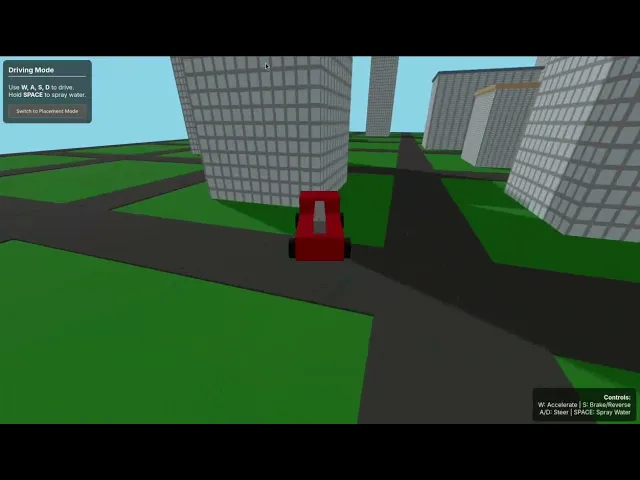

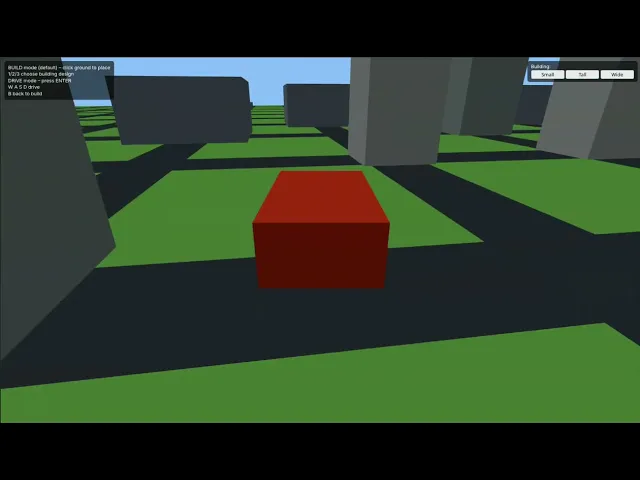

Response from Gemini 2.5 Pro

You can find the code it generated here: Link

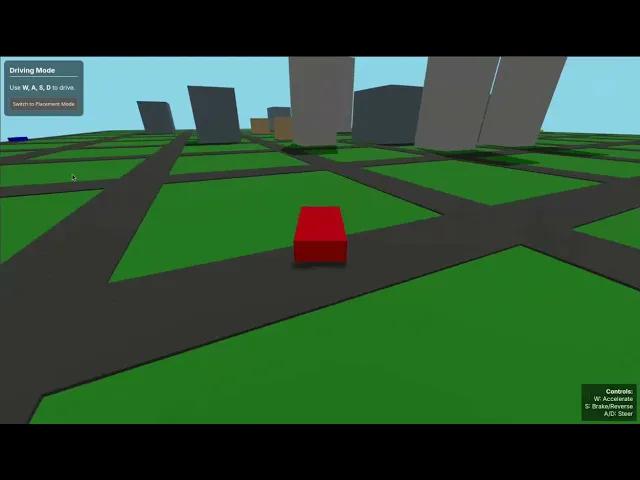

Here’s the output of the program:

This is great too. Buildings can only be placed in valid spots, not on the road. The overall UI and feel are somewhat average.
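Placement validation like this is often done on a tile grid. The click position is snapped to a cell, and any footprint that overlaps a road or an existing building is rejected. The sketch below assumes such a grid. `Tile`, `worldToCell`, `canPlace`, and `place` are hypothetical names rather than anything from the generated code.

```typescript
// Grid-based placement: a building may only occupy empty, non-road tiles.
type Tile = "empty" | "road" | "building";

// Snap a world-space point (e.g. a raycast hit on the ground) to a grid cell.
function worldToCell(x: number, z: number, tileSize: number): { col: number; row: number } {
  return { col: Math.floor(x / tileSize), row: Math.floor(z / tileSize) };
}

// Check every tile under a width x depth footprint anchored at (row, col).
function canPlace(grid: Tile[][], row: number, col: number, width: number, depth: number): boolean {
  for (let r = row; r < row + depth; r++) {
    for (let c = col; c < col + width; c++) {
      // Reject footprints that leave the map or touch a road or existing building.
      if (r < 0 || r >= grid.length || c < 0 || c >= grid[r].length) return false;
      if (grid[r][c] !== "empty") return false;
    }
  }
  return true;
}

// Mark the footprint as occupied only if the whole footprint is valid.
function place(grid: Tile[][], row: number, col: number, width: number, depth: number): boolean {
  if (!canPlace(grid, row, col, width, depth)) return false;
  for (let r = row; r < row + depth; r++) {
    for (let c = col; c < col + width; c++) grid[r][c] = "building";
  }
  return true;
}
```

The world-to-grid conversion can be off, for example by rounding instead of flooring or by swapping rows and columns. In that case buildings land in a different cell from the one you clicked. That is one way to end up with buildings on the road even when you aim for a valid spot.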

One thing I love is that it added collision detection, which Claude Opus 4 didn't. The response was also quick, arriving within seconds. For a model this affordable that outperforms most others, what more could you ask for, right?

Response from OpenAI o3-Pro

You can find the code it generated here: Link

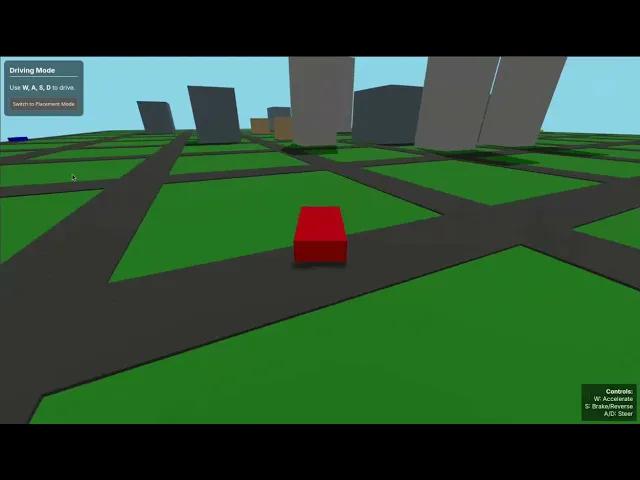

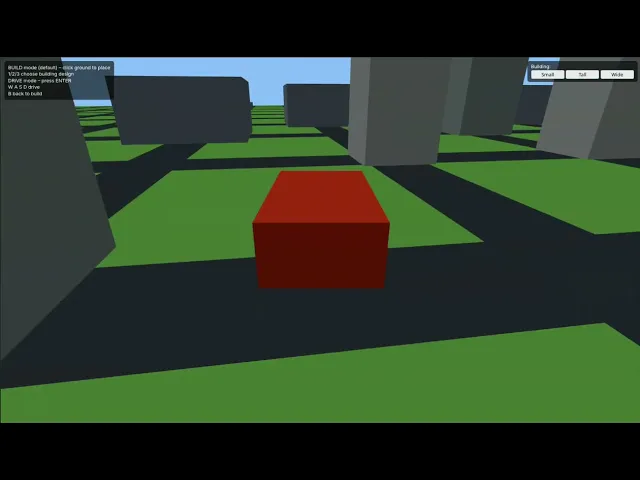

Here’s the output of the program:

As you can see, this is much worse than both other models. There is no validation for where buildings can be placed, and even when you try to put one in a valid spot, it ends up on the road.

The overall UI and feel are terrible, with flickering everywhere. The controls are completely inverted, and the colours are bizarre.
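Inverted controls often come from a sign mismatch between the input mapping and the scene's coordinate convention. This minimal sketch spells the convention out. It assumes Three.js-style axes: Y is up, the car's forward direction is -Z, and a positive rotation about Y turns left when seen from above. `stepCar` and its default `speed` and `turnRate` values are hypothetical.

```typescript
// Driving update on the XZ plane with Three.js-style conventions (assumed):
// Y up, the car's local forward is -Z, positive heading turns left seen from above.
interface CarState { x: number; z: number; heading: number; } // heading in radians
interface Input { forward: boolean; backward: boolean; left: boolean; right: boolean; }

// Rotate the local forward vector (0, 0, -1) by `heading` about +Y.
function forwardVector(heading: number): { x: number; z: number } {
  return { x: -Math.sin(heading), z: -Math.cos(heading) };
}

function stepCar(car: CarState, input: Input, dt: number, speed = 10, turnRate = 2): CarState {
  const throttle = (input.forward ? 1 : 0) - (input.backward ? 1 : 0); // +1 forward, -1 reverse
  const steer = (input.left ? 1 : 0) - (input.right ? 1 : 0); // left = +1, right = -1
  // Turning scales with throttle: no spinning in place, and steering flips in reverse.
  const heading = car.heading + steer * turnRate * dt * throttle;
  const f = forwardVector(heading);
  return {
    x: car.x + f.x * throttle * speed * dt,
    z: car.z + f.z * throttle * speed * dt,
    heading,
  };
}
```

Because the turn is scaled by the throttle, the car can't spin in place. Steering also flips automatically when reversing, much like a real car.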

Overall, this is a very disappointing result for such a simple prompt.

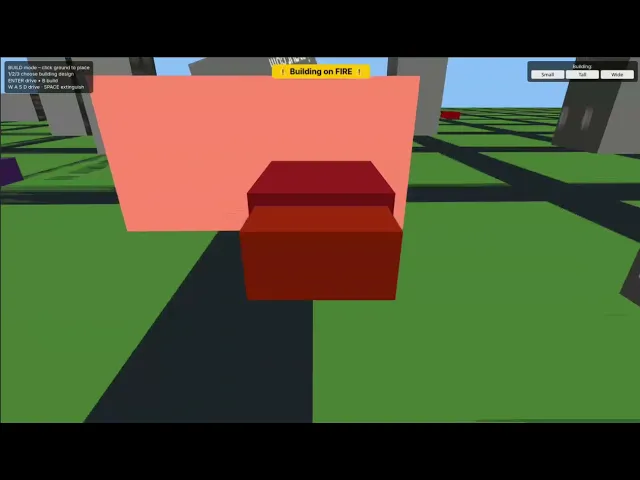

Follow-up prompt: Now, please create a firetruck and randomly set some buildings on fire. I must be able to extinguish the fire and add an alert in the UI when a building is on fire. Also, add a rival helicopter that detects the fire and attempts to extinguish it before me. Additionally, make the buildings look a bit more realistic and add fire effects to the affected buildings.

Now, let's see how well these models understand their own code and build new features on top of it.

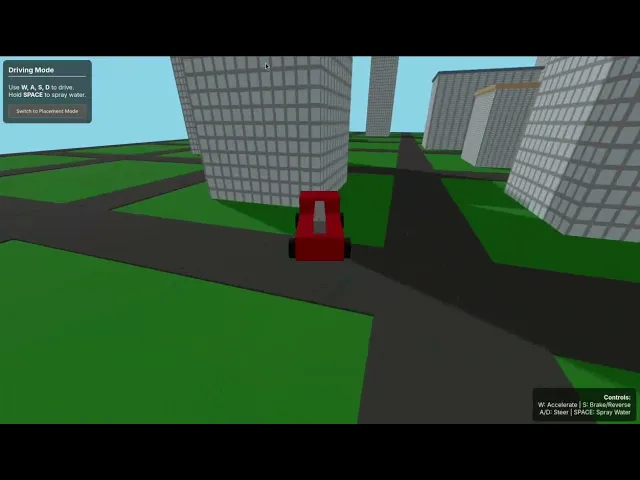

Response from Claude Opus 4

You can find the code it generated here: Link

Here’s the output of the program:

This is amazing. I can easily switch from a normal car to a fire truck, alerts appear, and everything works. The logic is all fine, except that the helicopter doesn't put out the fire.

This isn't a typical task it is likely to have been trained on. It's a completely random idea, and for something this random, the result is remarkably good. With a few follow-up prompts, I'm fairly certain it could resolve that issue too.
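Here is roughly the logic the rival helicopter needs. It finds the nearest burning building, flies toward it, and reduces the fire once it is within range. This is an illustrative sketch under my own assumptions: fire is an intensity between 0 and 1, flight is in a straight line, and `Building`, `Helicopter`, and `stepHelicopter` are hypothetical names. It is not a patch for the generated code. It also covers the prompt's UI alert as a simple list of messages.

```typescript
// Hypothetical fire mechanics: fire intensity in [0, 1], where 0 means not burning.
interface Building { id: number; x: number; z: number; fire: number; }
interface Helicopter { x: number; z: number; speed: number; douseRange: number; dousePerSec: number; }

// One UI alert per burning building.
function burningAlerts(buildings: Building[]): string[] {
  return buildings.filter((b) => b.fire > 0).map((b) => `Building ${b.id} is on fire!`);
}

// The rival targets whichever burning building is closest to it.
function nearestFire(h: Helicopter, buildings: Building[]): Building | undefined {
  let best: Building | undefined;
  let bestDist = Infinity;
  for (const b of buildings) {
    if (b.fire <= 0) continue;
    const d = Math.sqrt((b.x - h.x) * (b.x - h.x) + (b.z - h.z) * (b.z - h.z));
    if (d < bestDist) {
      bestDist = d;
      best = b;
    }
  }
  return best;
}

// One simulation tick for the rival helicopter (mutates h and the target building).
function stepHelicopter(h: Helicopter, buildings: Building[], dt: number): void {
  const target = nearestFire(h, buildings);
  if (!target) return; // nothing burning: hover in place
  const dx = target.x - h.x;
  const dz = target.z - h.z;
  const dist = Math.sqrt(dx * dx + dz * dz);
  if (dist > h.douseRange) {
    // Fly toward the fire, stopping at the edge of dousing range.
    const move = Math.min(h.speed * dt, dist - h.douseRange);
    h.x += (dx / dist) * move;
    h.z += (dz / dist) * move;
  } else {
    // In range: actually reduce the fire's intensity.
    target.fire = Math.max(0, target.fire - h.dousePerSec * dt);
  }
}
```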

Overall, this is a rock-solid response and probably the best you can expect from any model in one shot.

Response from Gemini 2.5 Pro

You can find the code it generated here: Link

Here’s the output of the program:

Now, this is interesting. For some reason, the controls are now completely inverted. It improved the UI a bit but introduced many new bugs.

Not the best you can expect, but it's good enough for this feature request.

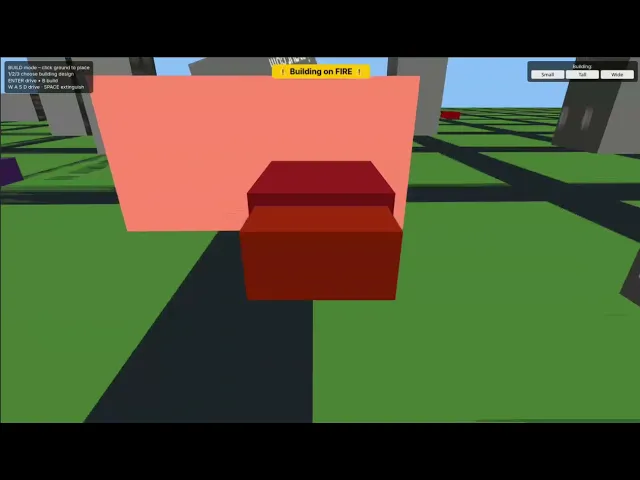

Response from OpenAI o3-Pro

You can find the code it generated here: Link

Here’s the output of the program:

This is another disaster. The controls are inverted, the fire truck doesn't work, the buildings request an image that doesn't exist, and there are numerous other issues. Bugs are everywhere, and nothing seems to work.

Even as a starting point for a project like this one, you're better off building it all from scratch.

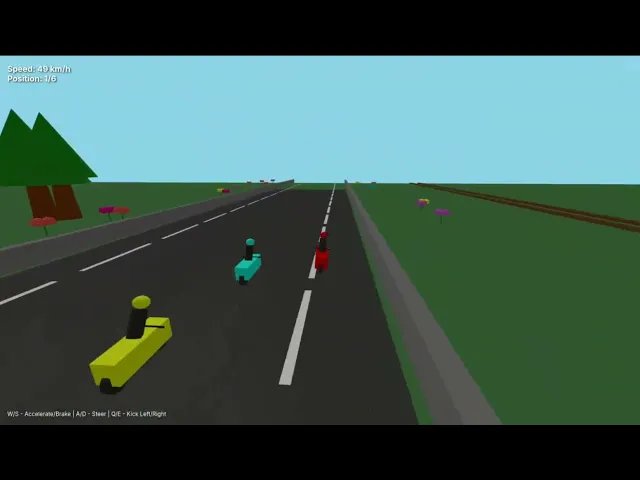

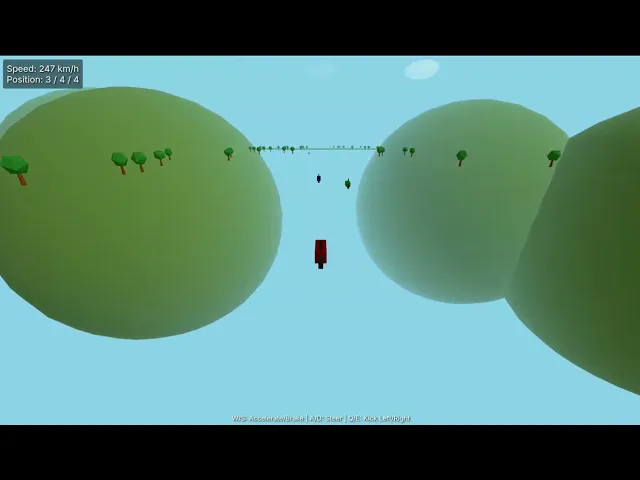

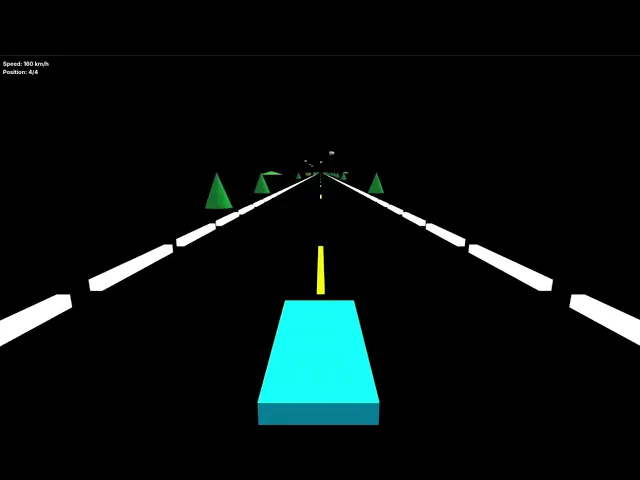

2. Bike Racing

Prompt: You can find the prompt I've used here: Link

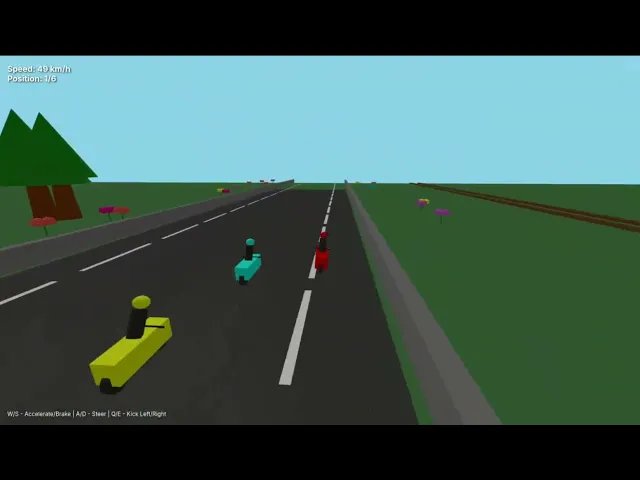

Response from Claude Opus 4

You can find the code it generated here: Link

Here’s the output of the program:

Unlike its previous implementations, this one has a few problems. The UI looks extremely good, and it added all the features I asked for. However, the game has no end, and the map is incomplete.

There isn't much more to say about the implementation, but there is room for improvement.

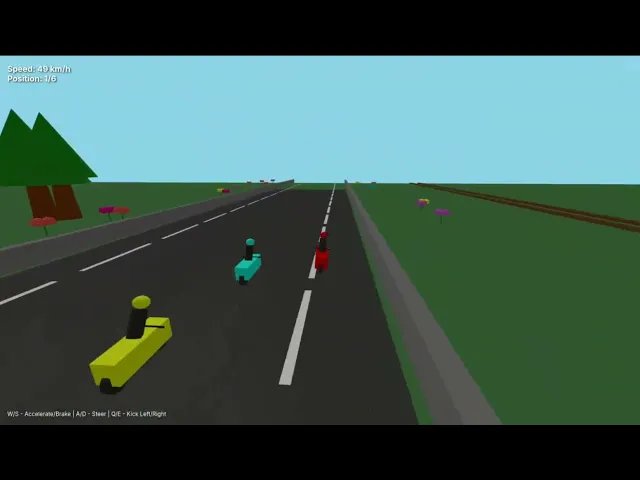

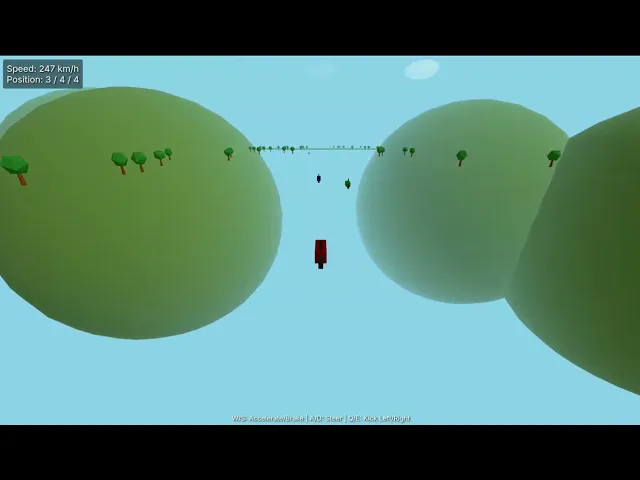

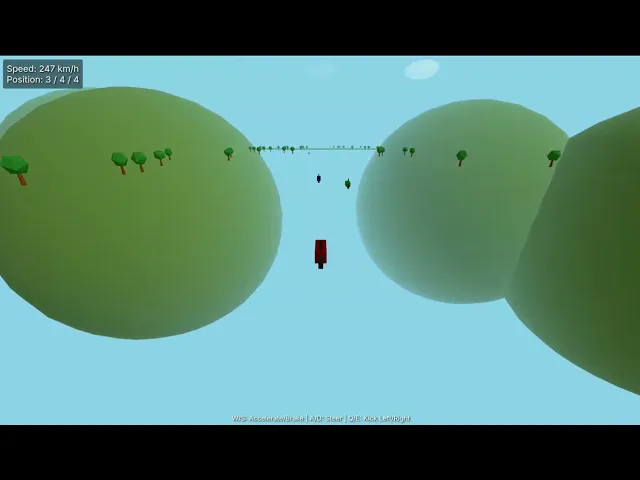

Response from Gemini 2.5 Pro

You can find the code it generated here: Link

Here’s the output of the program:

The result is similar, but with a poor UI. The game is endless, which is what I want, but it stops rendering the road midway and has no driving boundary, so you can move anywhere.

Not the best you'd expect from Gemini 2.5 Pro for this question.
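The missing road and the missing boundary both have common fixes. A fixed pool of road segments can be recycled so the track never runs out, and the rider's lateral position can be clamped to the road's width. This minimal sketch assumes positions are measured as distance along a straight track. `SEGMENT_LENGTH`, `ROAD_HALF_WIDTH`, and the helper names are hypothetical.

```typescript
// Endless-road sketch: segments behind the rider are recycled to the front,
// and the rider is clamped to the road's width. All sizes are hypothetical.
interface Segment { start: number; } // covers [start, start + SEGMENT_LENGTH)

const SEGMENT_LENGTH = 50;
const ROAD_HALF_WIDTH = 6;

function makeRoad(count: number): Segment[] {
  const road: Segment[] = [];
  for (let i = 0; i < count; i++) road.push({ start: i * SEGMENT_LENGTH });
  return road;
}

// Call once per frame with the rider's distance along the track.
function recycleSegments(road: Segment[], riderDist: number): void {
  let front = 0;
  for (const s of road) front = Math.max(front, s.start);
  for (const s of road) {
    // A segment entirely behind the rider jumps to just past the current front.
    while (s.start + SEGMENT_LENGTH < riderDist) {
      front += SEGMENT_LENGTH;
      s.start = front;
    }
  }
}

// Keep the rider between the road edges instead of letting them drive anywhere.
function clampToRoad(lateral: number): number {
  return Math.min(ROAD_HALF_WIDTH, Math.max(-ROAD_HALF_WIDTH, lateral));
}
```

Reusing a fixed number of segments also keeps the scene's object count constant, so an endless track does not slowly eat memory.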

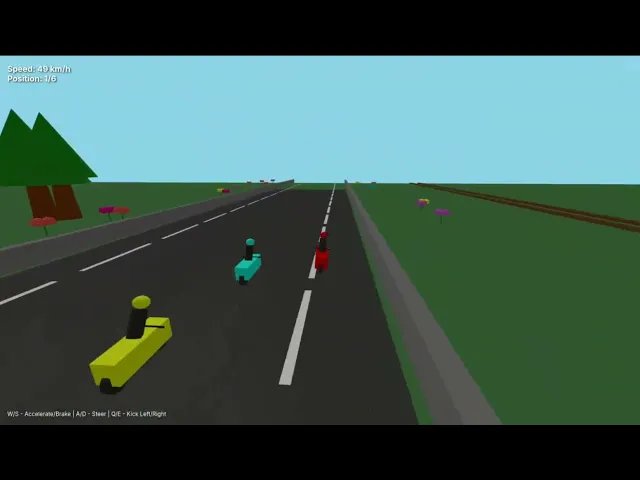

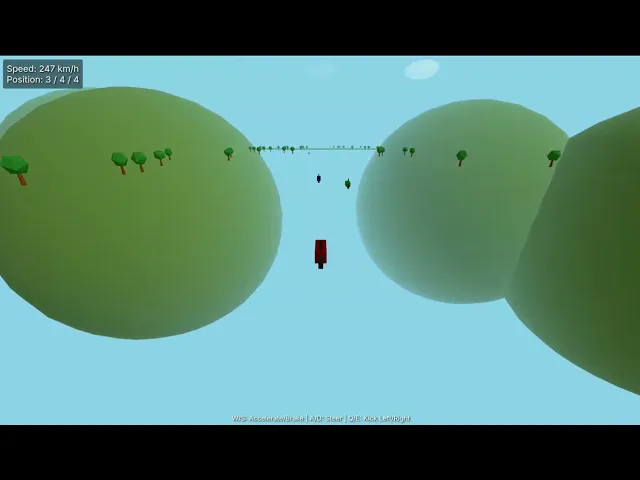

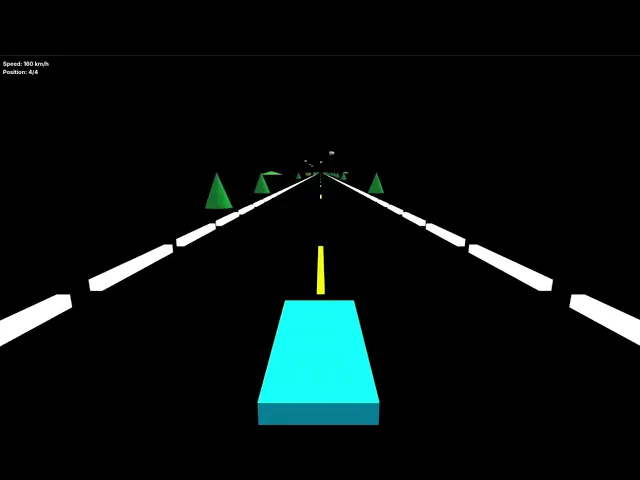

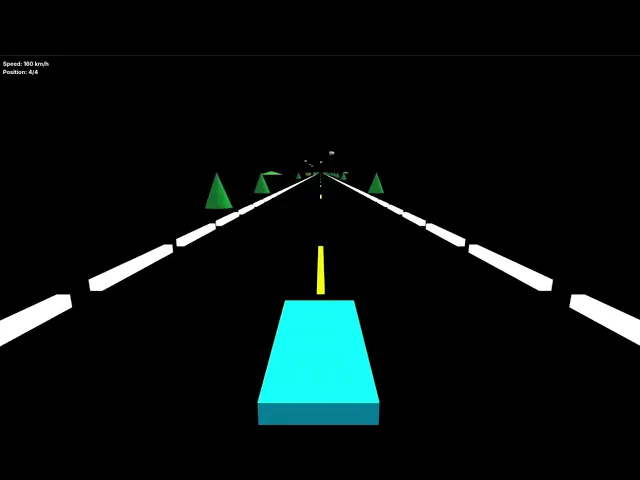

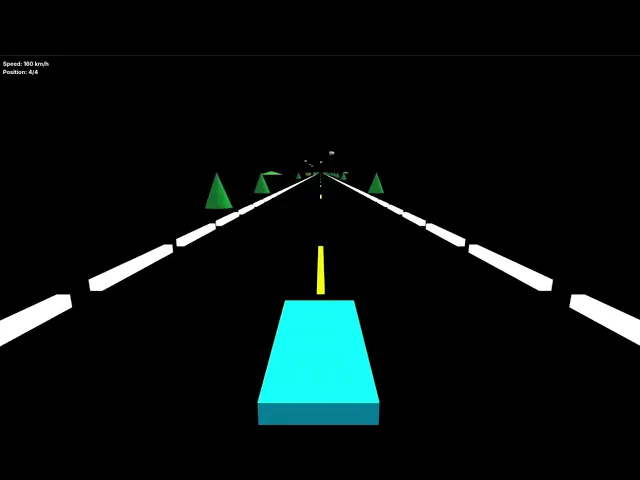

Response from OpenAI o3-Pro

You can find the code it generated here: Link

Here’s the output of the program:

This is slightly better than its earlier attempt, but still not satisfactory. The position calculation is incorrect, and the game somewhat forces a start at a single point, mimicking an endless run.

It's still not right and nowhere near the Claude Opus 4 or Gemini 2.5 Pro implementations.

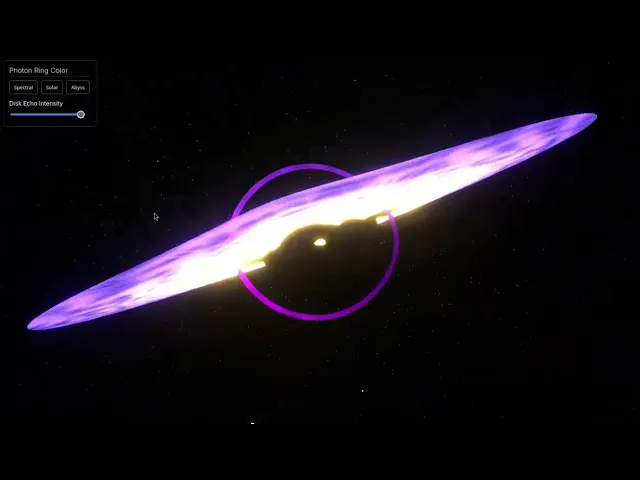

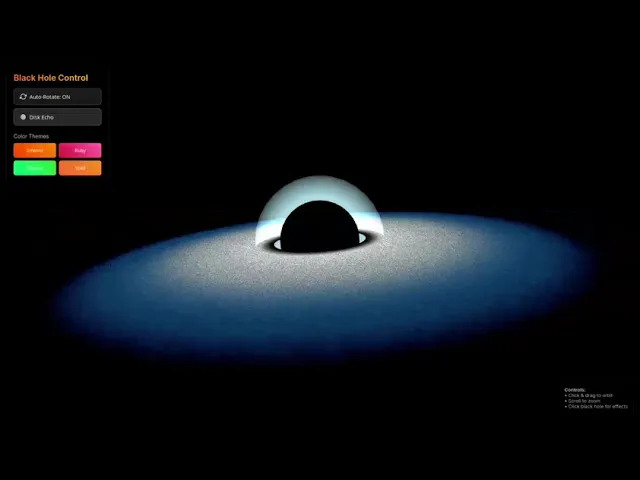

3. Black Hole Simulation

Let's end our test with a quick animation challenge.

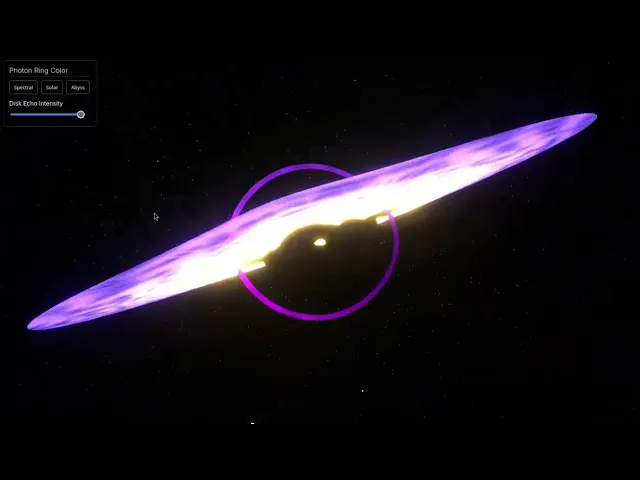

Prompt: Build an interactive 3D black hole visualisation using Three.js/WebGL. It should feature different colours to add to the ring and a button that triggers the 'Disk Echo' effect.

I got this idea from a tweet by Puneet:

He used the earlier Gemini 2.5 Pro model (05-06) with several follow-up prompts to achieve this result. Now, let's see what our models, which are supposedly better, can do in one shot.
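For context on what a one-shot answer has to get right, here are a few pieces of such a visualisation as plain functions. The disk uses a Keplerian (Newtonian) approximation in which angular speed scales as r^(-3/2), so inner material orbits faster. Ring colours are a simple blend between two user-chosen colours. The prompt doesn't define 'Disk Echo', so modelling it as a bright ring that expands from the inner edge and fades is purely my interpretation. All names and parameters are hypothetical.

```typescript
// Hypothetical helpers for an accretion-disk animation (not from any model's output).
type RGB = [number, number, number];

// Keplerian approximation: angular speed falls off as r^(-3/2).
function angularSpeed(r: number, k = 1): number {
  return k * Math.pow(r, -1.5);
}

// Advance a disk particle's angle by omega(r) * dt, wrapped to [0, 2*pi).
function advanceAngle(theta: number, r: number, dt: number): number {
  const twoPi = 2 * Math.PI;
  return (((theta + angularSpeed(r) * dt) % twoPi) + twoPi) % twoPi;
}

// Blend between an inner and an outer ring colour based on radius.
function diskColor(r: number, innerR: number, outerR: number, inner: RGB, outer: RGB): RGB {
  const t = Math.min(1, Math.max(0, (r - innerR) / (outerR - innerR)));
  return [
    inner[0] + (outer[0] - inner[0]) * t,
    inner[1] + (outer[1] - inner[1]) * t,
    inner[2] + (outer[2] - inner[2]) * t,
  ];
}

// 'Disk Echo' (my interpretation): a ring expanding from innerR to outerR over
// `duration` seconds while fading out. Returns null when no echo is visible.
interface Echo { radius: number; opacity: number; }

function echoAt(t: number, innerR: number, outerR: number, duration: number): Echo | null {
  if (t < 0 || t > duration) return null;
  const p = t / duration;
  return { radius: innerR + (outerR - innerR) * p, opacity: 1 - p };
}
```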

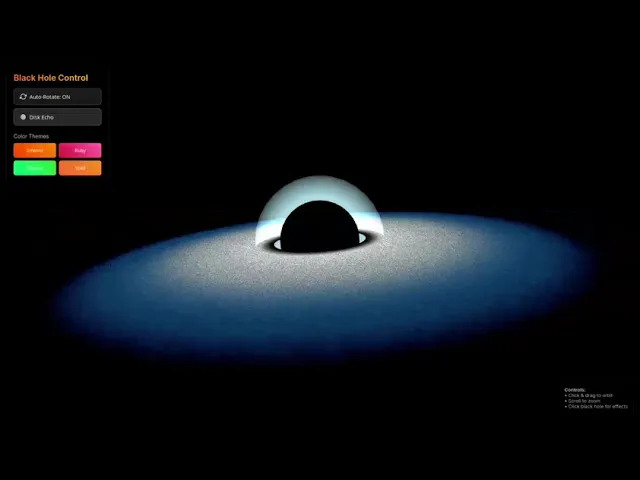

Response from Claude Opus 4

You can find the code it generated here: Link

Here’s the output of the program:

This is okay, and honestly, it's the best you could expect from a model in one go. Perhaps the black hole itself could look a bit better, but this is completely valid and a great response.

It implemented everything correctly, from multiple ring colours to the Disk Echo effect. But when you see a result like the one in the tweet from a slightly less capable model, you expect a bit more, right? 🥴 But this is fine.

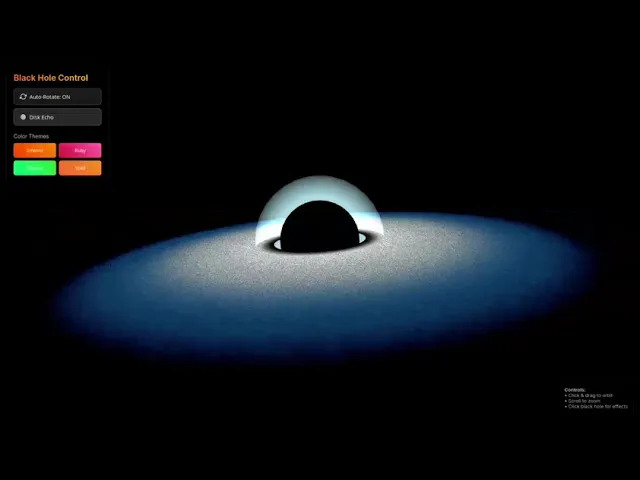

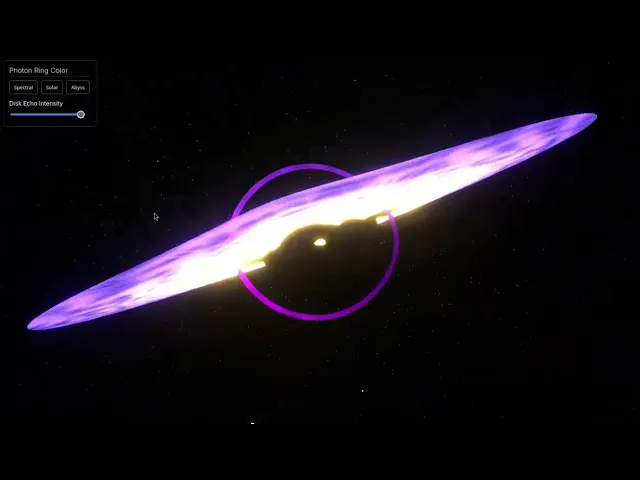

Response from Gemini 2.5 Pro

You can find the code it generated here: Link

Here’s the output of the program:

Honestly, I prefer this result over Claude Opus 4's, mainly because the black hole and its animation look a bit more realistic.

This still doesn't look as good as the one in the tweet, but don't forget it's a one-shot result. Once we iterate on the code and ask for changes, we should get close to that or even better.

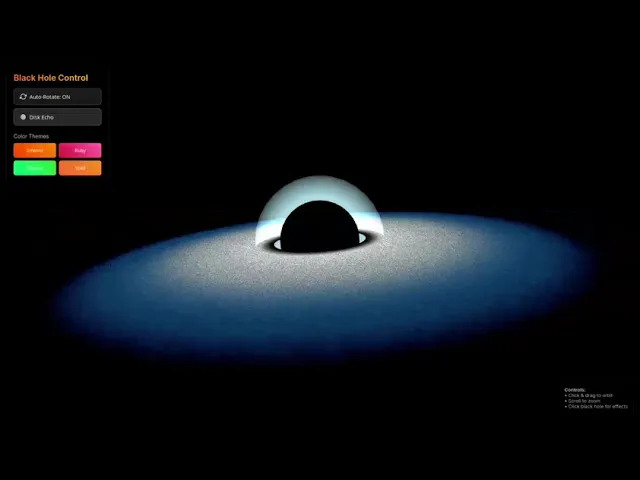

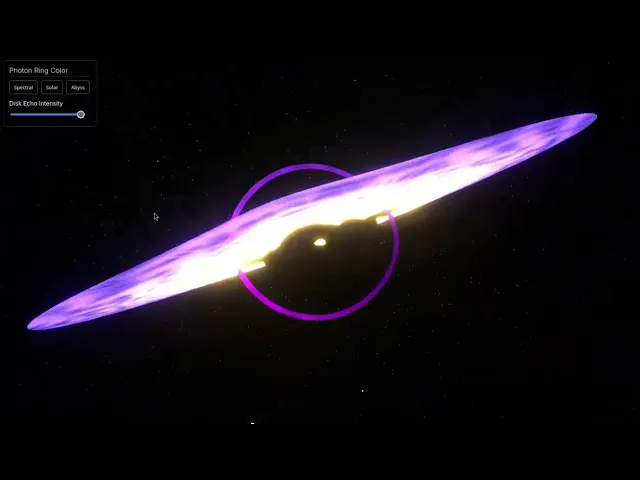

Response from OpenAI o3-Pro

You can find the code it generated here: Link

Here’s the output of the program:

Nah, this is complete dogshit. In what sense does this look like a black hole animation? Yes, this model posts excellent benchmark scores for research and reasoning, but when it comes to coding, it consistently falls short.

And yes, I got this result after it thought for over 5 minutes, the longest any model took in the entire test. This is super disappointing.

Conclusion

Claude Opus 4 is the clear winner across all of our tests. It's a great model, and it shows why it's considered the best model for coding.

To me, Gemini 2.5 Pro is the real find. In most cases, it does a pretty solid job, and its benchmark numbers are remarkably strong for a model at this low price point.

Don't forget, it's about to get a 2M-token context window. Imagine how good this model will be after that update.

o3-Pro was somewhat disappointing, but it isn't primarily a coding model either. You might be better off using it for research, but not for coding.

