Moonshot AI recently announced its new AI model, Kimi K2, an open-source model designed explicitly for agentic tasks. Some consider it an open-source alternative to Claude Sonnet 4. For a breakdown of Kimi K2's technical details, check out our Notes on Kimi K2.

While Claude Sonnet 4 costs $3 per million input tokens and $15 per million output tokens, Kimi K2 is a fraction of that, costing $0.15 per million input tokens and $2.50 per million output tokens. Crazy, right?
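To make the price gap concrete, here is a minimal sketch of a cost estimator using the per-million-token prices quoted above. The `Pricing` type, the `estimateCost` helper, and the 1M/1M token split are hypothetical and only there for illustration; they are not measurements from this test.

```typescript
// Per-million-token prices in USD, as quoted in this article.
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

const CLAUDE_SONNET_4: Pricing = { inputPerMillion: 3, outputPerMillion: 15 };
const KIMI_K2: Pricing = { inputPerMillion: 0.15, outputPerMillion: 2.5 };

// Cost in USD for a given number of input and output tokens.
function estimateCost(pricing: Pricing, inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1e6) * pricing.inputPerMillion +
    (outputTokens / 1e6) * pricing.outputPerMillion
  );
}

// Hypothetical workload for illustration only: 1M input and 1M output tokens.
console.log("Claude Sonnet 4:", estimateCost(CLAUDE_SONNET_4, 1e6, 1e6).toFixed(2));
console.log("Kimi K2:", estimateCost(KIMI_K2, 1e6, 1e6).toFixed(2));
```

The real ratio depends on how a session splits between input and output tokens, since the two models discount them differently.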

Kimi K2 has outperformed almost all coding models in various [benchmarks](https://moonshotai.github.io/Kimi-K2); however, the real test is using it in a real-world scenario.

In this article, we'll explore what Kimi K2 can do and how it compares with Sonnet 4 in raw code output quality, agentic coding, cost, and time. We’ll use both models to build the same app using Claude Code.

TL;DR

If you’ve somewhere else to be, here’s a summary of the test.

For frontend coding with Next.js, both are solid, but I slightly prefer Kimi K2’s responses.

Neither model got the MCP integration working out of the box, but Kimi K2’s implementation was closer to being usable.

For newer libraries and agentic workflows, both struggle. But Kimi K2 looks more promising.

We ran two code-heavy prompts with Claude Sonnet 4, resulting in approximately 300K token consumption and a cost of about $5.

For comparison, Kimi K2 used a similar number of tokens and cost only about $0.53, which is nearly 10 times cheaper.

Kimi K2's output speed is very low, around 34.1 tokens per second, which is significantly slower than Claude Sonnet 4 at around 91.3. In exchange, you get slightly better coding responses, though the difference in code quality is small.
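To get a feel for what those throughput numbers mean in wall-clock time, here is a small sketch. The 10,000-token output size is a hypothetical figure, not a measurement from this test, and the estimate ignores time to first token and tool-call round trips.

```typescript
// Output speeds in tokens per second, as quoted in this article.
const KIMI_K2_TPS = 34.1;
const CLAUDE_SONNET_4_TPS = 91.3;

// Seconds needed to stream `outputTokens` at a steady `tokensPerSecond`.
function secondsToGenerate(outputTokens: number, tokensPerSecond: number): number {
  if (tokensPerSecond <= 0) {
    throw new RangeError("tokensPerSecond must be positive");
  }
  return outputTokens / tokensPerSecond;
}

// Hypothetical response size, for illustration only.
const sampleOutputTokens = 10000;
console.log("Kimi K2 (s):", secondsToGenerate(sampleOutputTokens, KIMI_K2_TPS).toFixed(1));
console.log("Claude Sonnet 4 (s):", secondsToGenerate(sampleOutputTokens, CLAUDE_SONNET_4_TPS).toFixed(1));
```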

Kimi K2 is way cheaper. So, if you’re on a budget, the choice is pretty obvious.

If I had to pick between these two, I'd definitely go with Kimi K2 for coding. However, at times, it feels like waiting an eternity for it to finish.

Now, decide for yourself which one is the better fit for you based on your workflow.

What's Covered?

In this blog post, we'll compare the open-source Kimi K2 and Claude Sonnet 4 in frontend and agentic coding to see which one comes out on top. We'll mainly look at:

Speed of task execution by the models

How good they are at frontend work (we'll test with an end-to-end chat app built using Next.js)

How well they handle recently launched libraries

If that sounds interesting to you, stick around, and you might find out which one is the better fit for you.

How's the test done?

I’ll be using Claude Code as the coding agent for both models. Yes, both. Claude models are well-tuned for Claude Code, so the tests won’t be completely impartial. However, Kimi K2 is the best non-reasoning tool-calling model available, so it should be able to do the job.

For Kimi K2, I used OpenRouter.

Another roadblock was using Kimi K2 with Claude Code, so I had to do a quick hack to integrate the two. We will be using the Claude Code interface with Kimi K2 as the underlying engine.

If you’re curious about how to set that up, check out this guide: Run Kimi K2 Inside Claude Code.

Coding Comparison

Our testing approach includes:

Using both models in Claude Code to build the same app

Building a complete chat application in Next.js with:

Voice support

Image support

Implementing MCP client functionality:

Ability to connect to any MCP server

Testing integration capabilities

Initial Setup

Let's make things a bit easier for these models by setting up a Next.js application and adding all the environment variables before they start coding the implementation.

Initialise a new Next.js application with the following command:

npx create-next-app@latest agentic-chat-composio --typescript --tailwind --eslint --app --use-npm && cd

Next, we'll need Composio's API key because we'll use it to access managed production-grade MCP servers in our chat application.

Go ahead and create an account on Composio, get your API key, and paste it into the .env file in the root of the project.

COMPOSIO_API_KEY=<your_composio_api_key>
OPENAI_API_KEY=<your_openai_api_key>

And that's all the setup we need to do manually. Now we'll leave everything else to these two models.
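Since both keys have to be present before either model's code can run, a quick startup check can save some debugging time. Here is a minimal sketch; the `missingEnvKeys` helper is hypothetical and is not part of Next.js or Composio.

```typescript
// A plain key/value view of the environment (process.env has this shape in Node.js).
type Env = Record<string, string | undefined>;

// The two variables this article puts in the .env file.
const REQUIRED_KEYS = ["COMPOSIO_API_KEY", "OPENAI_API_KEY"] as const;

// Returns the names of required variables that are missing or blank.
function missingEnvKeys(env: Env): string[] {
  return REQUIRED_KEYS.filter((key) => {
    const value = env[key];
    return value === undefined || value.trim() === "";
  });
}
```

In a Node.js process you would call `missingEnvKeys(process.env)` at startup and fail fast if the returned list is not empty.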

Frontend Coding Comparison

💁 Prompt: Build a real-time chat application in this Next.js application using WebSockets. Users should be able to connect and chat directly, without friend requests. Set up a basic backend (which can be API routes or a simple server) to manage WebSocket connections. Also, add voice support. Focus on a clean message flow between the users. Make the UI beautiful and modern using Tailwind CSS and ShadCN components. Keep the code modular and clean. Ensure everything is working.

Kimi K2

This took almost forever to implement. I used the Chutes provider on OpenRouter, and it took over 5 minutes to generate this code. Initially, I tried adding WebSocket support directly in Next.js, but after realizing that Next.js lacks solid support for it, I reverted and ended up implementing a separate Node.js WebSocket server instead.
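For readers unfamiliar with the separate-server approach, the core of such a server is a small relay that tracks connections and forwards each message to everyone else. The sketch below is not the code Kimi K2 generated; `ChatHub` and `Connection` are hypothetical names, and the connection is modeled as any object with a `send(string)` method so the relay logic stays independent of the WebSocket library you wire it to.

```typescript
// Minimal shape of a client connection: anything that can receive a string.
interface Connection {
  send(data: string): void;
}

interface ChatMessage {
  from: string;
  text: string;
  sentAt: number;
}

class ChatHub {
  private connections = new Map<string, Connection>();

  // Register a user's connection (call this when a socket opens).
  join(userId: string, connection: Connection): void {
    this.connections.set(userId, connection);
  }

  // Forget a user's connection (call this when a socket closes).
  leave(userId: string): void {
    this.connections.delete(userId);
  }

  // Relay a message to everyone except the sender; returns the recipient count.
  broadcast(from: string, text: string): number {
    const message: ChatMessage = { from, text, sentAt: Date.now() };
    const payload = JSON.stringify(message);
    let delivered = 0;
    this.connections.forEach((connection, userId) => {
      if (userId !== from) {
        connection.send(payload);
        delivered++;
      }
    });
    return delivered;
  }
}
```

Keeping the relay separate from the transport also makes it easy to unit test without opening real sockets.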

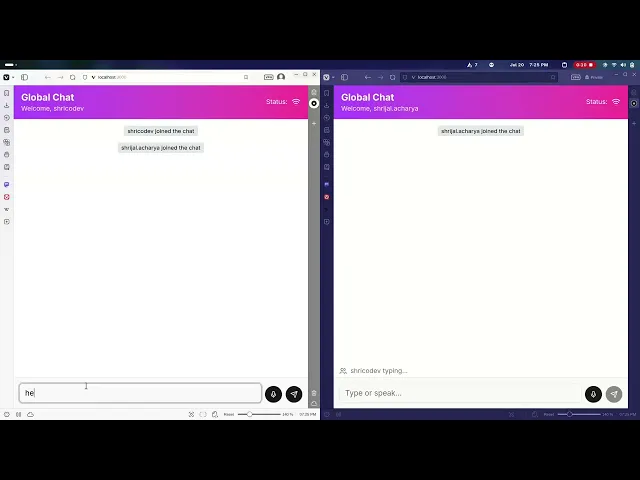

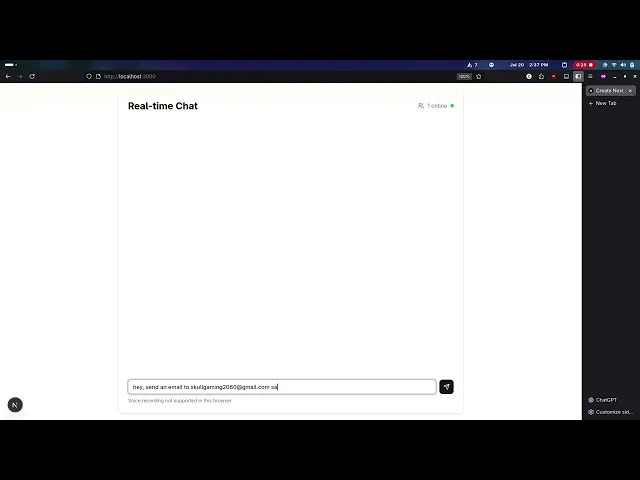

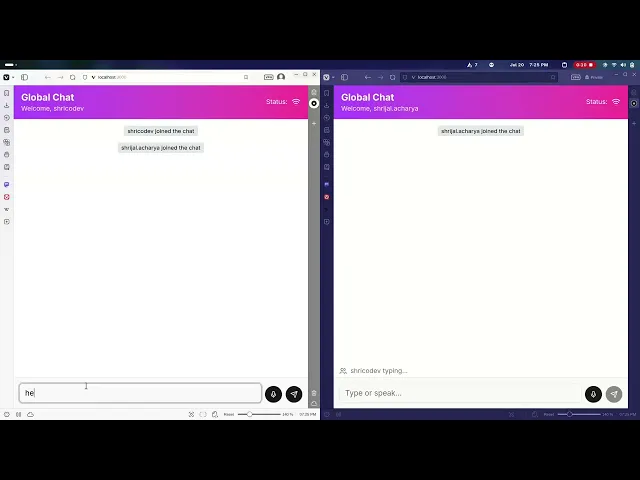

Here's the output of the program:

However, despite all that, it did implement the chat app with voice and WebSocket support (spoiler alert: Claude Sonnet 4 failed to implement voice support completely).

Claude Sonnet 4

This portion of the implementation took no more than 2-3 minutes and was similar to the Kimi K2 implementation; everything works as expected.

Oh, and I forgot to ask it to add voice support, so I asked it to implement voice and optional image support for the chat application.

The first thing that disappointed me was that, despite my requesting both voice and image support, it failed to add image support. I had asked for image support only if it was 100% sure it would work, but it should still have explained why it left the feature out.

I guess it had some issue with following the prompt.

Additionally, I noticed that it added the voice functionality using the Web Speech API, which is not supported in some browsers, such as Firefox. However, the app incorrectly reports the API as unsupported in every browser, including Chrome, which does support it. So, there's a problem with its implementation of the API.
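One plausible cause of this kind of bug, though it has not been verified against the generated code, is checking only the unprefixed `SpeechRecognition` name, while Chrome exposes speech recognition as `webkitSpeechRecognition`. A minimal feature-detection sketch that checks both is shown here; the `SpeechGlobals` type and both helper names are hypothetical.

```typescript
// The parts of the browser's global object that this check inspects.
interface SpeechGlobals {
  SpeechRecognition?: unknown;
  webkitSpeechRecognition?: unknown;
}

// Returns the speech recognition constructor if the browser exposes one, else null.
function getSpeechRecognitionCtor(globals: SpeechGlobals): unknown {
  return globals.SpeechRecognition ?? globals.webkitSpeechRecognition ?? null;
}

// True when either the standard or the webkit-prefixed constructor is present.
function isSpeechRecognitionSupported(globals: SpeechGlobals): boolean {
  return getSpeechRecognitionCtor(globals) !== null;
}
```

In a browser you would pass `window` (with a cast if your TypeScript DOM typings lack these properties) and hide the microphone button only when the check returns false.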

If I compare just the frontend coding, it's good. In fact, it's really good; it understood everything and planned the implementation from a single prompt. However, as we saw above, I'm not sure it's as effective with some specific browser APIs.

Agentic Coding Comparison

The primary purpose of this comparison is to see how well these two AI models can work with recent libraries like Composio's MCP server support.

💁 Prompt: Extend the existing Next.js chat application to support agentic workflows via MCP (Model Context Protocol). Integrate Composio for tool calling and third-party service access. The app should be able to connect to any MCP server and handle tool calls based on user messages. For example, if a user says "send an email to XYZ," it should use the Gmail integration via Composio and pass the correct arguments automatically. Ensure the system handles tool responses gracefully in the chat UI.

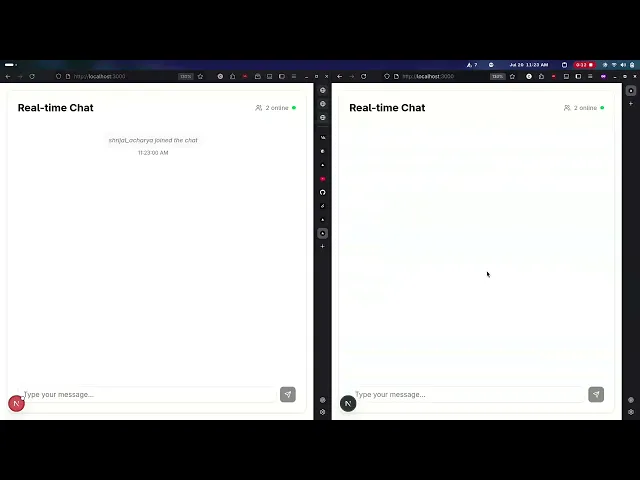

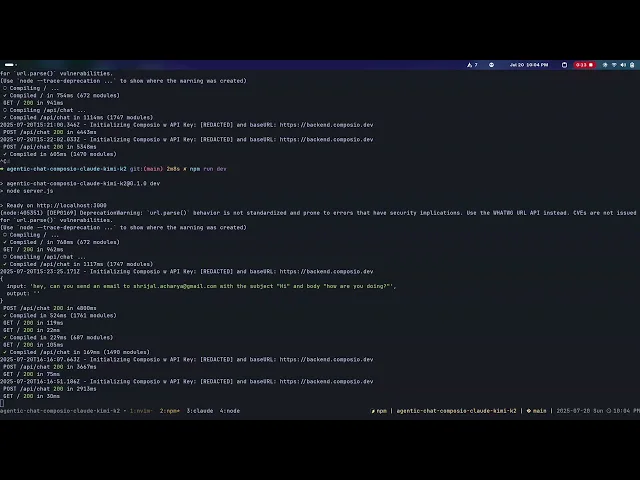

Kimi K2

You can find the entire source code here: Kimi K2 Chat App with MCP Support Gist

To my surprise, it didn't work at all. Looking at the implementation code, it's pretty close, but it does not work. With a few follow-up prompts or some manual edits, it would not be difficult to fix.

Here's the output of the program:

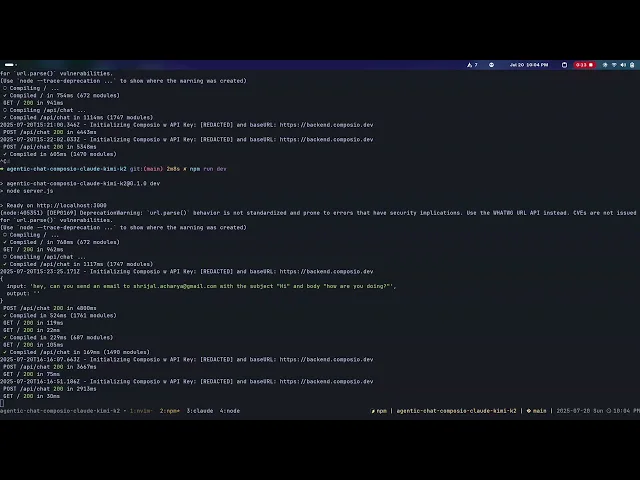

Claude Sonnet 4

You can find the entire source code here: Claude Sonnet 4 Chat App with MCP Support Gist.

You can find the complete chat with Claude Code here: Claude Sonnet 4 Claude Code Raw Response Gist.

Here's the output of the program:

This one took about 10 minutes, and during that time, it kept breaking and fixing things back and forth. The most time-consuming part was fixing TypeScript errors 🤦‍♂️, as you can see in the Gist link I’ve shared above.

Finally, after all that, I got the code, and it still does not work. Worse, it gives a false positive, indicating that tool calls were successful when they were not. Also, it did not use Composio's correct TypeScript SDK, which is @composio/core.
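The false-positive problem is worth a closer look, because it is easy to avoid: the chat UI should only report success when the tool call actually resolved. Here is a minimal sketch of that idea. It does not use Composio's SDK; `runTool`, `ToolResult`, and `toChatMessage` are hypothetical names, and the `call` argument stands in for whatever function actually executes the tool.

```typescript
// A tool call either succeeded with some data or failed with an error message.
type ToolResult =
  | { status: "ok"; data: unknown }
  | { status: "error"; error: string };

// Runs a tool call and reports success only if the call actually resolved.
async function runTool(call: () => Promise<unknown>): Promise<ToolResult> {
  try {
    const data = await call();
    return { status: "ok", data };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { status: "error", error: message };
  }
}

// Turns a tool result into the text shown in the chat UI.
function toChatMessage(toolName: string, result: ToolResult): string {
  if (result.status === "error") {
    return `${toolName} failed: ${result.error}`;
  }
  return `${toolName} succeeded`;
}
```

With this shape, a rejected promise can never be rendered as a success message, which is the failure mode described above.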

Overall, this is not what is expected from this model. If we got a bare minimum working product, that would be better than this.

Summary

In both frontend and agentic coding, Kimi K2 held its ground, slightly outperforming Claude Sonnet 4 in terms of implementation quality and code response accuracy. I used the Chutes provider on OpenRouter to run Kimi K2. While neither model nailed the MCP integration perfectly, Kimi K2's output was a bit cleaner and closer to working code.

It's worth mentioning that all the back and forth from Claude Sonnet 4 racked up around 300K tokens, costing about $5, while Kimi K2’s equivalent output only cost about $0.53. Same token ballpark, vastly cheaper output.

Conclusion

Both models are rock-solid, but not always. When it comes to finding and integrating components into working code using recent libraries and techniques, they still fall short.

However, if I have to compare the code responses from both models, Kimi K2 seems to be slightly better, but this might differ based on the questions. I mean, there's not much difference between these two.

Kimi K2's pricing is so low, and its performance is comparable to, or at times even better than, Claude Sonnet 4's, that I'd recommend you pick Kimi K2 for your coding workflows.

Let me know what you think of these two models in the comments below! ✌️

Moonshot AI recently announced its new AI model, Kimi K2, an open-source model designed explicitly for agentic tasks. Some consider this model an alternative and open-source version of the Claude Sonnet 4 model. For a breakdown of Kimi K2 technical details, check out our Notes on Kimi K2.

While Claude Sonnet 4 comes with $3/M input token and $15/M output token, Kimi K2 is a fraction of that, costing $0.15/M input token and $2.50/M output token. Crazy, right?

Kimi K2 has outperformed almost all coding models in various [benchmarks](https://moonshotai.github.io/Kimi-K2); however, the primary test is ultimately using and testing it in a real-world scenario.

In this article, we'll explore what Kimi K2 can do and how it compares with Sonnet 4 in raw code output quality, agentic coding, cost, and time. We’ll use both models to build the same app using Claude code.

TL;DR

If you’ve somewhere else to be, here’s a summary of the test.

If we compare frontend coding with NextJS, both are solid, but I slightly prefer Kimi K2’s responses.

Neither model got the MCP integration working out of the box, but Kimi K2’s implementation was closer to being usable.

For newer libraries and agentic workflows, both struggle. But Kimi K2 looks more promising.

We ran two code-heavy prompts with Claude Sonnet 4, resulting in approximately 300K token consumption and a cost of about $5.

For comparison, Kimi K2 used a similar number of tokens and cost only about $0.53, which is nearly 10 times cheaper.

Kimi K2 has a very low output of tokens per second, around 34.1, significantly slower than Claude Sonnet 4, which is around 91.3. However, you do receive slightly better coding responses, with little difference in code quality.

Kimi K2 is way cheaper. So, if you’re on a budget, the choice is pretty obvious.

If I had to pick between these two, I'd definitely go with Kimi K2 for coding. However, at times, it feels like waiting an eternity for it to finish.

Now, decide for yourself which one is the better fit for you based on your workflow.

What's Covered?

All in all, in this blog post, we'll compare the open-source Kimi K2 and Claude Sonnet 4 in frontend and agentic coding to see which one comes out on top. We'll mainly look at:

Speed of task execution by the models

How good it is with the frontend (we'll test with an end-to-end chat app built using NextJS)

How well it handles recently launched libraries

If that sounds interesting to you, stick around, and you might find out which one is the better fit for you.

How's the test done?

I’ll be using Claude Code as the coding agent for both models. Yes, both. Claude models are well-tuned for Claude code so that the tests won’t be completely impartial. However, Kimi K2 is an exception, as it is the best non-reasoning tool calling model available, and it should be able to do our job.

For Kimi K2, I used the OpenRouter

Another roadblock was using Kimi K2 with Claude, so I had to do a quick hack to integrate it with Claude Code. We will be using the Claude Code interface with Kimi K2 as the underlying engine.

If you’re curious about how to set that up, check out this guide: Run Kimi K2 Inside Claude Code.

Coding Comparison

Our testing approach includes:

Using both the models in Claude Code to

Building a complete chat application in NextJS with:

Voice support

image support

Implementing MCP client functionality:

Ability to connect to any MCP servers

Testing integration capabilities

Initial Setup

Let's make things a bit easier for these models by setting up a Next.js application and adding all the environment variables before they start coding the implementation.

Initialise a new Next.js application with the following command:

npx create-next-app@latest agentic-chat-composio \\\\ --typescript --tailwind --eslint --app --use-npm && \\\\ cd

Next, we'll need Composio's API key because we'll use it to access managed production-grade MCP servers in our chat application.

Go ahead and create an account on Composio, get your API key, and paste it into the .env file in the root of the project.

COMPOSIO_API_KEY=<your_composio_api_key> OPENAI_API_KEY=<your_openai_api_key>

And that's all the setup we need to do manually. Now we'll leave everything else to these two models.

Frontend Coding Comparison

💁 Prompt: Build a real-time chat application in this Next.js application using WebSockets. Users should be able to connect and chat directly, without friend requests. Set up a basic backend (which can be API routes or a simple server) to manage WebSocket connections. Also, add voice support. Focus on a clean message flow between the users. Make the UI beautiful and modern using Tailwind CSS and ShadCN components. Keep the code modular and clean. Ensure everything is working.

Kimi K2

This took almost forever to implement. I used the Chutes provider on OpenRouter, and it took over 5 minutes to generate this code. Initially, I tried adding WebSocket support directly in Next.js, but after realizing it lacks solid support for it, I reverted and ended up implementing a separate Node.js server for WebSocket instead.

Here's the output of the program:

However, despite all that, it did implement the chat app with voice and WebSocket support (spoiler alert: Claude Sonnet 4 failed to implement voice support completely).

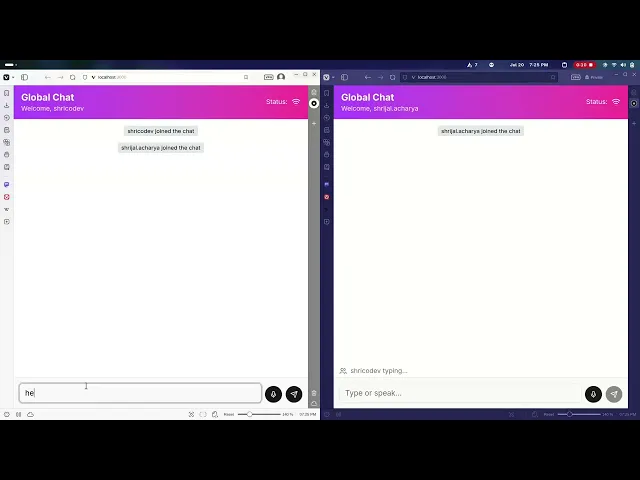

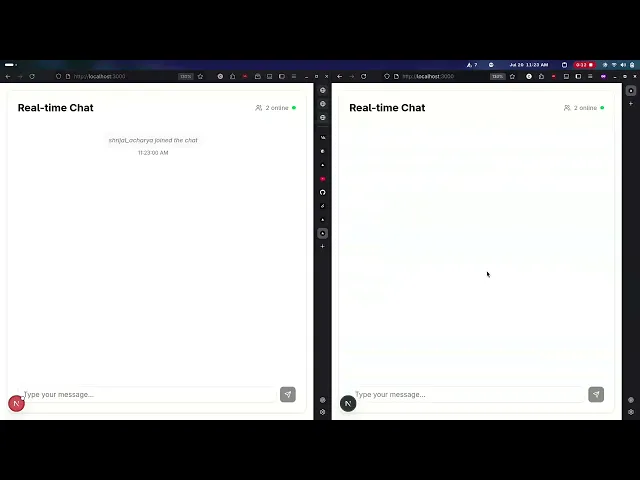

Claude Sonnet 4

This portion of the implementation took less than 2-3 minutes and was similar to the Kimi K2 implementation; everything works as expected.

Oh, and I forgot to ask it to add voice support, so I asked it to implement voice and optional image support for the chat application.

The first thing that disappointed me was that, despite requesting both voice and image support, it failed to add image support. I requested image support only if it was 100% sure it would work, but it still should have explained why it didn't include the image support feature.

I guess it had some issue with following the prompt.

Additionally, I notice that it has added functionality using the Web Speech API, which is not supported on some browsers, such as Firefox. However, it is incorrectly listed as not supported on all browsers, including Chrome, which fully supports it. So, there's a problem with its implementation of the API.

If I compare just the frontend coding, it's good. In fact, it's really good; it understood everything and planned the implementation from a single prompt. However, for some specific browser APIs, I'm not sure if it's as effective as we've already seen above.

Agentic Coding Comparison

The primary purpose of this comparison is to see how well these two AI models can work with recent libraries like Composio's MCP server support.

💁 Prompt: Extend the existing Next.js chat application to support agentic workflows via MCP (Model Context Protocol). Integrate Composio for tool calling and third-party service access. The app should be able to connect to any MCP server and handle tool calls based on user messages. For example, if a user says "send an email to XYZ," it should use the Gmail integration via Composio and pass the correct arguments automatically. Ensure the system handles tool responses gracefully in the chat UI.

Kimi K2

You can find the entire source code here: Kimi K2 Chat App with MCP Support Gist

To my surprise, it didn't work at all. I mean, looking at the implementation code, it's pretty close, but it does not work. If we follow up with a few prompts or manually modify the code ourselves, it's not difficult to fix it.

Here's the output of the program:

Claude Sonnet 4

You can find the entire source code here: Claude Sonnet 4 Chat App with MCP Support Gist.

You can find the complete chat with Claude Code here: Claude Sonnet 4 Claude Code Raw Response Gist.

Here's the output of the program:

On this one, it literally took about 10 minutes, and during that time, it kept breaking and fixing things back and forth. The most time-consuming part was literally fixing TypeScript errors 🤦♂️, as you can see in the Gist link I’ve shared above.

Finally, after all that, I got the code, and it still does not work. Worse, it gives a false positive, indicating that tool calls were successful, but they were not. Also, it did not use the correct Composio's TypeScript SDK, which is @composio/core.

Overall, this is not what is expected from this model. If we got a bare minimum working product, that would be better than this.

Summary

In both frontend and agentic coding, Kimi K2 held its ground, slightly outperforming Claude Sonnet 4 in terms of implementation quality and code response accuracy. I used the Chutes provider on OpenRouter to run Kimi K2. While neither model nailed the MCP integration perfectly, Kimi K2's output was a bit cleaner and closer to working code.

It's worth mentioning that all the back and forth from Claude Sonnet 4 racked up around 300K tokens, costing about $5, while Kimi K2’s equivalent output only cost about $0.53. Same token ballpark, vastly cheaper output.

Conclusion

Both models are rock-solid, but not always. When it comes to finding and integrating components into working code using recent libraries and techniques, they still fall short.

However, if I have to compare the code responses from both models, Kimi K2 seems to be slightly better, but this might differ based on the questions. I mean, there's not much difference between these two.

It's just that the pricing of Kimi K2 is too low and performs comparatively the same, or even better at times, than the Claude Sonnet 4, so I'd recommend you pick Kimi K2 for your coding workflows.

Let me know what you think of these two models in the comments below! ✌️
