With Moonshot AI's recent Kimi K2 release, Alibaba didn't want to fall behind. It released Qwen3-Coder, an agentic coding model available in multiple sizes, the strongest being Qwen3-Coder-480B-A35B-Instruct. Alongside the models, Alibaba also open-sourced Qwen Code CLI, a CLI coding agent forked from Gemini CLI. I have also compared Gemini CLI and Claude Code; you can check that out as well.

So I had the urge to test it against two other recently released models, Kimi K2 and especially Claude Sonnet 4, on some of my coding challenges.

In this article, we'll see what Qwen3 Coder can do and how well it compares with Kimi K2 and Sonnet 4 in coding.

TL;DR

If you want to dive straight into the results and see how the three models compare in coding, here's a summary:

Claude Sonnet 4 consistently gave the most complete and reliable implementations across all tests. It was also the fastest, finishing each task in roughly 3 to 7 minutes.

Kimi K2 is strong at coding and writes great UI code, but it's painfully slow and often gets stuck or produces non-functional code.

Qwen3 Coder surprised me with some solid outputs and is significantly faster than Kimi K2 (used via OpenRouter), although it's not quite at Claude’s level. Its companion agentic CLI, Qwen Code, is another big plus.

For tool-heavy and complex prompts like MCP + Composio, Claude Sonnet 4 is far ahead in both quality and structure. It was the only one that got it right on the first try.

So, if you're optimising for speed and reliability, Claude Sonnet 4 is the clear winner. If you're looking for cost-effective options with solid outputs, Qwen3 Coder and Kimi K2 are great choices, but be prepared for longer wait times. That said, speed depends heavily on the provider; for these tests, I used OpenRouter, which routed requests to the best available providers.

How was the test done?

For this entire test, I'll be using Qwen Code CLI, which is an open-source tool for agentic coding.

It's a fork of Gemini CLI, adapted specifically for agentic coding with Qwen3 Coder.

💁 I'm not sure why they still have the text saying "Gemini CLI." Even if you fork it, at least try changing the name. 🤦♂️

To keep things fair, I'll access all three models through OpenRouter's API inside Qwen Code CLI, instead of using a different CLI coding agent for each model (such as Claude Code for Sonnet 4).

NOTE: All tests were run through OpenRouter, which serves each model from multiple providers, so your timings may differ. The latency and throughput (tokens per second, TPS) of whichever provider OpenRouter picks greatly affect the output timings.
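To see why the provider matters so much, here is a back-of-the-envelope model (my own simplification, not something OpenRouter reports): each agent turn waits for the provider's latency, then streams its output at the provider's tokens-per-second rate. The turn count, token counts, and provider numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One request/response round trip in an agentic session."""
    output_tokens: int


def estimate_wall_time(turns: list[Turn], latency_s: float, tokens_per_s: float) -> float:
    """Rough estimate in seconds: each turn waits for the provider's latency,
    then streams its output at a fixed rate. Ignores tool execution time and
    prompt processing, so real sessions take longer."""
    return sum(latency_s + t.output_tokens / tokens_per_s for t in turns)


# Hypothetical session: 20 turns of ~1,500 output tokens each.
session = [Turn(output_tokens=1_500) for _ in range(20)]
slow = estimate_wall_time(session, latency_s=2.0, tokens_per_s=45.0)  # in the 40-50 tok/s range seen for Kimi K2 here
fast = estimate_wall_time(session, latency_s=1.0, tokens_per_s=90.0)
print(f"slow provider: {slow / 60:.1f} min, fast provider: {fast / 60:.1f} min")
```

With these made-up numbers it prints about 11.8 minutes versus 5.9 minutes: the same work, roughly half the wall time, purely from the provider.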

If you're curious about how to set up LLMs with OpenRouter in Qwen Code, you can simply export these three environment variables:

```bash
export OPENAI_API_KEY="<API_KEY_FROM_OPENROUTER_FOR_MODELS>"
export OPENAI_MODEL="qwen/qwen3-coder" # Use the model of your choice
export OPENAI_BASE_URL="https://openrouter.ai/api/v1"
```
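If you want to sanity-check the key and model slug outside the CLI, the same three variables describe an OpenAI-compatible chat completions request. A minimal standard-library sketch (my own, not part of Qwen Code) that builds the request without sending it; to switch models, you would swap `OPENAI_MODEL` for the Kimi K2 or Sonnet 4 slug listed on OpenRouter's model page.

```python
import json
import os
import urllib.request
from collections.abc import Mapping


def build_chat_request(prompt: str, env: Mapping[str, str] = os.environ) -> urllib.request.Request:
    """Build (but don't send) a chat completion request for the OpenAI-compatible
    endpoint configured by OPENAI_BASE_URL, OPENAI_MODEL, and OPENAI_API_KEY."""
    base_url = env["OPENAI_BASE_URL"].rstrip("/")
    payload = {
        "model": env["OPENAI_MODEL"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # OpenRouter authenticates with a standard Bearer token.
            "Authorization": f"Bearer {env['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To actually send it, pass the request to `urllib.request.urlopen(...)` and read `choices[0].message.content` from the JSON response; that needs a valid OpenRouter key and network access.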


And that's it. Now let's begin the test without further ado.

Coding Comparison

For the coding comparison, I did a total of 3 rounds:

CLI Chat MCP Client: Build a CLI chat MCP client in Python.

Geometry Dash WebApp Simulation: Build a web app version of Geometry Dash.

Typing Test WebApp: Build a Monkeytype-like typing test app.

1. CLI Chat MCP Client

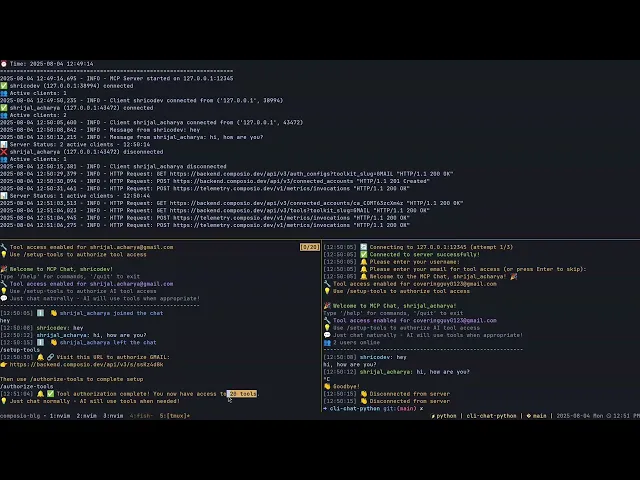

For the first test, I asked the models to build a chat MCP CLI client powered by Composio, which connects to SaaS tools, to see how well they handle new SDKs (we have just released the v3 SDK) and tool calls. Do check it out if you need production-grade app integrations for AI agents.

For this one, I decided to do something crazy. Instead of a regular chat agent built with Next.js, why not build the same thing for the terminal? It should be a chat room where multiple users can connect and chat and, most importantly, use integrations (e.g., Gmail, Slack).

💁 Prompt: Build a CLI chat MCP client in Python. More like a chat room. Integrate Composio for tool calling and third-party service access. It should be able to connect to any MCP server and handle tool calls based on user messages. For example, if a user says "send an email to XYZ," it should use the Gmail integration via Composio and pass the correct arguments automatically. Ensure the system handles tool responses gracefully in the CLI. Follow this quickstart guide: https://docs.composio.dev/docs/quickstart
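Whatever SDK supplies the tools, the model side of this loop usually looks the same in the OpenAI-compatible chat format that OpenRouter exposes: the assistant message carries `tool_calls`, each with a function name and a JSON-encoded `arguments` string, and the client answers each call with a `role: "tool"` message. A minimal sketch, assuming a local stand-in `send_email` function rather than Composio's actual Gmail tool (whose name and argument schema come from Composio's SDK):

```python
import json
from typing import Any, Callable


# Hypothetical local stand-in for a real integration. In the actual client the
# Gmail tool, its name, and its argument schema would come from Composio.
def send_email(recipient: str, subject: str, body: str) -> dict[str, Any]:
    return {"status": "queued", "to": recipient, "subject": subject}


# Registry mapping tool names (as the model sees them) to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {"send_email": send_email}


def run_tool_calls(assistant_message: dict[str, Any]) -> list[dict[str, Any]]:
    """Execute every tool call in an OpenAI-style assistant message and return
    the role="tool" messages to append to the conversation history."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        handler = TOOLS.get(name)
        if handler is None:
            content: Any = {"error": f"unknown tool: {name}"}
        else:
            try:
                # The model sends arguments as a JSON-encoded string.
                args = json.loads(call["function"]["arguments"] or "{}")
                content = handler(**args)
            except (json.JSONDecodeError, TypeError) as exc:
                # Malformed JSON or wrong argument names: report the error back
                # to the model instead of crashing the CLI.
                content = {"error": str(exc)}
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(content)}
        )
    return results


# Example: what "send an email to XYZ" might look like after the model picks the tool.
example_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "send_email",
                "arguments": '{"recipient": "xyz@example.com", "subject": "Hi", "body": "Hello!"}',
            },
        }
    ],
}
print(run_tool_calls(example_message))
```

In a full client, these tool messages go back to the model in the next request so it can summarise the result for the user.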

Response from Qwen 3 Coder:

You can find the code it generated here: Link

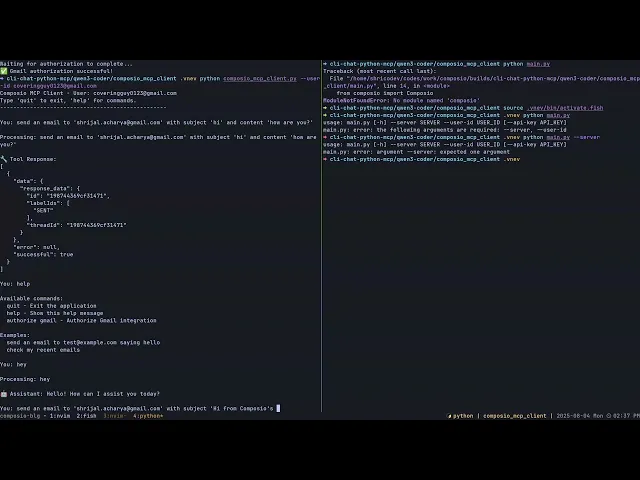

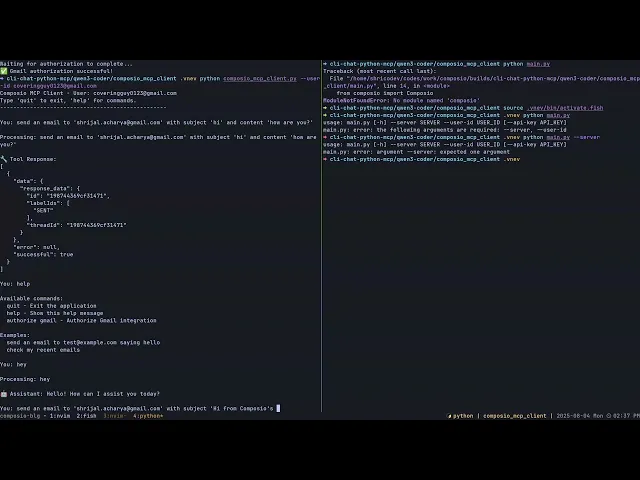

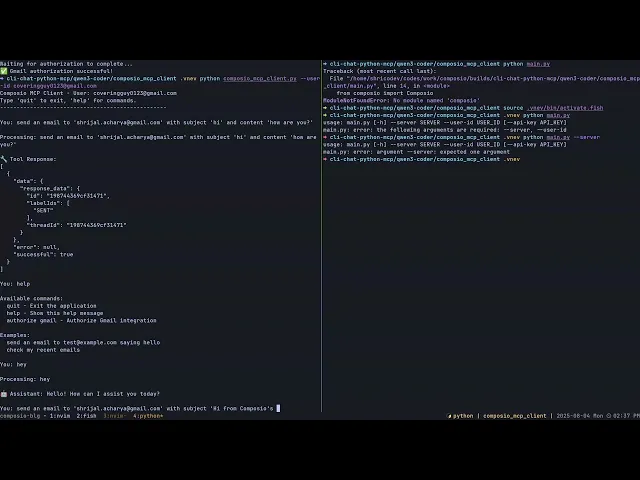

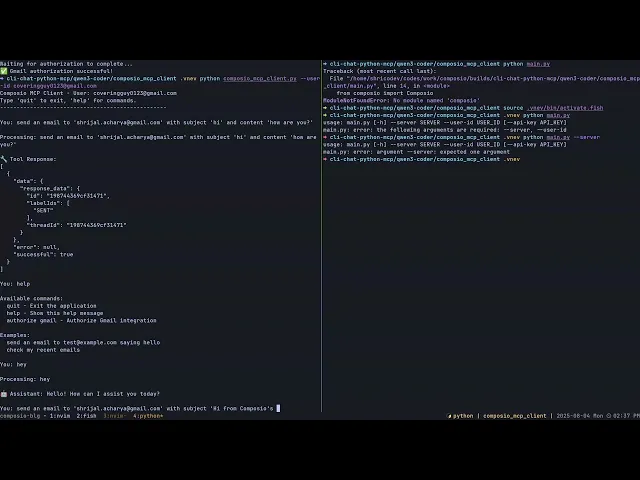

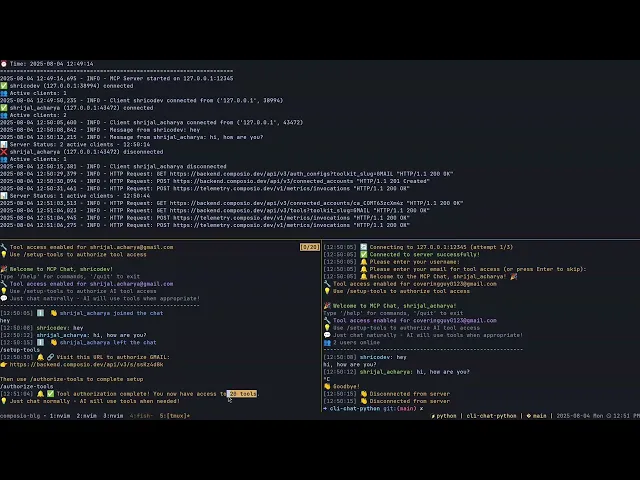

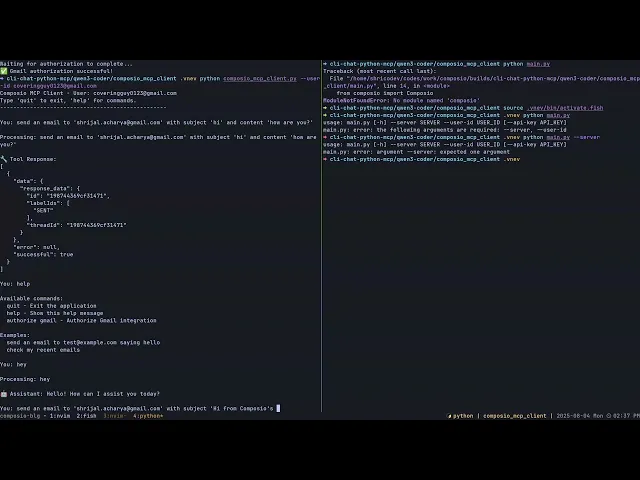

Here’s the output of the program:

The implementation is not bad. I described it as a chat room because I wanted it to use WebSockets, but it only implemented a one-on-one chat. On the plus side, it quickly searched the quickstart guide and figured out how to work with Composio, which is great.

However, the WebSocket logic is completely missing.

It took a little over 9 minutes, including one tool call error. The code and the Composio integration are great, but prompt following is not very good here.
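The "chat room" part of the prompt means many users sharing one conversation, which needs a server that fans each message out to every connected client. A minimal sketch of that fan-out using plain TCP with `asyncio` streams from the standard library; note that this swaps in raw TCP for the WebSockets I had in mind, and it leaves out the LLM and Composio parts entirely.

```python
import asyncio


class ChatRoom:
    """Tiny multi-user chat room: each line a client sends is relayed
    to every other connected client."""

    def __init__(self) -> None:
        self.clients: set[asyncio.StreamWriter] = set()

    async def broadcast(self, line: bytes, sender: asyncio.StreamWriter) -> None:
        for client in list(self.clients):
            if client is sender:
                continue
            try:
                client.write(line)
                await client.drain()
            except ConnectionError:
                # Drop clients whose connection has gone away.
                self.clients.discard(client)

    async def handle(self, reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
        self.clients.add(writer)
        try:
            # readline() returns b"" when the client disconnects.
            while line := await reader.readline():
                await self.broadcast(line, writer)
        except ConnectionError:
            pass  # client vanished mid-read; just clean up below
        finally:
            self.clients.discard(writer)
            writer.close()


async def main(host: str = "127.0.0.1", port: int = 8765) -> None:
    room = ChatRoom()
    server = await asyncio.start_server(room.handle, host, port)
    async with server:
        await server.serve_forever()
```

Calling `asyncio.run(main())` starts the server; connecting from two terminals with `nc 127.0.0.1 8765` should let each see the other's messages.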

Response from Kimi K2:

You can find the code it generated here: Link

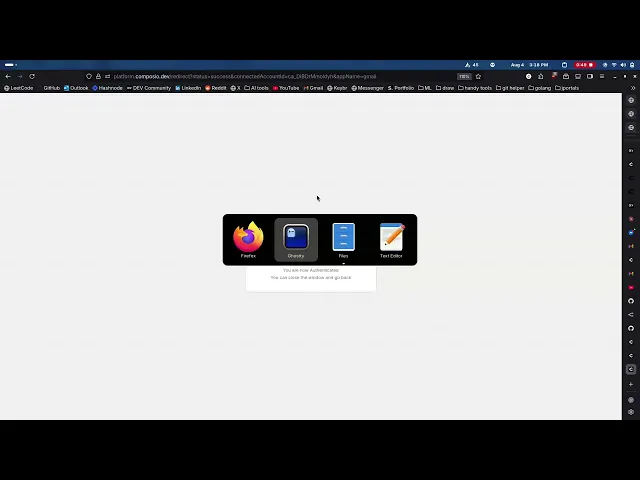

Here’s the output of the program:

I love that it uses nice CLI packages like Click to implement the chat functionality and the slash commands. However, it doesn't work at all. I can authenticate with Composio, but it still doesn't authenticate the toolkit (which, in my case, is Gmail).

Overall, the code looks good, but it lacks modularity: all the logic is in a single file.

It took about 22 minutes, making it the slowest of the three models on this task. Unfortunately, even after all that, the implementation didn't work.
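Slash commands in a chat CLI boil down to routing lines that start with `/` to handlers and passing everything else on to the chat. A minimal standard-library sketch of that routing (not Kimi K2's Click-based code), with hypothetical `/help` and `/nick` commands:

```python
from typing import Callable

Handler = Callable[[list[str]], str]

# Hypothetical commands for illustration; not taken from either generated client.
COMMANDS: dict[str, Handler] = {}


def command(name: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler under '/<name>'."""
    def register(fn: Handler) -> Handler:
        COMMANDS[name] = fn
        return fn
    return register


@command("help")
def show_help(args: list[str]) -> str:
    return "commands: " + ", ".join(f"/{name}" for name in sorted(COMMANDS))


@command("nick")
def set_nick(args: list[str]) -> str:
    return f"nickname set to {args[0]}" if args else "usage: /nick <name>"


def route(line: str) -> tuple[bool, str]:
    """Return (is_command, reply). Anything not starting with '/' is chat
    text and is passed through untouched for the LLM/tool-calling path."""
    if not line.startswith("/"):
        return False, line
    # A bare "/" splits to [], so fall back to an empty command name.
    name, *args = line[1:].split() or [""]
    handler = COMMANDS.get(name)
    if handler is None:
        return True, f"unknown command: /{name} (try /help)"
    return True, handler(args)
```

Keeping each command in its own small function is also one easy way to avoid the everything-in-one-file problem mentioned above.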

Response from Claude Sonnet 4:

You can find the code it generated here: Link

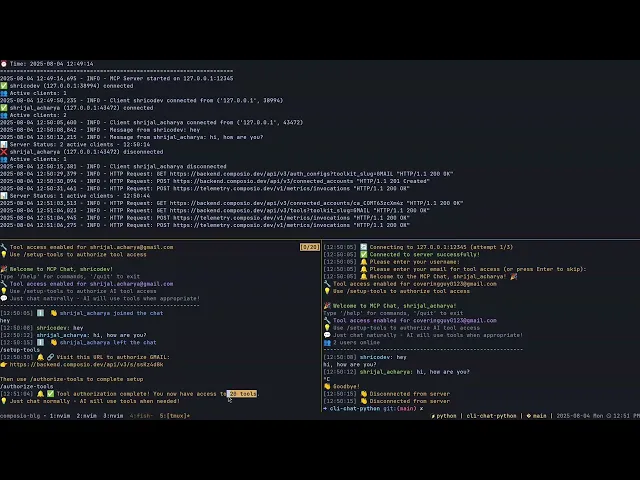

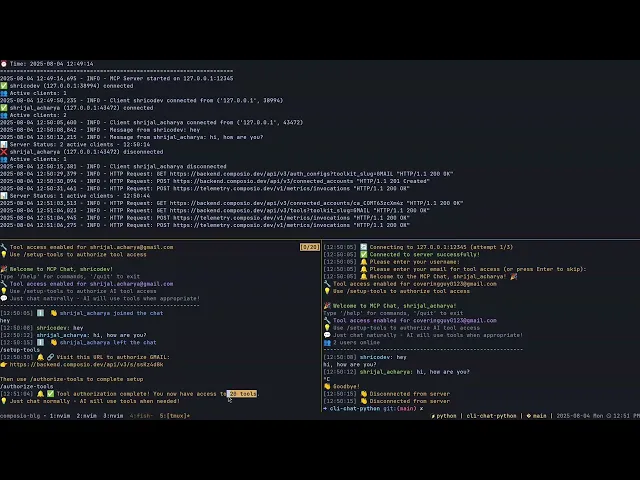

Here’s the output of the program:

By far the best implementation for this question. See how beautiful the implementation is? I started doubting my programming skills. 🙃

It added slash commands, logging, and a few other features that I hadn't even considered. It's just too good, and to be honest, other than some type errors, there's no problem I can see in the code. It's production-ready, all in one shot.

It was fast, too, taking only about five minutes. Overall, a rock-solid implementation, no doubt!

2. Geometry Dash

Let's continue with a relatively simple test: building a Geometry Dash-like web app.

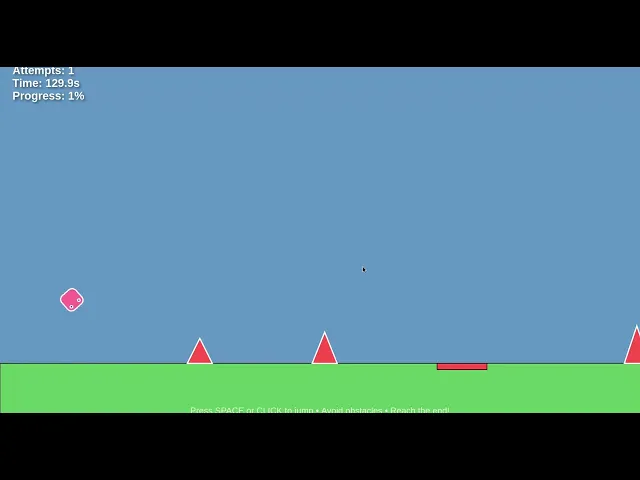

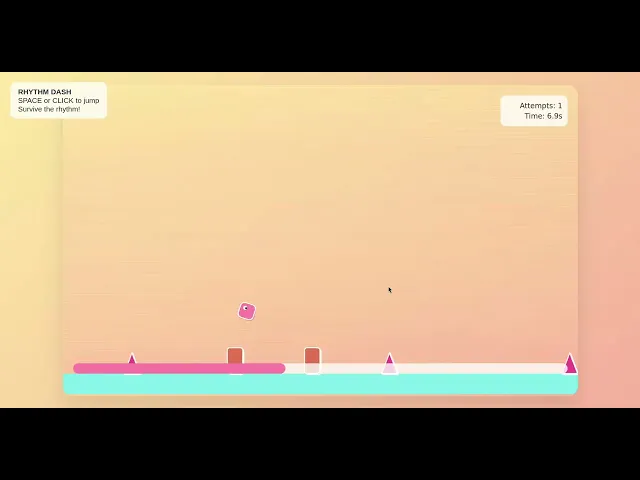

Response from Qwen 3 Coder:

You can find the code it generated here: Link

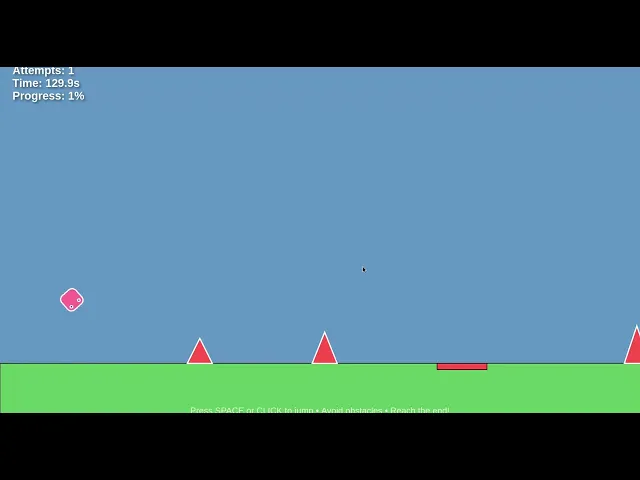

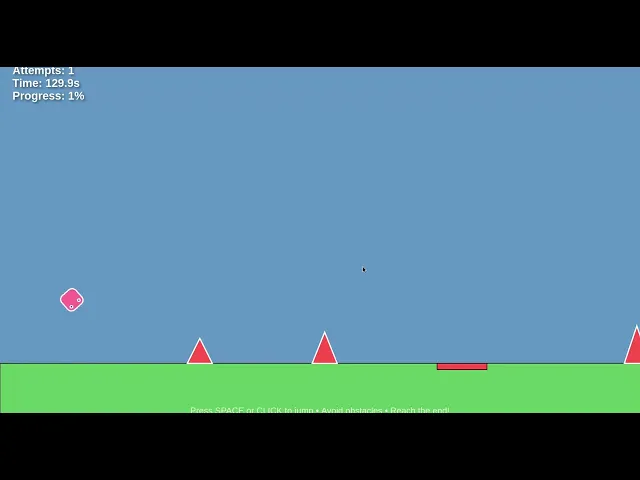

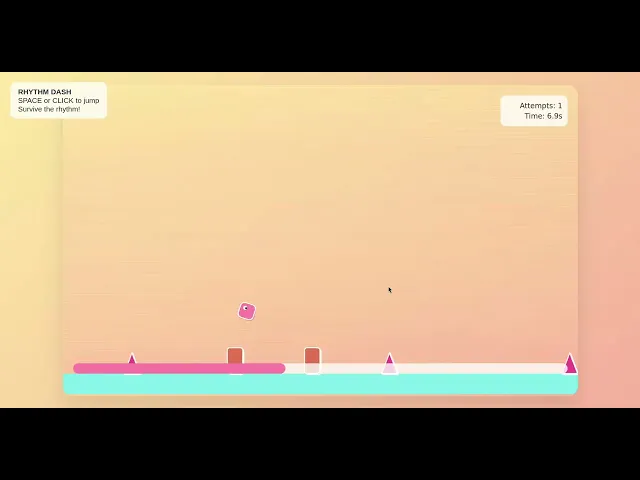

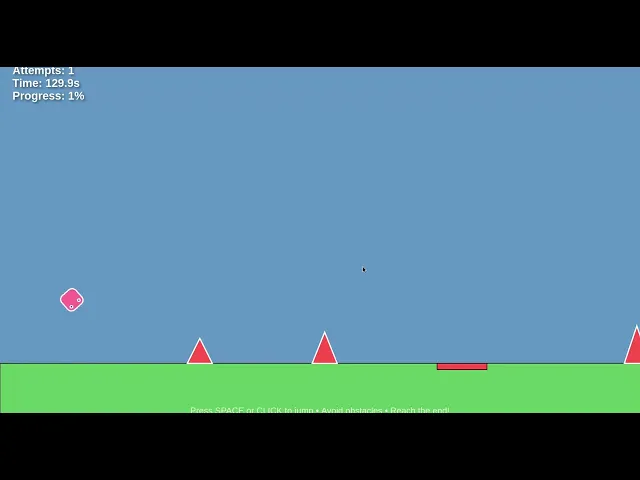

Here’s the output of the program:

This one is perfectly implemented and follows the prompt exactly. The score works well, and the game gets progressively harder, which is awesome.

I also love the fact that it added a nice UI touch with the stars.

It took over 13 minutes to get a working game. It ran into some tool call problems along the way, and the input token usage surprised me: about 450K tokens for this one prompt. Crazy.

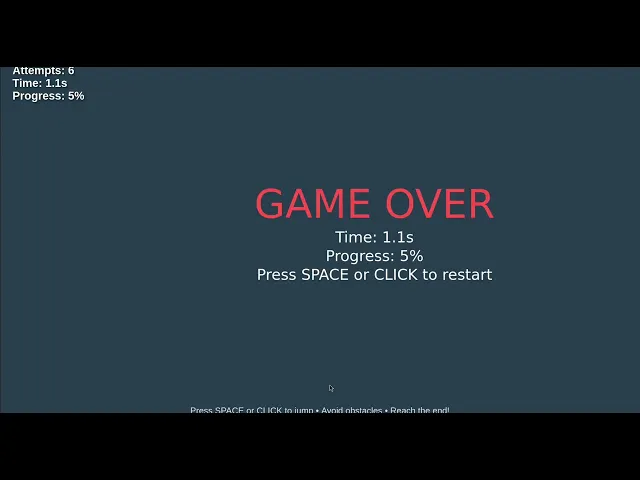
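I didn't dig into the request logs, so this is an assumption, but a likely contributor to input counts this large is how agentic CLIs use chat-completion APIs: the API is stateless, so every turn resends the entire conversation, including file contents and tool output, and the billed input grows roughly quadratically with the number of turns. A quick sketch of that arithmetic with made-up numbers:

```python
def cumulative_input_tokens(initial_context: int, added_per_turn: list[int]) -> int:
    """Total input tokens across an agent session where every request resends
    the whole conversation so far (system prompt, file contents, tool output)."""
    total = 0
    context = initial_context
    for added in added_per_turn:
        total += context   # this request carries everything accumulated so far
        context += added   # the reply and any tool results join the history
    return total


# Made-up numbers: an 8K-token starting context and 25 turns that each add ~600 tokens.
print(cumulative_input_tokens(8_000, [600] * 25))  # 380000
```

Even this modest hypothetical session lands in the hundreds of thousands of input tokens, and failed tool calls that need retries only add more turns.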

Response from Kimi K2:

You can find the code it generated here: Link

Here's what it produced on the first try:

The player movement is buggy: the player doesn't move forward at all, so jumping is the only thing you can do.

So, I sent a quick follow-up prompt asking it to fix this.

It turns out that it forgot to move the player position forward, which was successfully fixed in the second iteration.

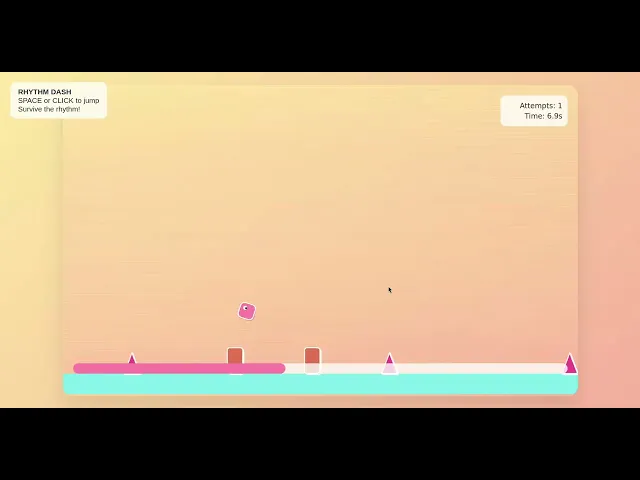

Here’s the final output of the program:

It took about 26 minutes (wall time) to complete. Even with the fix in place, I prefer Qwen 3 Coder's implementation and UI, though that may just be personal preference.
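Moving the player forward every frame, the step Kimi K2's first attempt forgot, is the core of an auto-runner's update loop. A minimal sketch of that step, plus a speed ramp for the "progressively harder" behaviour Qwen 3 Coder got right; it is written in Python for readability rather than the JavaScript a web version would use, and all constants and names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical tuning values, in pixels and seconds (screen y grows downward).
GRAVITY = 2500.0
JUMP_VELOCITY = -800.0
GROUND_Y = 0.0


@dataclass
class Player:
    x: float = 0.0
    y: float = GROUND_Y
    vy: float = 0.0
    on_ground: bool = True


def speed_for_score(score: int, base: float = 300.0, step: float = 15.0, cap: float = 700.0) -> float:
    """Progressive difficulty: +step px/s for every 10 points, up to a cap."""
    return min(base + step * (score // 10), cap)


def update(p: Player, dt: float, speed: float, jump_pressed: bool) -> None:
    """Advance the player by one frame of dt seconds."""
    # Constant forward motion: the step that was missing in the first attempt.
    p.x += speed * dt
    if jump_pressed and p.on_ground:
        p.vy = JUMP_VELOCITY
        p.on_ground = False
    p.vy += GRAVITY * dt
    p.y += p.vy * dt
    if p.y >= GROUND_Y:  # landed (or never left the ground)
        p.y, p.vy, p.on_ground = GROUND_Y, 0.0, True


# One second at 60 FPS with a score of 25, jumping on the first frame.
player = Player()
for frame in range(60):
    update(player, 1 / 60, speed_for_score(25), jump_pressed=(frame == 0))
print(round(player.x, 1), player.on_ground)
```

Without the `p.x += speed * dt` line, the player can still jump but never advances, which matches the bug described above.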

Response from Claude Sonnet 4:

You can find the code it generated here: Link

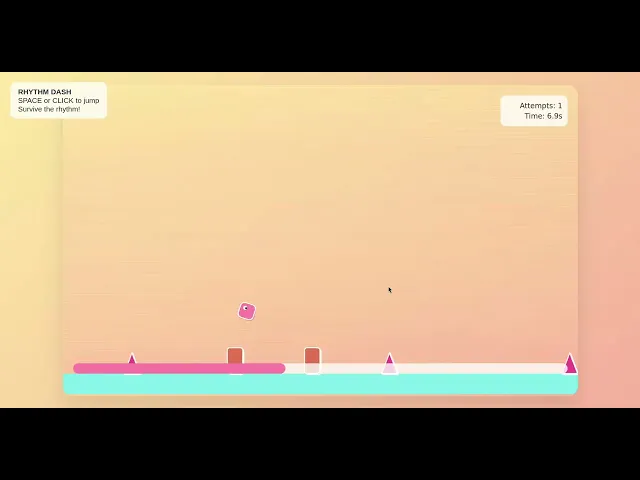

Here’s the output of the program:

At first glance, it clearly looks better and is a stronger implementation than Kimi K2's. However, it's not as good as I expected from Sonnet 4.

The UI looks pretty weird in places, and the timer keeps running even after the player crosses the finish line.

Sonnet 4 was the fastest of the three models, taking only about 3 minutes, a fraction of the time Qwen 3 Coder and Kimi K2 needed. Its token usage was also much lower than that of the other two models.
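A common fix for a timer that keeps running past the finish line is to record the finish time once and have the display read from it. A minimal sketch; the class name, `on_frame`, and the injectable clock (used so the logic can be tested without waiting) are my own assumptions, not Sonnet's code.

```python
import time
from typing import Callable, Optional


class RunTimer:
    """Elapsed-time display that freezes once the finish line is crossed."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._start = clock()
        self._stopped_at: Optional[float] = None

    def finish(self) -> None:
        if self._stopped_at is None:  # ignore repeated finish events
            self._stopped_at = self._clock()

    @property
    def elapsed(self) -> float:
        end = self._stopped_at if self._stopped_at is not None else self._clock()
        return end - self._start


def on_frame(timer: RunTimer, player_x: float, finish_x: float) -> None:
    """Called once per frame; stops the timer when the player reaches the finish."""
    if player_x >= finish_x:
        timer.finish()
```

Because `finish()` only records the first crossing, later frames past the line cannot restart or extend the time.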

3. Typing Test WebApp

Finally, let's wrap up with a fairly simple test where I ask the models to build a Monkeytype-like typing test app.

Prompt: Build a modern, highly responsive typing game inspired by Monkeytype, with a clean and elegant UI based on the Catppuccin color themes (choose one or allow toggling between Latte, Frappe, Macchiato, Mocha). The typing area should feature randomized or curated famous texts (quotes, speeches, literature) displayed in the center of the screen.

**Key Requirements:**

- The user must type the exact characters, including uppercase and lowercase, punctuation, and spaces.
- As the user types correctly, a fire or flame animation should appear under each typed character (like a typing trail).
- Mis-typed characters should be clearly marked with a subtle but visible indicator (e.g., red underline or animated shake).
- A minimalist virtual keyboard should be shown at the bottom center, softly glowing when keys are pressed.
- Include features such as WPM, accuracy, time, and combo streak counter.
- Once the user finishes typing the passage, show a summary screen with statistics and an animated celebration (like fireworks or confetti).

**Design Aesthetic:**

- Soft but expressive, using the **Catppuccin**
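The WPM, accuracy, and combo-streak stats this prompt asks for boil down to a few counters. A minimal sketch using the common convention of five characters per "word"; Monkeytype's exact formulas may differ, and the class and method names are my own.

```python
from dataclasses import dataclass


@dataclass
class TypingStats:
    correct: int = 0
    incorrect: int = 0
    streak: int = 0
    best_streak: int = 0

    def record(self, typed: str, expected: str) -> None:
        """Register one keystroke against the expected character (case-sensitive)."""
        if typed == expected:
            self.correct += 1
            self.streak += 1
            self.best_streak = max(self.best_streak, self.streak)
        else:
            self.incorrect += 1
            self.streak = 0  # a miss breaks the combo

    def wpm(self, elapsed_s: float) -> float:
        """Net WPM: correct characters / 5 per minute of typing."""
        if elapsed_s <= 0:
            return 0.0
        return (self.correct / 5) / (elapsed_s / 60)

    def accuracy(self) -> float:
        """Percentage of keystrokes that matched the expected character."""
        total = self.correct + self.incorrect
        return 100.0 * self.correct / total if total else 100.0


stats = TypingStats()
for typed, expected in zip("Hellp World", "Hello World"):
    stats.record(typed, expected)
print(stats.correct, stats.incorrect, stats.best_streak, round(stats.accuracy(), 1))
```

Here one typo ("p" for "o") resets the streak, so the best streak is the six correct characters that follow it.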


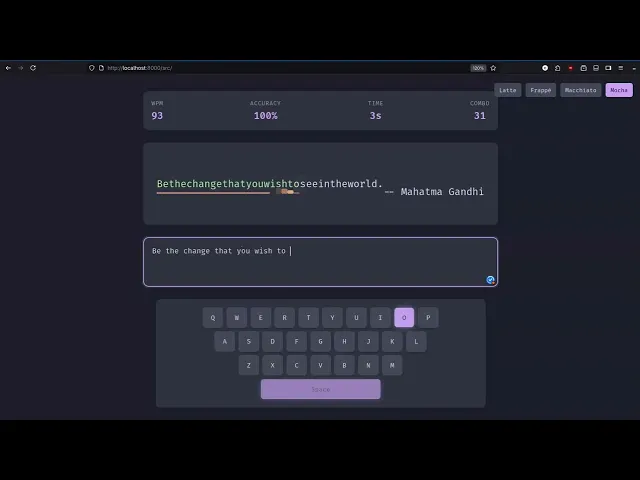

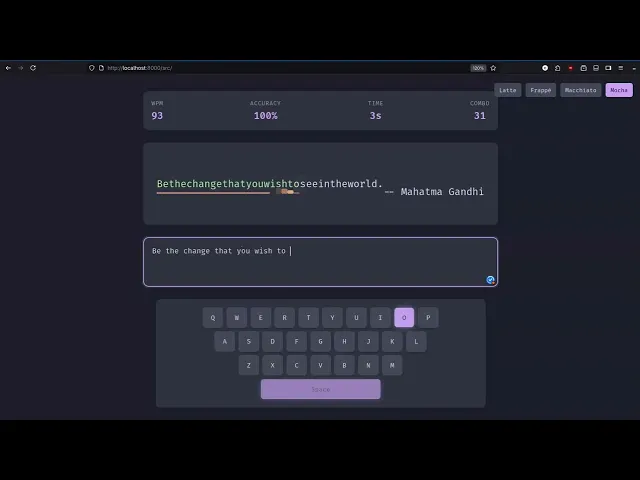

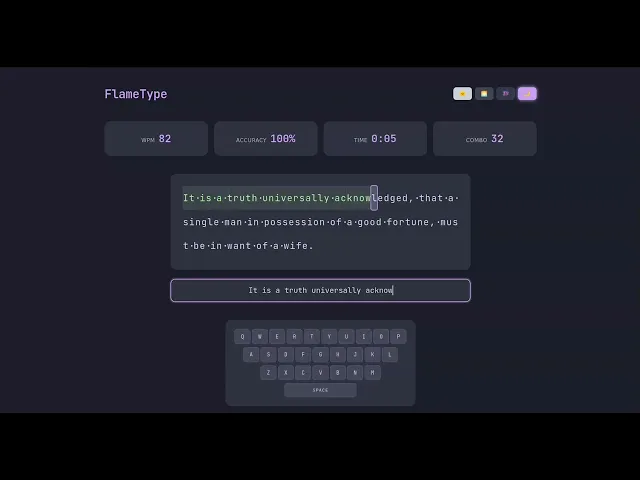

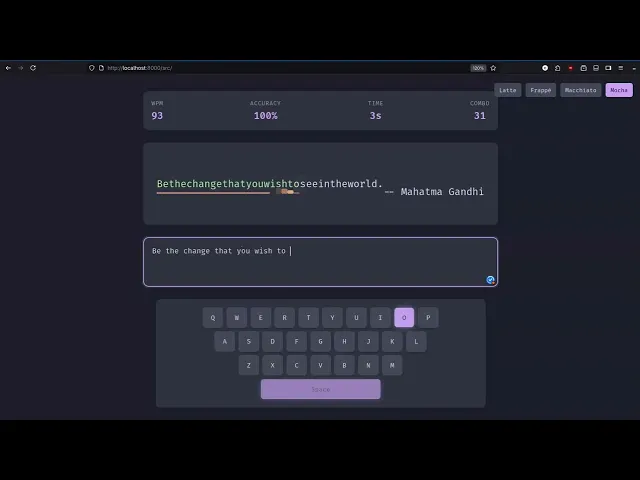

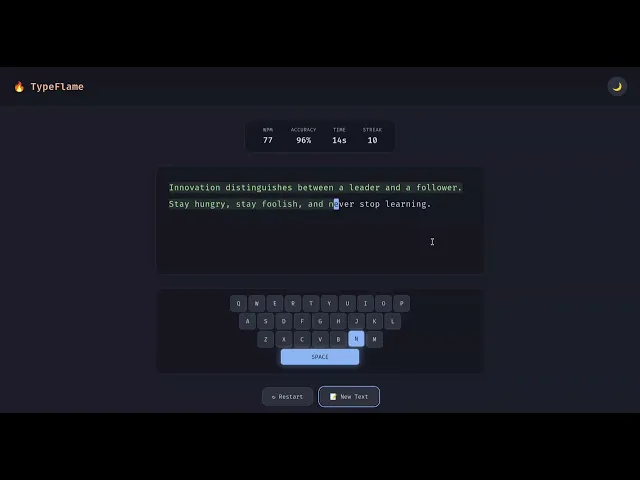

Response from Qwen 3 Coder:

You can find the code it generated here: Link

Here’s the output of the program:

It works great. The theme switcher, which allows switching between multiple Catppuccin themes, works well, and all calculations are implemented correctly.

The only problem I can see is the way quotes are displayed on the screen. Other than that, there's not much I can say.

It took about 15 minutes in total to generate this code. The tool calls worked well, and the whole implementation is solid. Only the time might put some of you off; otherwise, no complaints here.

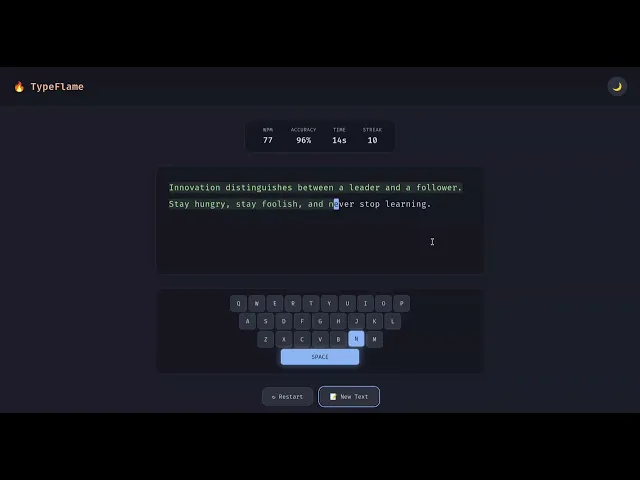

Response from Kimi K2:

You can find the code it generated here: Link

Here’s the output of the program:

The implementation is very similar to Qwen 3 Coder's, arguably even a bit better, thanks to the overall UI and the typing-trail animations.

The theme switcher and all the logic work great. The only issue I've noticed with the implementation is that the end screen is completely black, which appears to be an error with the pop-up implementation.

This one took even longer than Qwen 3 Coder: over 28 minutes to reach a working product. That's painful, but the model's output speed was only around 40-50 tokens/sec, which is a big part of the reason.

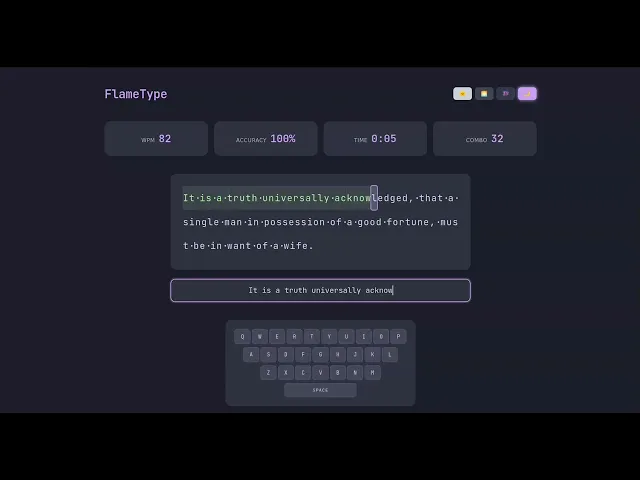

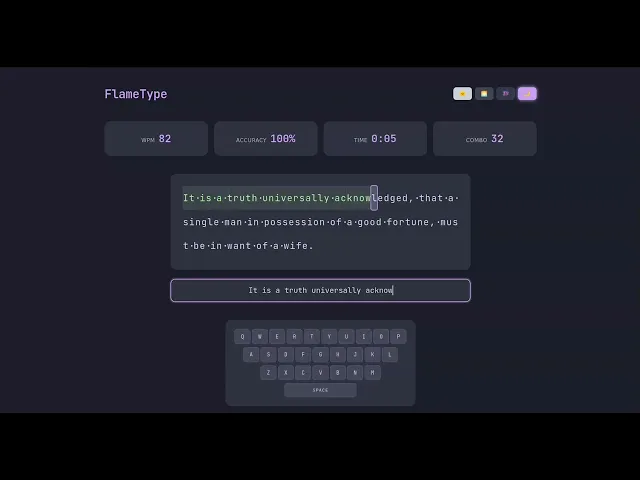

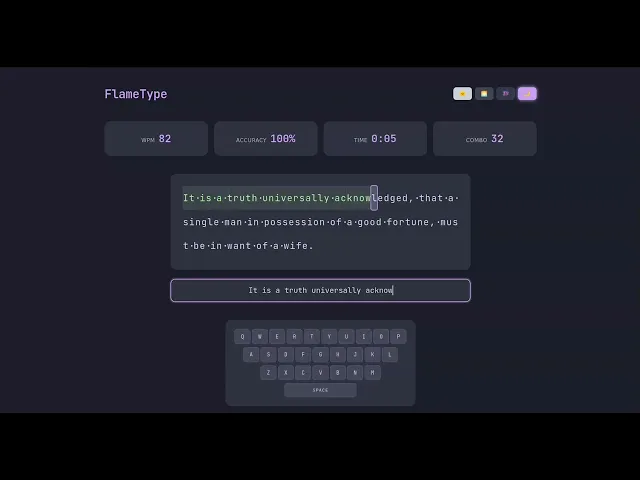

Response from Claude Sonnet 4:

You can find the code it generated here: Link

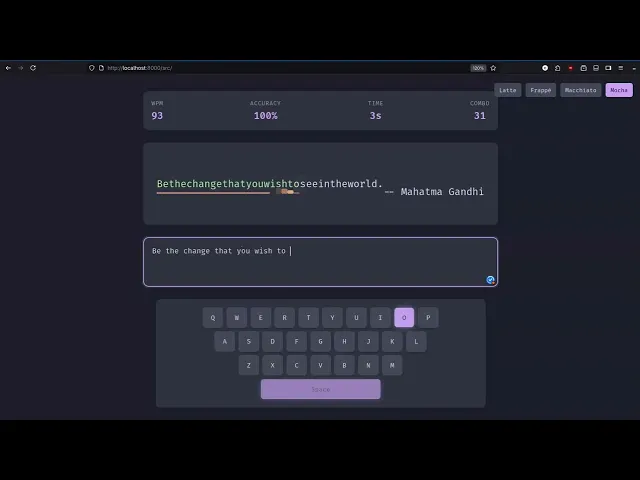

Here’s the output of the program:

This is the best overall implementation of the three. It uses Catppuccin colors, but you can't switch between all four Catppuccin flavors; it only offers dark and light modes.

It took just over 7 minutes. Claude Sonnet is generally fast in terms of output token speed and relatively efficient in token usage, which is a great plus.
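Supporting all four flavors instead of a light/dark pair mostly comes down to keeping one palette per flavor and cycling through them. A sketch that generates CSS custom properties from Python, using a handful of colors from the Catppuccin palette (worth double-checking against the official palette before relying on them); the `--ctp-*` variable names and the `data-theme` attribute are assumptions, not what Sonnet generated.

```python
# A few colors per Catppuccin flavor: background, text, and red for mistyped characters.
FLAVORS: dict[str, dict[str, str]] = {
    "latte":     {"base": "#eff1f5", "text": "#4c4f69", "red": "#d20f39"},
    "frappe":    {"base": "#303446", "text": "#c6d0f5", "red": "#e78284"},
    "macchiato": {"base": "#24273a", "text": "#cad3f5", "red": "#ed8796"},
    "mocha":     {"base": "#1e1e2e", "text": "#cdd6f4", "red": "#f38ba8"},
}
ORDER = list(FLAVORS)  # dicts keep insertion order: latte, frappe, macchiato, mocha


def next_flavor(current: str) -> str:
    """Cycle latte -> frappe -> macchiato -> mocha -> latte."""
    return ORDER[(ORDER.index(current) + 1) % len(ORDER)]


def css_variables(flavor: str) -> str:
    """Render one flavor as CSS custom properties scoped to a [data-theme] selector."""
    body = "\n".join(f"  --ctp-{name}: {value};" for name, value in FLAVORS[flavor].items())
    return f'[data-theme="{flavor}"] {{\n{body}\n}}'


print(css_variables(next_flavor("macchiato")))
```

The page would then only need to flip its `data-theme` attribute to the value returned by `next_flavor` when the user clicks the theme toggle.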

Conclusion

All three models are pretty capable and perform decently in coding. The entire test didn't cost much, about $4-5, with Claude Sonnet 4 taking most of it.

I also ran the last test with Grok 4, but it didn't get it right. Judging by overall performance, code quality, token usage, and everything else, I'd go for Claude Sonnet 4. Its approach to coding is simply on another level.

The other two, Kimi K2 and Qwen 3 Coder, are fairly similar to each other in code quality, but both were a lot slower than Claude Sonnet 4. They also cost a fraction of Sonnet 4's price while producing relatively decent code, so if you're on a budget or have massive token requirements, either is a good pick, though Qwen 3 Coder feels slightly better in terms of speed.

It's up to you to choose which model to use, but if budget is not an issue, I suggest going with Claude Sonnet 4. Sonnet still dominates the recent models when it comes to coding.

