I vibe-coded the AI super agent app Genspark in a weekend


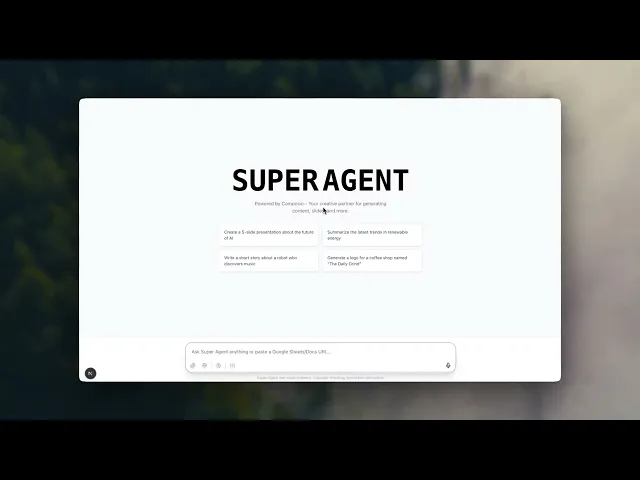

General-purpose AI agents like Manus and GenSpark have caught everyone’s attention, and VCs are pouring money into them. You can find many in the YC cohorts. These agents are really cool and provide access to a wide range of external tools we use every day, such as spreadsheets, documents, and PowerPoint slides.

I received a text to build this kind of Agent within 24 hours for a demo. Let’s vibe code this shit. Here’s how I went about it. I opened my Cursor instance and set up the repo. My weapon of choice was Claude 4 Sonnet (thinking) in agent mode.

Vibe Coding Setup

I had to choose between Claude Code and Cursor IDE. For something more open-ended, I’d use Claude Code to let the model explore and build, but due to time constraints, I needed more control, so I went with Cursor Agent. I decided to build a web app with Next.js and use the AI SDK, since it makes working with agents and LLMs easy.

LangGraph, by comparison, would have been significantly more complex, and I’d have had to define the workflows myself, which isn’t necessary for open-ended tasks. Instead of the Gemini 2.5 Pro + GPT-4.1 approach I used last time, I went all guns blazing with Claude 4 Sonnet (thinking), hoping the model would be able to handle most of the development without me managing every aspect.

For the agent tools, I chose Composio because it handles authentication with Google Suite apps and exposes their APIs as actions for the agent, so I didn’t have to read through Google’s API documentation and plumb everything together myself.

What to avoid

The worst mistake you can make while vibe coding is making open-ended requests. I made the dumb mistake of handing Claude the documentation and asking it to build based on that. The code it wrote was a disaster. Worst of all, Claude tends to use a lot of dummy variables. I rejected all of its changes.

By that point I had already set up the Next.js project and installed the necessary packages, mainly the AI SDK and Composio. So I asked myself a narrower question: which core abilities from GenSpark did I want to replicate? Its ability to read and edit sheets, documents, and presentations.

Getting back on course

I didn’t expect how easy it would be to embed Google Sheets/Docs as iframes in a sidebar. I expected a drawn-out process, but it was straightforward. The catch is that I can’t offer this to my users without implementing authentication for each user’s Google account.

I used Composio for easy authentication with Sheets and Docs. Once signed in, the agent can access the user’s files. After handling authentication, the challenging part was enabling the agent to create presentations. There’s no native tool that lets you do it, and I did not want to explore the Google Slides API. I referred to GenSpark and noticed it wrote HTML code.

You can lay out a presentation in HTML and let the user download it as a .pptx file. I set up an endpoint for generating presentations: the HTML layout is static, and the LLM creates the slide content dynamically. The idea was to use this as a tool for my AI agent. I also had to let the user download the presentation, which I did with the PptxGenJS library. Here’s the final flow:

The super agent recognises the request for a presentation and responds with ‘[SLIDES]’. This triggers the generate-slides endpoint, to which the super agent passes the topic, content, slide count, and style. In that endpoint, an LLM generates an array of slide objects, each containing a type, title, content, and bullet points. The frontend receives this array and, using my static HTML layout, renders a preview that the user can download.
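To make that flow concrete, here is a minimal sketch of the two frontend-side pieces: spotting the ‘[SLIDES]’ marker and turning the slide array into static HTML. It is not the code from the repo. The `Slide` shape, the `SlideType` values, and the function names `wantsSlides`, `renderSlide`, and `renderDeck` are all assumptions made for illustration; the post only says each slide carries a type, title, content, and bullet points.

```typescript
// Hypothetical slide shape. The post names the fields but not their exact types.
type SlideType = "title" | "content" | "bullets";

interface Slide {
  type: SlideType;
  title: string;
  content?: string;
  bulletPoints?: string[];
}

// Marker the super agent replies with when the user asks for a presentation.
const SLIDES_MARKER = "[SLIDES]";

// True when the agent's reply asks the app to call the slide endpoint.
function wantsSlides(agentReply: string): boolean {
  return agentReply.indexOf(SLIDES_MARKER) !== -1;
}

// LLM output is untrusted text, so escape it before putting it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Static layout: only the slide content varies from deck to deck.
function renderSlide(slide: Slide): string {
  const body = slide.content ? `<p>${escapeHtml(slide.content)}</p>` : "";
  const items = (slide.bulletPoints ?? [])
    .map((point) => `<li>${escapeHtml(point)}</li>`)
    .join("");
  const list = items ? `<ul>${items}</ul>` : "";
  return `<section class="slide slide-${slide.type}"><h2>${escapeHtml(slide.title)}</h2>${body}${list}</section>`;
}

// Render the whole array returned by the endpoint into one preview string.
function renderDeck(slides: Slide[]): string {
  return slides.map(renderSlide).join("\n");
}
```

Keeping the layout in one plain function means the LLM only ever has to produce data, which is far easier to validate than generated markup. The .pptx export would read the same `Slide[]` array.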

Let’s discuss the Google Sheets and Docs integrations. I wanted a sidebar that shows the sheets/docs being edited in real time. It’s nice to see the changes instantly as your agent makes them. Composio to the rescue: I had the toolkits ready and just had to pass them to the generateText function from the AI SDK. I added code to render a resizable sidebar for any detected Google Drive document URL. I also integrated a web search tool, and then it was time for the browser.
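The "detected URL" step could look something like the sketch below. It is an illustration under assumptions, not the repo’s code: `findWorkspaceLinks` and the `WorkspaceLink` shape are hypothetical names, and the post doesn’t say which URL variant is handed to the iframe, so the `/edit` form used here is a guess.

```typescript
type WorkspaceKind = "spreadsheets" | "document";

// Hypothetical result shape for one detected Google Sheets or Docs link.
interface WorkspaceLink {
  kind: WorkspaceKind;
  id: string;
  url: string; // assumed iframe target; the post does not specify the variant
}

// Scan agent output for Google Sheets/Docs links, de-duplicated by file id.
function findWorkspaceLinks(text: string): WorkspaceLink[] {
  // Matches https://docs.google.com/spreadsheets/d/<id> and .../document/d/<id>.
  // Created per call because a global regex keeps state in lastIndex.
  const pattern =
    /https:\/\/docs\.google\.com\/(spreadsheets|document)\/d\/([A-Za-z0-9_-]+)/g;
  const links: WorkspaceLink[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(text)) !== null) {
    const kind = match[1] as WorkspaceKind;
    const id = match[2];
    if (links.some((link) => link.id === id)) continue; // already listed
    links.push({ kind, id, url: `https://docs.google.com/${kind}/d/${id}/edit` });
  }
  return links;
}
```

The sidebar component would then render one iframe per returned link, so a reply that mentions the same sheet twice still opens it only once.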

In Python, there are multiple browser-based agent libraries, but in JS there are very few. I planned to use a well-known browser provider, but it refused to let me sign in. I tried deleting the cookies, but with the deadline looming I couldn’t spend more time fixing that, so I looked at other options and chose Puppeteer since it was easy to integrate.

I provided Claude with the documentation for Composio’s custom tool creation. Claude created the Puppeteer tool, wrapped it in the custom tool format, and passed it to the super agent, giving it the ability to scrape pages, click elements, and input text.
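For a rough idea of what sits inside such a tool, here is a sketch of a dispatcher for the three abilities. It is an assumption-heavy illustration, not what Claude generated: `PageLike`, `BrowserAction`, `runBrowserAction`, and `MAX_CHARS` are hypothetical names, and the Composio custom-tool wrapper is left out entirely. `PageLike` lists only methods that Puppeteer’s `Page` also provides (`goto`, `click`, `type`, `content`), so the logic can be exercised without launching a browser.

```typescript
// Minimal subset of a browser page; Puppeteer's Page has methods with these names.
interface PageLike {
  goto(url: string): Promise<unknown>;
  click(selector: string): Promise<unknown>;
  type(selector: string, text: string): Promise<unknown>;
  content(): Promise<string>;
}

// Hypothetical input schema: one variant per ability the agent was given.
type BrowserAction =
  | { action: "scrape"; url: string }
  | { action: "click"; selector: string }
  | { action: "type"; selector: string; text: string };

// Cap on returned HTML so one page cannot flood the model's context (assumed value).
const MAX_CHARS = 20000;

// Run a single action and return a short text result for the agent.
async function runBrowserAction(page: PageLike, input: BrowserAction): Promise<string> {
  switch (input.action) {
    case "scrape": {
      await page.goto(input.url);
      const html = await page.content();
      return html.slice(0, MAX_CHARS);
    }
    case "click":
      await page.click(input.selector);
      return `clicked ${input.selector}`;
    case "type":
      await page.type(input.selector, input.text);
      return `typed into ${input.selector}`;
  }
}
```

With Puppeteer installed, a page from `puppeteer.launch()` followed by `browser.newPage()` could be passed in as the first argument. The discriminated union keeps the model’s tool input easy to validate before anything touches the browser.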

The final demo included reading data from Sheets/Docs and using it to generate slides dynamically. It worked, and I met the deadline.

The code is on GitHub. Fork it, break it, make it better.

How difficult was it to vibe code?

I have to admit that existing features broke a lot when I tried to add new ones. One persistent problem was Claude’s confusion between Tailwind v3 and v4, which meant I had to restore checkpoints to keep the UI from breaking. I wrote the code for all the route files myself, and I don’t think AI agents are as good at backend logic as they are at the frontend. I used one or two 21st.dev components for the UI.

