Gemini 2.5 Pro vs. Claude 3.7 Sonnet (thinking) vs. Grok 3 (think)

Gemini 2.5 Pro vs. Claude 3.7 Sonnet (thinking) vs. Grok 3 (think)

Google dropped its best-ever creation, Gemini 2.5 Pro Experimental, on March 25. It is a stupidly incredible reasoning model shining on every benchmark, but guess what went wrong? Yes, you guessed it right: OpenAI. They released the GPT-4o image generation model, and everyone isekai’d to Ghibli world. Not that I complain; I enjoyed it myself, but it wholly shrouded the release hype of Gemini 2.5 Pro, a much bigger deal.

This tells a lot about how humans work more than anything. We’re a lot more hedonist than we like to admit. Anyway, we now have the best from Google and for FREE. Yes, you can access the model in Google AI Studio for free. Again, why is it not on Gemini, and why is AI Studio not available for mobile users? If it wants to win over the masses, Google needs to sort these things out. As an LLM user, I often find myself using mobile apps and not having these complimentary offerings substantially impact adoption.

We saw how Deepseek trended in app stores after Deepseek r1, and Google does not have them despite owning Android. Crazy!

Table of Contents

TL;DR

If you’ve somewhere to go, here’s the summary.

• Finally, a great model from Google that is on par with the rest of the AI big boys.

• Improved coding performance, one million context window, and much better multi-lingual understanding.

• The model excels in raw coding performance and highly positive user feedback.

• It can solve most day-to-day coding tasks better than any reasoning model.

• However, Grok 3 and Claude 3.7 Sonnet have more nuanced and streamlined reasoning.

Notable improvements

Gemini 2.5 Pro is an advanced reasoning model, building upon Gemini 2.0 Flash’s architecture with enhanced post-training and potentially larger parameter sizes for superior performance.

What stands out is its colossal one million context size. You can throw an entire codebase at it and still get reasonably good performance. The closest was the 200k context length from Claude 3.7 Sonnet.

The new Gemini 2.5 Pro is much better at multilingual understanding. As noted by Omar, it even broke the LMSYS leaderboard for Spanish.

Shining on Benchmarks

Gemini 2.5 Pro has performed exceedingly well on all major benchmarks, such as Lmsys, Livebench, GPQA, AIME, SWEbench verified, etc.

However, on the ARC-AGI benchmark, it scored on par with Deepseek r1 but less than Claude 3.7 (thinking) and o3-mini-high.

The Gemini 2.5 Pro topped WeirdML benchmark, a benchmark testing LLMs ability to solve weird and unusual machine learning tasks by writing working PyTorch code and iteratively learn from feedback.

It’s also now the leading model on Paul Gauthier‘s Aider Polyglot benchmark.

Gemini 2.5 Pro in real-world

Since the new Gemini 2.5 Pro was launched, people have been having a field day, especially in coding. Here are some great examples.

Xeophon tested Gemini 2.5 Pro, Claude 3.7 Sonnet, and GPT-4.5 against today’s Wordle problem.

Prompt to shader by Ethan Mollick.

Gemini 2.5 is a very impressive model so far.

For example, it is only the third LLM (after Grok 3 and o3-mini-high) to be able to pull off "create a visually interesting shader that can run in twigl-dot-app make it like the ocean in a storm," and I think the best so far.

Phillip Schmid generated a flight simulation in 3js in one go with Gemini 2.5 Pro.

Mathew Berman on made a Rubik's cube solver in 3d.

A Rubik's cube generator AND solver. I've tried this with Claude 3.7 thinking, DeepSeek etc and never came close to this.

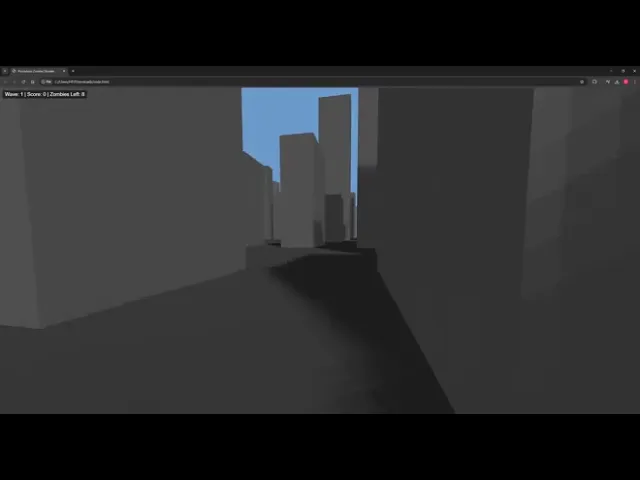

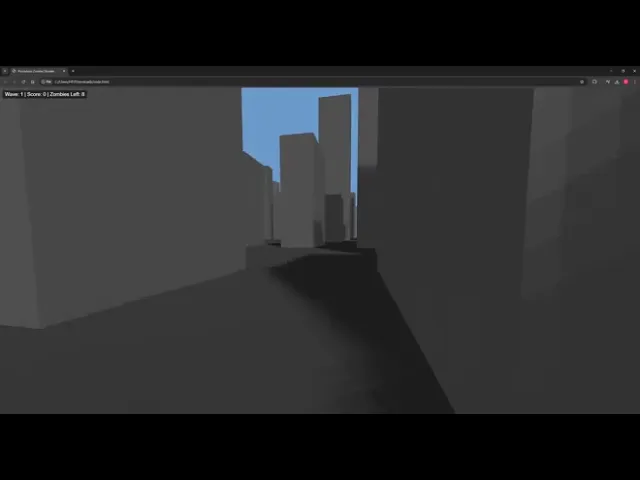

Phil created a zombie game in a single shot.

Wow crazy, Gemini 2.5 Pro one-shotted this.

"Create a 3d zombie wave shooter without any downloads with Three.js which runs in the browser. The settings should be in an apocalyptic city which is procedural generated. Without the need of a server"

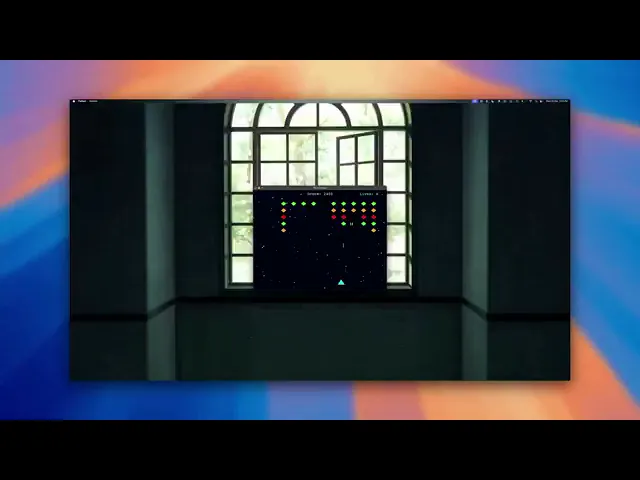

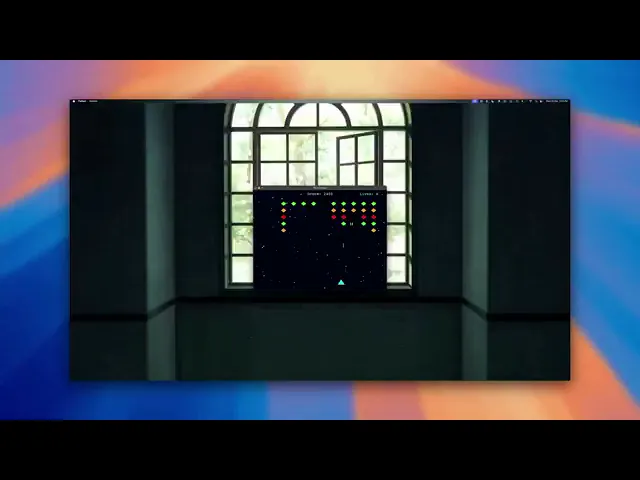

Karan Vaidya built an agent to build games end-to-end with Composio tools.

Gemini 2.5 Pro might just be the best model for vibe coding games!!!

We built an AI Agent and asked it to create Galaga, and in seconds, it generated a fully playable arcade shooter. How it works:

Uses Pygame to create the game

Generates, saves, and runs the game instantly

Powered by Composio's File and Shell Tool

Gemini 2.5 Pro vs. Claude 3.7 thinking vs. Grok 3 thinking

I have used and tested all the models, and among reasoning models, Claude 3.7 and Grok 3 stood out the most. And they are currently the best reasoning models available on the market.

So, how does the new Gemini 2.5 Pro compare to these reasoning models? I was as curious as the second person, so I ran three coding tests to determine which one was the best.

Coding problems

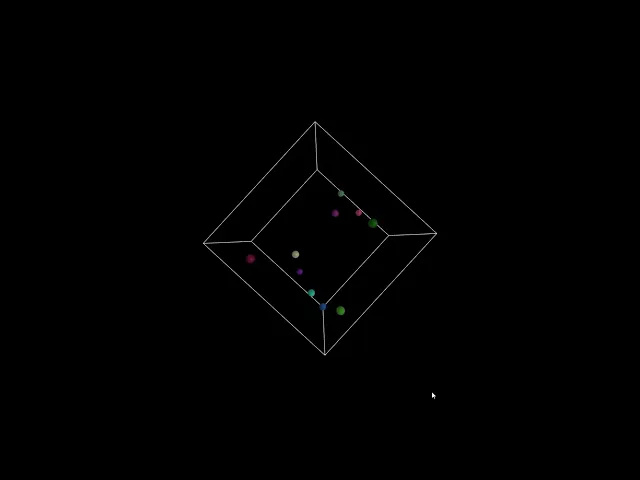

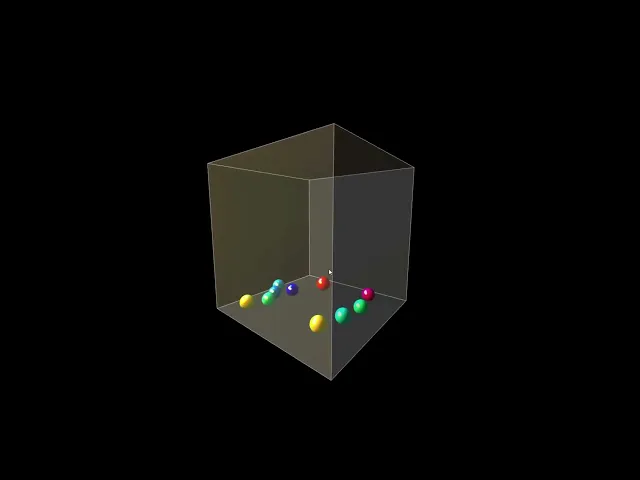

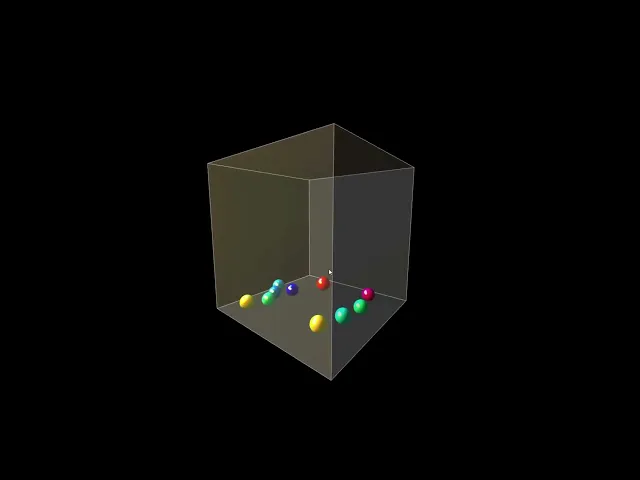

1. Interactive Bouncing Balls in a 3D Cube

Prompt: Create a 3D scene using Three.js with a visible transparent cube containing 10 coloured balls. The balls should bounce off the cube walls and respond to mouse movement and camera position. Use OrbitControls and add ambient lighting. All code should be in a single HTML file.

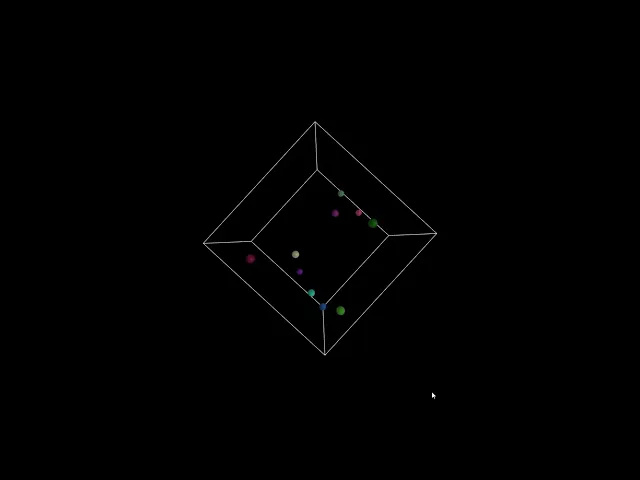

Response from Grok 3 Think:

You can find the code it generated here: Link

Here's the output of the program:

The result from Grok 3 looked good at first. The balls were moving the right way in the beginning, and everything showed up as expected. But the balls started sticking together after a short time and stopped bouncing properly. They began to collapse into each other, and the scene did not unfold as I had hoped.

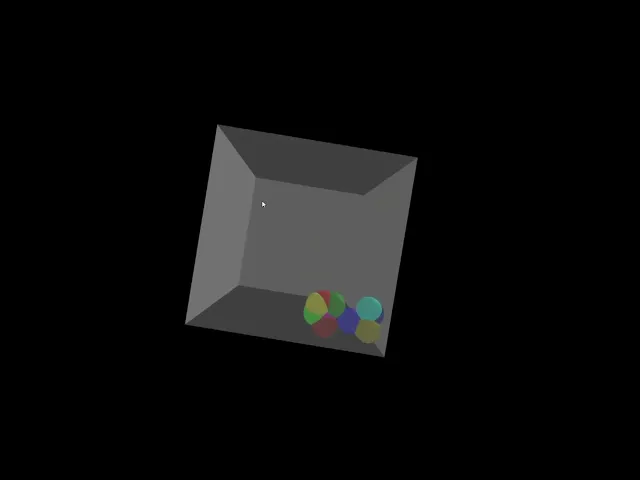

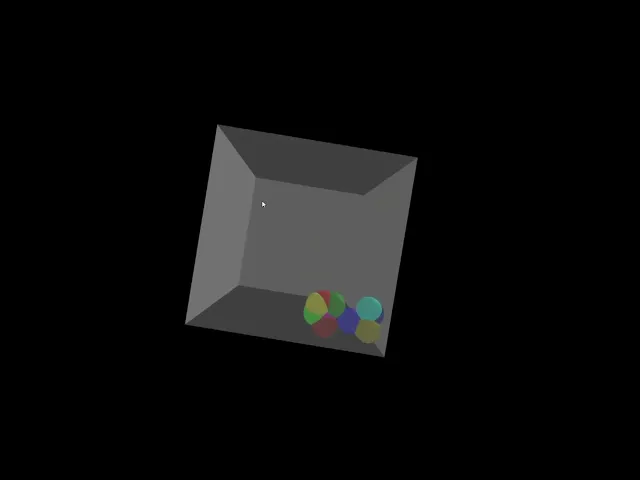

Response from Gemini 2.5:

You can find the code it generated here: Link

Here's the output of the program:

Gemini 2.5 gave the most accurate result. The movement was smooth, and the way the balls bounced felt surprisingly close to real-world physics. Everything ran flawlessly from the start.

Response from Claude 3.7 Sonnet Thinking:

You can find the code it generated here: Link

Here's the output of the program:

Claude 3.7 didn’t quite land it. The cube and balls looked great, but the ball movement felt off. They bounced at the start, then just froze. The interaction was missing, and the whole thing felt unfinished.

Summary

Gemini 2.5 gave the best result. The ball movement was smooth and realistic, and everything worked as expected. Grok 3 started off well, but the balls quickly began to stick together and stop bouncing, which broke the effect. Claude 3.7 had a nice setup, but the balls froze soon after starting, and the scene felt unfinished.

Gemini was the only one that stayed solid from start to finish.

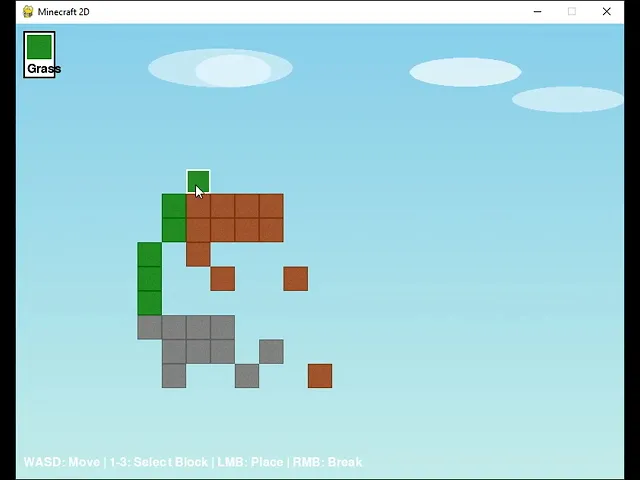

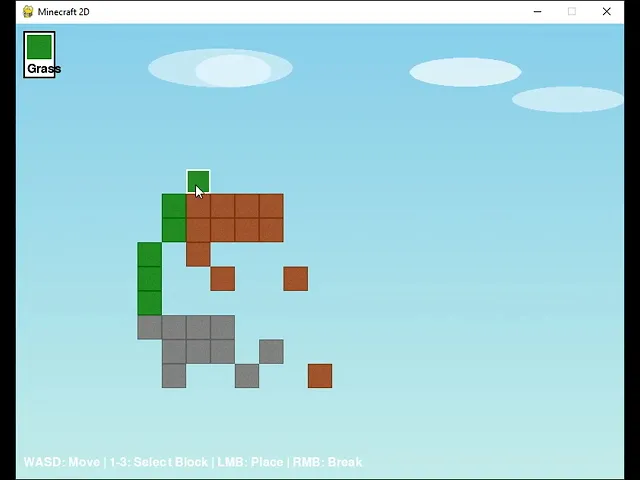

3. Minecraft with Pygame

It's time to get creative with the models. For this one, I wanted to see how well they could prototype a simple Minecraft-style game using Pygame.

The goal wasn’t to rebuild Minecraft from scratch but to generate a basic world made of blocks with the ability to move around and place or remove blocks—basically, something that looks and feels like Minecraft Lite.

Prompt:

Create a 2D Minecraft-style game in Pygame. Build a colourful, scrollable grid world with smooth camera movement using WASD keys. Left-click to place blocks, right-click to break them. Include at least three block types with different textures or colours. Add simple animations, a sky background, and basic sound effects for placing and breaking blocks. Everything should run in one Python file and feel playful and responsive.

Response from Grok 3 Think

You can find the code it generated here: Link

Grok gave me a working Minecraft-style sandbox, but the result was disappointing. Placing blocks had some delay, the movement felt a bit slow, and the overall design was not very appealing. It worked, but the experience didn’t feel smooth or polished.

There was no scrolling or proper camera movement, and the blocks didn’t snap to a grid, which made the building feel messy. It was usable but far from enjoyable.

Here is the output:

Response from Gemini 2.5

You can find the code it generated here: Link

Gemini absolutely nailed this one. It gave me a smooth, fully playable Minecraft-style game that felt more polished than the other two. The controls felt like they came straight out of an actual game.

I could move the camera with WASD, place blocks with the left mouse button, and remove them with the right. Switching between block types like Grass, Dirt, and Stone using the number keys worked perfectly, and even ESC to quit was built in.

Everything ran cleanly, the block placement felt satisfying, and the camera movement was smooth. It just felt right.

Here is the output:

Response from Claude 3.7 Sonnet Thinking

You can find the code it generated here: Link

Claude went all in on this one. It gave me a fully playable 2D Minecraft-style game that actually felt like a game. I could move around with WASD, place and break blocks with mouse clicks, and even switch between five different block types using the number keys. Its visual block selector at the bottom made it feel more like a real UI than just boxes on a screen.

And then came the extras. Smooth camera movement, particle effects when blocks broke, soft shadows, a gradient sky, and even terrain that looked like something you’d actually want to build on. Claude was not messing around.

Here is the output:

Summary

All three models successfully built a working Minecraft-style game, but the results varied. Grok 3's version was functional but disappointing. Block placement had a noticeable delay, the movement felt slow, and the lack of grid snapping or camera controls made the experience rough.

Gemini 2.5 and Claude 3.7 both delivered strong, playable games with smooth controls and responsive mechanics. Gemini focused on clean execution and solid gameplay, while Claude added extra touches like particle effects, block switching, and UI enhancements.

In terms of overall quality, Gemini and Claude stood out equally, each offering a complete and enjoyable experience in their own way. Grok worked, but didn’t match the level of the other two.

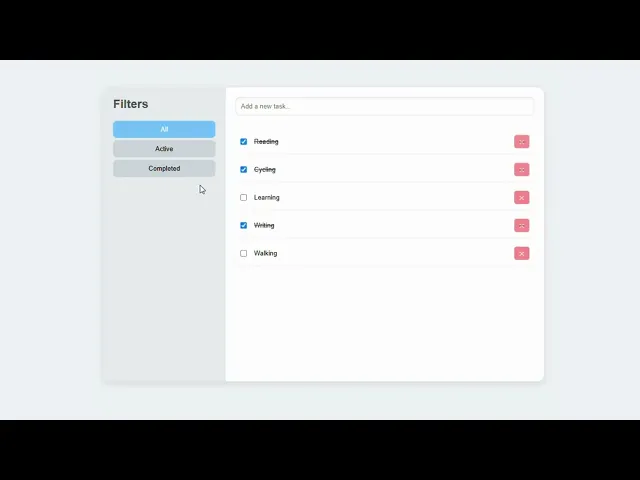

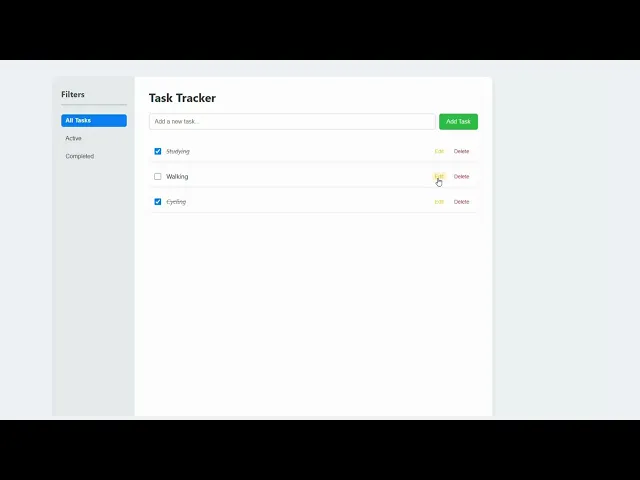

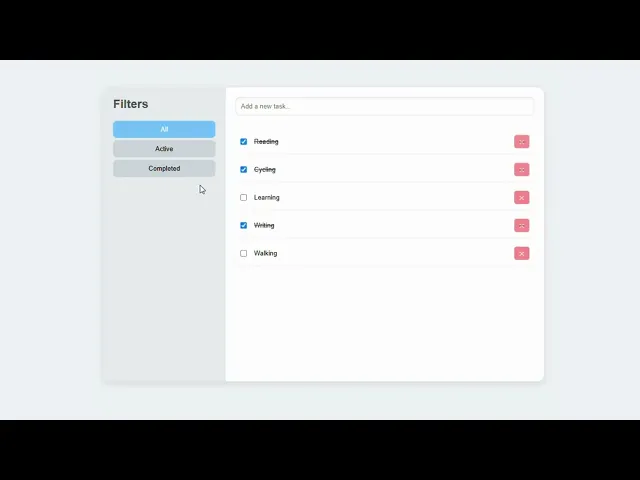

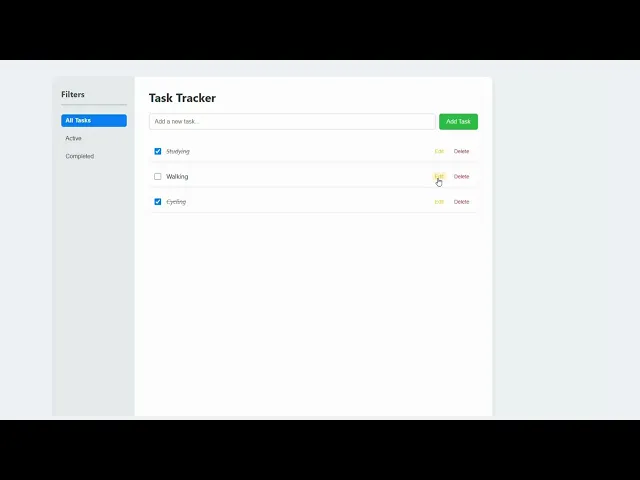

3. Build a Creative Web App

For the final challenge, I wanted to see how the models handle building a complete, interactive website. This one isn't just about clean HTML and CSS — it needs structure, logic, storage, and a touch of design sense.

Prompt:

Build a modern, responsive "Task Tracker" web app using HTML, CSS, and JavaScript. The app should let users add, edit, delete, and complete tasks. Include a sidebar with filters like “All”, “Active”, and “Completed”. Use localStorage to save tasks between sessions. The layout should be split-screen, with the task list on the right and filters on the left. Style it with a clean, minimal UI. Use soft shadows, rounded corners, and a muted color palette. Add basic animations for task transitions (such as fade or slide), and ensure they work well on both desktop and mobile devices. Everything should run in a single HTML file.

Response from Grok 3 Think

You can find the code it generated here: Link

Grok showed up for this one. It provided me with a fully functional task tracker that included every feature I requested. Adding, editing, completing, deleting, and filtering tasks all worked precisely how they should.

The layout followed the split-screen style, and the design looked surprisingly polished. Soft shadows, clean fonts, and smooth interactions made it feel like something you could actually use.

Here is the output:

Response from Gemini 2.5

You can find the code it generated here: Link

Gemini completely outperformed the rest on this one. The flow of the app felt natural from start to finish. Every interaction was smooth, the UI was clean and modern, and the layout followed the split-screen structure perfectly. Adding, editing, deleting, and filtering tasks all worked without a hitch.

The transitions were subtle, the animations felt polished, and even the little UI details made the whole thing feel a step above the others. It just clicked.

Here is the output:

Response from Claude 3.7 Sonnet Thinking

You can find the code it generated here: Link

Claude’s output was pretty much on the same level as Grok. It checked all the boxes, task creation, editing, deleting, completing, and filtering. The layout followed the prompt well, and the split-screen design looked clean. It even had nice visual touches that made the app more enjoyable.

Here is the output:

Summary

Grok 3, Claude 3.7, and Gemini 2.5 all delivered solid task tracker apps that followed the prompt closely. They handled adding, editing, deleting, filtering, and saving tasks with ease. The split-screen layout was implemented well in all three, and the designs felt clean and easy to use.

Claude added a bit more visual polish, Grok kept things simple and smooth, and Gemini brought a well-structured layout with a strong sense of flow. Each had its own strengths, but overall, they all performed at the same level and produced results that felt complete and ready to use.

Complex Reasoning

1. Riddle to check cognitive bias

Prompt: A woman and her son are in a car accident. The woman is sadly killed. The boy is rushed to the hospital. When the doctor sees the boy, he says, “I can’t operate on this child; he is my son! How is this possible?

Claude 3.7 Sonnet was able to get it right.

However, changing the same a bit derails the model.

Prompt: The surgeon, who is the boy’s father, says, “I can’t operate on this child; he is my son”, who is the surgeon of this child. Be straightforward”.

Grok 3’s answer for the original prompt.

Therefore, the answer is: The doctor is the boy’s father. The woman who died in the accident was the boy’s mother and the doctor’s wife. The doctor cannot operate on the boy because he is his son, and medical ethics discourage doctors from treating close family members due to emotional involvement. This explains both the relationship and the doctor’s refusal to proceed with the surgery.<br><br>

Therefore, the answer is: The doctor is the boy’s father. The woman who died in the accident was the boy’s mother and the doctor’s wife. The doctor cannot operate on the boy because he is his son, and medical ethics discourage doctors from treating close family members due to emotional involvement. This explains both the relationship and the doctor’s refusal to proceed with the surgery.<br><br>

Therefore, the answer is: The doctor is the boy’s father. The woman who died in the accident was the boy’s mother and the doctor’s wife. The doctor cannot operate on the boy because he is his son, and medical ethics discourage doctors from treating close family members due to emotional involvement. This explains both the relationship and the doctor’s refusal to proceed with the surgery.<br><br>

For the second prompt.

While the surgeon says he cannot operate, likely due to ethical guidelines preventing doctors from treating close family members to avoid emotional bias, the question does not ask who will perform the surgery. Instead, it asks, “Who is the surgeon of this child?” This focuses on identity, not action. Since the problem defines the surgeon as the boy’s father and provides no indication of another surgeon, the straightforward answer is based on the given description.<br>Thus, the surgeon of this child is the boy’s father.<br>Answer: the boy’s father<br

While the surgeon says he cannot operate, likely due to ethical guidelines preventing doctors from treating close family members to avoid emotional bias, the question does not ask who will perform the surgery. Instead, it asks, “Who is the surgeon of this child?” This focuses on identity, not action. Since the problem defines the surgeon as the boy’s father and provides no indication of another surgeon, the straightforward answer is based on the given description.<br>Thus, the surgeon of this child is the boy’s father.<br>Answer: the boy’s father<br

While the surgeon says he cannot operate, likely due to ethical guidelines preventing doctors from treating close family members to avoid emotional bias, the question does not ask who will perform the surgery. Instead, it asks, “Who is the surgeon of this child?” This focuses on identity, not action. Since the problem defines the surgeon as the boy’s father and provides no indication of another surgeon, the straightforward answer is based on the given description.<br>Thus, the surgeon of this child is the boy’s father.<br>Answer: the boy’s father<br

So, Grok 3 (thinking) was better at overall reasoning; it was able to answer it correctly.

2. Playing Tic-tac-toe

In this test, I will provide a partial Tic Tac Toe board state and ask the models to determine the next position that will ensure a 100% victory.

The model was able to identify one possible scenario. Great indeed.

Claude 3.7 Sonnet's response

You should place your X in the top-right corner (upper right position). This creates a "fork" - a situation where you have two potential winning lines: You'll threaten to complete the top row (where you already have the top-left X) You'll also threaten to complete the diagonal from top-right to bottom-left (where you already have the bottom-left X) Since your opponent can only block one of these threats on their next turn, you'll be able to win on your subsequent move by completing whichever line they didn't block. This is a 100% guaranteed win strategy

You should place your X in the top-right corner (upper right position). This creates a "fork" - a situation where you have two potential winning lines: You'll threaten to complete the top row (where you already have the top-left X) You'll also threaten to complete the diagonal from top-right to bottom-left (where you already have the bottom-left X) Since your opponent can only block one of these threats on their next turn, you'll be able to win on your subsequent move by completing whichever line they didn't block. This is a 100% guaranteed win strategy

You should place your X in the top-right corner (upper right position). This creates a "fork" - a situation where you have two potential winning lines: You'll threaten to complete the top row (where you already have the top-left X) You'll also threaten to complete the diagonal from top-right to bottom-left (where you already have the bottom-left X) Since your opponent can only block one of these threats on their next turn, you'll be able to win on your subsequent move by completing whichever line they didn't block. This is a 100% guaranteed win strategy

Grok 3 thinking's response

All three models were able to find it. Grok, however, was too verbose, and none could find both positions, 3 and 5.

3. Complex blood relationship

So, this is a very complex and somewhat vague blood relationship riddle that most likely wasn't in the training data.

Ptompt: Jeff has two brothers, and each of his brothers has three sisters. Each of the sisters has four step-brothers, and each step-brother has five step-sisters. So, how many siblings are in the extended family?

Gemini 2.5 Pro's response

Gemini could not solve it, let's see the other models.

Claude 3.7 Sonnet's response

Perfect, correct answer.

Grok 3's response

Though it didn't answer it correctly, it could reason well and consider the right relationship.

Summary of math abilities

Of all the thinkers, Grok 3 thinking is the clearest, followed by Claude 3.7 Sonnet and then Gemini 2.5 Pro.

Mathematics

1. GCD of an infinite series

Prompt: Find the GCD of this series set {n^99(n^60-1): n>1}

It is a difficult question, and I would not be able to solve it. So, let’s see if the models get it right. Only Deepseek r1 and o1-pro were able to do it.

Gemini 2.5 Pro

Okay, it was correct.

Grok 3 was wrong

2. Find (x-14)^10 where x is the number of vowels in the response

To be honest, it requires more reasoning than math, but let’s see how the models fare.

Claude 3.7 Sonnet was able to answer it correctly.

Grok 3

Completely failed to get the gist of the question.

Summary of Math Abilities

In raw mathematics, I find the Gemini 2.5 Pro to be better, but when it involves a bit of reasoning, it doesn’t perform as well. While Claude 3.7 Sonnet is most balanced here, and Grok 3 is the third.

Conclusion

Google has finally unveiled a great model that competes with the other frontier models, which is a definite sign that this improvement will continue in the future.

So, here are my final findings.

Extremely good at coding

Not good at raw reasoning compared to Grok 3 (thinking) and Claude 3.7 Sonnet (thinking).

For raw math, it's a great model.

Google dropped its best-ever creation, Gemini 2.5 Pro Experimental, on March 25. It is a stupidly incredible reasoning model shining on every benchmark, but guess what went wrong? Yes, you guessed it right: OpenAI. They released the GPT-4o image generation model, and everyone isekai’d to Ghibli world. Not that I complain; I enjoyed it myself, but it wholly shrouded the release hype of Gemini 2.5 Pro, a much bigger deal.

This tells a lot about how humans work more than anything. We’re a lot more hedonist than we like to admit. Anyway, we now have the best from Google and for FREE. Yes, you can access the model in Google AI Studio for free. Again, why is it not on Gemini, and why is AI Studio not available for mobile users? If it wants to win over the masses, Google needs to sort these things out. As an LLM user, I often find myself using mobile apps and not having these complimentary offerings substantially impact adoption.

We saw how Deepseek trended in app stores after Deepseek r1, and Google does not have them despite owning Android. Crazy!

Table of Contents

TL;DR

If you’ve somewhere to go, here’s the summary.

• Finally, a great model from Google that is on par with the rest of the AI big boys.

• Improved coding performance, one million context window, and much better multi-lingual understanding.

• The model excels in raw coding performance and highly positive user feedback.

• It can solve most day-to-day coding tasks better than any reasoning model.

• However, Grok 3 and Claude 3.7 Sonnet have more nuanced and streamlined reasoning.

Notable improvements

Gemini 2.5 Pro is an advanced reasoning model, building upon Gemini 2.0 Flash’s architecture with enhanced post-training and potentially larger parameter sizes for superior performance.

What stands out is its colossal one million context size. You can throw an entire codebase at it and still get reasonably good performance. The closest was the 200k context length from Claude 3.7 Sonnet.

The new Gemini 2.5 Pro is much better at multilingual understanding. As noted by Omar, it even broke the LMSYS leaderboard for Spanish.

Shining on Benchmarks

Gemini 2.5 Pro has performed exceedingly well on all major benchmarks, such as Lmsys, Livebench, GPQA, AIME, SWEbench verified, etc.

However, on the ARC-AGI benchmark, it scored on par with Deepseek r1 but less than Claude 3.7 (thinking) and o3-mini-high.

The Gemini 2.5 Pro topped WeirdML benchmark, a benchmark testing LLMs ability to solve weird and unusual machine learning tasks by writing working PyTorch code and iteratively learn from feedback.

It’s also now the leading model on Paul Gauthier‘s Aider Polyglot benchmark.

Gemini 2.5 Pro in real-world

Since the new Gemini 2.5 Pro was launched, people have been having a field day, especially in coding. Here are some great examples.

Xeophon tested Gemini 2.5 Pro, Claude 3.7 Sonnet, and GPT-4.5 against today’s Wordle problem.

Prompt to shader by Ethan Mollick.

Gemini 2.5 is a very impressive model so far.

For example, it is only the third LLM (after Grok 3 and o3-mini-high) to be able to pull off "create a visually interesting shader that can run in twigl-dot-app make it like the ocean in a storm," and I think the best so far.

Phillip Schmid generated a flight simulation in 3js in one go with Gemini 2.5 Pro.

Mathew Berman on made a Rubik's cube solver in 3d.

A Rubik's cube generator AND solver. I've tried this with Claude 3.7 thinking, DeepSeek etc and never came close to this.

Phil created a zombie game in a single shot.

Wow crazy, Gemini 2.5 Pro one-shotted this.

"Create a 3d zombie wave shooter without any downloads with Three.js which runs in the browser. The settings should be in an apocalyptic city which is procedural generated. Without the need of a server"

Karan Vaidya built an agent to build games end-to-end with Composio tools.

Gemini 2.5 Pro might just be the best model for vibe coding games!!!

We built an AI Agent and asked it to create Galaga, and in seconds, it generated a fully playable arcade shooter. How it works:

Uses Pygame to create the game

Generates, saves, and runs the game instantly

Powered by Composio's File and Shell Tool

Gemini 2.5 Pro vs. Claude 3.7 thinking vs. Grok 3 thinking

I have used and tested all the models, and among reasoning models, Claude 3.7 and Grok 3 stood out the most. And they are currently the best reasoning models available on the market.

So, how does the new Gemini 2.5 Pro compare to these reasoning models? I was as curious as the second person, so I ran three coding tests to determine which one was the best.

Coding problems

1. Interactive Bouncing Balls in a 3D Cube

Prompt: Create a 3D scene using Three.js with a visible transparent cube containing 10 coloured balls. The balls should bounce off the cube walls and respond to mouse movement and camera position. Use OrbitControls and add ambient lighting. All code should be in a single HTML file.

Response from Grok 3 Think:

You can find the code it generated here: Link

Here's the output of the program:

The result from Grok 3 looked good at first. The balls were moving the right way in the beginning, and everything showed up as expected. But the balls started sticking together after a short time and stopped bouncing properly. They began to collapse into each other, and the scene did not unfold as I had hoped.

Response from Gemini 2.5:

You can find the code it generated here: Link

Here's the output of the program:

Gemini 2.5 gave the most accurate result. The movement was smooth, and the way the balls bounced felt surprisingly close to real-world physics. Everything ran flawlessly from the start.

Response from Claude 3.7 Sonnet Thinking:

You can find the code it generated here: Link

Here's the output of the program:

Claude 3.7 didn’t quite land it. The cube and balls looked great, but the ball movement felt off. They bounced at the start, then just froze. The interaction was missing, and the whole thing felt unfinished.

Summary

Gemini 2.5 gave the best result. The ball movement was smooth and realistic, and everything worked as expected. Grok 3 started off well, but the balls quickly began to stick together and stop bouncing, which broke the effect. Claude 3.7 had a nice setup, but the balls froze soon after starting, and the scene felt unfinished.

Gemini was the only one that stayed solid from start to finish.

3. Minecraft with Pygame

It's time to get creative with the models. For this one, I wanted to see how well they could prototype a simple Minecraft-style game using Pygame.

The goal wasn’t to rebuild Minecraft from scratch but to generate a basic world made of blocks with the ability to move around and place or remove blocks—basically, something that looks and feels like Minecraft Lite.

Prompt:

Create a 2D Minecraft-style game in Pygame. Build a colourful, scrollable grid world with smooth camera movement using WASD keys. Left-click to place blocks, right-click to break them. Include at least three block types with different textures or colours. Add simple animations, a sky background, and basic sound effects for placing and breaking blocks. Everything should run in one Python file and feel playful and responsive.

Response from Grok 3 Think

You can find the code it generated here: Link

Grok gave me a working Minecraft-style sandbox, but the result was disappointing. Placing blocks had some delay, the movement felt a bit slow, and the overall design was not very appealing. It worked, but the experience didn’t feel smooth or polished.

There was no scrolling or proper camera movement, and the blocks didn’t snap to a grid, which made the building feel messy. It was usable but far from enjoyable.

Here is the output:

Response from Gemini 2.5

You can find the code it generated here: Link

Gemini absolutely nailed this one. It gave me a smooth, fully playable Minecraft-style game that felt more polished than the other two. The controls felt like they came straight out of an actual game.

I could move the camera with WASD, place blocks with the left mouse button, and remove them with the right. Switching between block types like Grass, Dirt, and Stone using the number keys worked perfectly, and even ESC to quit was built in.

Everything ran cleanly, the block placement felt satisfying, and the camera movement was smooth. It just felt right.

Here is the output:

Response from Claude 3.7 Sonnet Thinking

You can find the code it generated here: Link

Claude went all in on this one. It gave me a fully playable 2D Minecraft-style game that actually felt like a game. I could move around with WASD, place and break blocks with mouse clicks, and even switch between five different block types using the number keys. Its visual block selector at the bottom made it feel more like a real UI than just boxes on a screen.

And then came the extras. Smooth camera movement, particle effects when blocks broke, soft shadows, a gradient sky, and even terrain that looked like something you’d actually want to build on. Claude was not messing around.

Here is the output:

Summary

All three models successfully built a working Minecraft-style game, but the results varied. Grok 3's version was functional but disappointing. Block placement had a noticeable delay, the movement felt slow, and the lack of grid snapping or camera controls made the experience rough.

Gemini 2.5 and Claude 3.7 both delivered strong, playable games with smooth controls and responsive mechanics. Gemini focused on clean execution and solid gameplay, while Claude added extra touches like particle effects, block switching, and UI enhancements.

In terms of overall quality, Gemini and Claude stood out equally, each offering a complete and enjoyable experience in their own way. Grok worked, but didn’t match the level of the other two.

3. Build a Creative Web App

For the final challenge, I wanted to see how the models handle building a complete, interactive website. This one isn't just about clean HTML and CSS — it needs structure, logic, storage, and a touch of design sense.

Prompt:

Build a modern, responsive "Task Tracker" web app using HTML, CSS, and JavaScript. The app should let users add, edit, delete, and complete tasks. Include a sidebar with filters like “All”, “Active”, and “Completed”. Use localStorage to save tasks between sessions. The layout should be split-screen, with the task list on the right and filters on the left. Style it with a clean, minimal UI. Use soft shadows, rounded corners, and a muted color palette. Add basic animations for task transitions (such as fade or slide), and ensure they work well on both desktop and mobile devices. Everything should run in a single HTML file.

Response from Grok 3 Think

You can find the code it generated here: Link

Grok showed up for this one. It provided me with a fully functional task tracker that included every feature I requested. Adding, editing, completing, deleting, and filtering tasks all worked precisely how they should.

The layout followed the split-screen style, and the design looked surprisingly polished. Soft shadows, clean fonts, and smooth interactions made it feel like something you could actually use.

Here is the output:

Response from Gemini 2.5

You can find the code it generated here: Link

Gemini completely outperformed the rest on this one. The flow of the app felt natural from start to finish. Every interaction was smooth, the UI was clean and modern, and the layout followed the split-screen structure perfectly. Adding, editing, deleting, and filtering tasks all worked without a hitch.

The transitions were subtle, the animations felt polished, and even the little UI details made the whole thing feel a step above the others. It just clicked.

Here is the output:

Response from Claude 3.7 Sonnet Thinking

You can find the code it generated here: Link

Claude’s output was pretty much on the same level as Grok. It checked all the boxes, task creation, editing, deleting, completing, and filtering. The layout followed the prompt well, and the split-screen design looked clean. It even had nice visual touches that made the app more enjoyable.

Here is the output:

Summary

Grok 3, Claude 3.7, and Gemini 2.5 all delivered solid task tracker apps that followed the prompt closely. They handled adding, editing, deleting, filtering, and saving tasks with ease. The split-screen layout was implemented well in all three, and the designs felt clean and easy to use.

Claude added a bit more visual polish, Grok kept things simple and smooth, and Gemini brought a well-structured layout with a strong sense of flow. Each had its own strengths, but overall, they all performed at the same level and produced results that felt complete and ready to use.

Complex Reasoning

1. Riddle to check cognitive bias

Prompt: A woman and her son are in a car accident. The woman is sadly killed. The boy is rushed to the hospital. When the doctor sees the boy, he says, “I can’t operate on this child; he is my son! How is this possible?

Claude 3.7 Sonnet was able to get it right.

However, changing the same a bit derails the model.

Prompt: The surgeon, who is the boy’s father, says, “I can’t operate on this child; he is my son”, who is the surgeon of this child. Be straightforward”.

Grok 3’s answer for the original prompt.

Therefore, the answer is: The doctor is the boy’s father. The woman who died in the accident was the boy’s mother and the doctor’s wife. The doctor cannot operate on the boy because he is his son, and medical ethics discourage doctors from treating close family members due to emotional involvement. This explains both the relationship and the doctor’s refusal to proceed with the surgery.<br><br>

For the second prompt.

While the surgeon says he cannot operate, likely due to ethical guidelines preventing doctors from treating close family members to avoid emotional bias, the question does not ask who will perform the surgery. Instead, it asks, “Who is the surgeon of this child?” This focuses on identity, not action. Since the problem defines the surgeon as the boy’s father and provides no indication of another surgeon, the straightforward answer is based on the given description.<br>Thus, the surgeon of this child is the boy’s father.<br>Answer: the boy’s father<br

So, Grok 3 (thinking) was better at overall reasoning; it was able to answer it correctly.

2. Playing Tic-tac-toe

In this test, I will provide a partial Tic Tac Toe board state and ask the models to determine the next position that will ensure a 100% victory.

The model was able to identify one possible scenario. Great indeed.

Claude 3.7 Sonnet's response

You should place your X in the top-right corner (upper right position). This creates a "fork" - a situation where you have two potential winning lines: You'll threaten to complete the top row (where you already have the top-left X) You'll also threaten to complete the diagonal from top-right to bottom-left (where you already have the bottom-left X) Since your opponent can only block one of these threats on their next turn, you'll be able to win on your subsequent move by completing whichever line they didn't block. This is a 100% guaranteed win strategy

Grok 3 thinking's response

All three models were able to find it. Grok, however, was too verbose, and none could find both positions, 3 and 5.

3. Complex blood relationship

So, this is a very complex and somewhat vague blood relationship riddle that most likely wasn't in the training data.

Ptompt: Jeff has two brothers, and each of his brothers has three sisters. Each of the sisters has four step-brothers, and each step-brother has five step-sisters. So, how many siblings are in the extended family?

Gemini 2.5 Pro's response

Gemini could not solve it, let's see the other models.

Claude 3.7 Sonnet's response

Perfect, correct answer.

Grok 3's response

Though it didn't answer it correctly, it could reason well and consider the right relationship.

Summary of math abilities

Of all the thinkers, Grok 3 thinking is the clearest, followed by Claude 3.7 Sonnet and then Gemini 2.5 Pro.

Mathematics

1. GCD of an infinite series

Prompt: Find the GCD of this series set {n^99(n^60-1): n>1}

It is a difficult question, and I would not be able to solve it. So, let’s see if the models get it right. Only Deepseek r1 and o1-pro were able to do it.

Gemini 2.5 Pro

Okay, it was correct.

Grok 3 was wrong

2. Find (x-14)^10 where x is the number of vowels in the response

To be honest, it requires more reasoning than math, but let’s see how the models fare.

Claude 3.7 Sonnet was able to answer it correctly.

Grok 3

Completely failed to get the gist of the question.

Summary of Math Abilities

In raw mathematics, I find the Gemini 2.5 Pro to be better, but when it involves a bit of reasoning, it doesn’t perform as well. While Claude 3.7 Sonnet is most balanced here, and Grok 3 is the third.

Conclusion

Google has finally unveiled a great model that competes with the other frontier models, which is a definite sign that this improvement will continue in the future.

So, here are my final findings.

Extremely good at coding

Not good at raw reasoning compared to Grok 3 (thinking) and Claude 3.7 Sonnet (thinking).

For raw math, it's a great model.

Recommended Blogs

Recommended Blogs

gemini, 25, pro, vs, claude, 37, sonnet, thinking, vs, grok, 3, think

Connect AI agents to SaaS apps in Minutes

Connect AI agents to SaaS apps in Minutes

We handle auth, tools, triggers, and logs, so you build what matters.

Stay updated.

Stay updated.