Notes on Anthropic's Computer Use Ability

Anthropic has updated its Haiku and Sonnet lineup. We now have Haiku 3.5, a smaller model that outperforms the former state-of-the-art Opus 3 on many benchmarks, and an upgraded Sonnet 3.5 with stronger coding abilities and a groundbreaking new feature called computer use. This is significant for everyone working on AI agents.

As someone who works at an AI start-up, I wanted to know how good it is and what it means for the future of AI agents.

I tested the model across several real-world use cases you might encounter, and in this article, I'll walk you through each of them.

Table of Contents

TL;DR

What is Anthropic’s Computer Use?

How do you set up Anthropic’s Computer Use?

Let’s see how Computer Use works

What future holds for Agents?

Final Verdict

TL;DR

If you are short on time, here is a summary of the article.

Computer Use is Anthropic’s latest LLM capability. It lets Sonnet 3.5 work out the pixel coordinates of on-screen elements in a screenshot.

Equipping the model with tools such as the computer tool lets it move the cursor and interact with a computer as a human user would.

The model easily handled simple tasks such as searching the internet, retrieving results, and creating spreadsheets.

It still works from screenshots, so do not expect it to handle real-time tasks like playing Pong or Mario, which require sub-second response times.

The model excels at many tasks, from research to filling out simple forms.

However, it is currently too expensive and too slow for anything practical. I spent nearly $30 on the experiments for this post.

What is Anthropic's Computer Use?

The Computer Use feature takes Sonnet's image understanding and logical reasoning to the next level by letting it interact with a computer directly. The model reads a screenshot, identifies the on-screen elements it needs, and then moves the cursor, clicks, and types text to operate the computer much like a human would.
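If you want to call the model directly instead of going through the demo, the request looks roughly like the sketch below. It assumes the October 2024 beta (tool type `computer_20241022`, beta flag `computer-use-2024-10-22`, model `claude-3-5-sonnet-20241022`); the `build_request` helper and the 1024x768 display size are my own illustrative choices, not part of the API.

```python
from typing import Any


def build_request(prompt: str, width: int = 1024, height: int = 768) -> dict[str, Any]:
    """Hypothetical helper: assemble keyword arguments for a computer-use request.

    Assumes the October 2024 beta; the dict is meant to be unpacked into
    anthropic.Anthropic().beta.messages.create(**kwargs).
    """
    return {
        "model": "claude-3-5-sonnet-20241022",
        "max_tokens": 1024,
        "betas": ["computer-use-2024-10-22"],
        "tools": [
            {
                # The screen the model "sees"; coordinates it returns are in this space.
                "type": "computer_20241022",
                "name": "computer",
                "display_width_px": width,
                "display_height_px": height,
                "display_number": 1,
            },
            # Companion tools the demo also exposes.
            {"type": "bash_20241022", "name": "bash"},
            {"type": "text_editor_20241022", "name": "str_replace_editor"},
        ],
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    kwargs = build_request("Find the top five movies of all time.")
    print(kwargs["tools"][0]["type"])
```

With the `anthropic` Python SDK installed and an API key configured, `anthropic.Anthropic().beta.messages.create(**build_request(...))` should send the request; the reply then contains `tool_use` blocks describing the actions the model wants to take.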

The model counts pixels in the screenshot to work out how far it needs to move the cursor vertically and horizontally to click in the right place.
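Because the model reasons in the pixel space of the screenshot it receives, an agent that sends downscaled screenshots (to save tokens, for instance) has to map the model's coordinates back onto the real screen. Here is a simplified, hypothetical version of that mapping; the helper names and the 1366x768 and 1920x1080 sizes are arbitrary examples, not taken from the demo.

```python
def to_screen(
    x: int, y: int, shot_size: tuple[int, int], screen_size: tuple[int, int]
) -> tuple[int, int]:
    """Map a point from screenshot pixels to real screen pixels (hypothetical helper)."""
    shot_w, shot_h = shot_size
    screen_w, screen_h = screen_size
    if not (0 <= x < shot_w and 0 <= y < shot_h):
        raise ValueError(f"({x}, {y}) lies outside the {shot_w}x{shot_h} screenshot")
    # Scale each axis independently so the point keeps its relative position.
    return round(x * screen_w / shot_w), round(y * screen_h / shot_h)


def to_screenshot(
    x: int, y: int, shot_size: tuple[int, int], screen_size: tuple[int, int]
) -> tuple[int, int]:
    """Inverse mapping: real screen pixels back to screenshot pixels."""
    return to_screen(x, y, screen_size, shot_size)


if __name__ == "__main__":
    # The centre of a 1366x768 screenshot lands at the centre of a 1920x1080 screen.
    print(to_screen(683, 384, (1366, 768), (1920, 1080)))
```

Scaling the axes separately keeps the mapping correct even when the screenshot and the screen have different aspect ratios.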

Sonnet is now the state-of-the-art model for computer interaction: it scored 14.7% on OSWorld, almost double the score of the next-best model.

If you want to learn more, check out Anthropic's official blog post; though they have not revealed much about their training, it is still a good read.

How do you set up Anthropic’s Computer Use?

Anthropic also released a developer quickstart to help you set it up quickly and explore how it works.

To use Sonnet, you need access through one of the supported providers: the Anthropic API (with an API key), Amazon Bedrock, or Google Cloud Vertex AI. The README explains how to set up each of them.

To get started, clone the repository.

git clone https://github.com/anthropics/anthropic-quickstarts

Move into the Computer Use demo directory.

cd anthropic-quickstarts/computer-use-demo

Now, set your AWS profile, then pull the image and run the container. I used the Bedrock-hosted model; you can use other providers as well.

export AWS_PROFILE=<your_aws_profile>

docker run -e API_PROVIDER=bedrock -e AWS_PROFILE=$AWS_PROFILE -e AWS_REGION=us-west-2 -v $HOME/.aws/credentials:/home/computeruse/.aws/credentials -v $HOME/.anthropic:/home/computeruse/.anthropic -p 5900:5900 -p 8501:8501 -p 6080:6080 -p 8080:8080 -it ghcr.io/anthropics/anthropic-quickstarts:computer-use-demo-latest

This takes a while; once it finishes, it spawns a Streamlit server.

Open http://localhost:8080 in your browser to view the site, which combines the chat interface with a view of the virtual desktop.

Send a message to see if everything is up and running.
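If the page does not load, a quick way to check that the container's published ports are accepting connections is a small socket probe. This sketch assumes the port mapping from the `docker run` command (8080 combined UI, 8501 Streamlit, 6080 noVNC, 5900 VNC); the helper names are my own.

```python
import socket

# Ports published by the docker run command; labels describe what each one serves.
DEMO_PORTS = {8080: "combined web UI", 8501: "Streamlit chat", 6080: "noVNC desktop", 5900: "VNC"}


def is_listening(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or unreachable
        return False


if __name__ == "__main__":
    for port, label in DEMO_PORTS.items():
        status = "up" if is_listening(port) else "down"
        print(f"{port:>5} {label:<16} {status}")
```

An open port only shows that something is listening; sending a chat message is still the real end-to-end check.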

By default, it has access to a few apps, including Firefox, a spreadsheet, a terminal, a PDF viewer, and a calculator.

Let’s see how Computer Use works

Example 1: Find the top 5 movies and make a CSV

I started with a simple internet search: I asked it to find the top five movies of all time. The model has access to the computer tool, which lets it move the cursor and click on elements on the screen.

The model breaks down the prompt and reasons step by step about how to complete the task.

It decided to visit MyAnimeList using Firefox.

From each screenshot, it calculates the coordinates of the element it needs and moves the cursor there.

Each tool call specifies an action, and the inputs that accompany it depend on the action type. For instance, a “type” action comes with a text input, while a “mouse_move” action comes with coordinates.
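To make the action/input pairing concrete, here is a hypothetical executor that validates a `computer` tool input and turns it into an `xdotool` command line. It assumes the October 2024 action names (`key`, `type`, `mouse_move`, `left_click`, and so on) with `text` and `coordinate` fields, and it assumes the desktop is driven by `xdotool`; the mapping and the 12 ms typing delay are my own simplifications. It only builds the command and does not run it.

```python
# Extra fields each action requires (a subset of the October 2024 action set).
REQUIRED_INPUTS = {
    "key": {"text"},
    "type": {"text"},
    "mouse_move": {"coordinate"},
    "left_click_drag": {"coordinate"},
    "left_click": set(),
    "right_click": set(),
    "middle_click": set(),
    "double_click": set(),
    "screenshot": set(),
    "cursor_position": set(),
}

# xdotool mouse buttons: 1 = left, 2 = middle, 3 = right.
CLICK_ARGS = {
    "left_click": ["click", "1"],
    "middle_click": ["click", "2"],
    "right_click": ["click", "3"],
    "double_click": ["click", "--repeat", "2", "1"],
}


def to_xdotool(tool_input: dict) -> list[str]:
    """Validate one computer-tool input and build the matching xdotool argv."""
    action = tool_input.get("action")
    if action not in REQUIRED_INPUTS:
        raise ValueError(f"unknown action: {action!r}")
    missing = REQUIRED_INPUTS[action] - set(tool_input)
    if missing:
        raise ValueError(f"{action} is missing {sorted(missing)}")
    if action == "mouse_move":
        x, y = tool_input["coordinate"]
        return ["xdotool", "mousemove", "--sync", str(x), str(y)]
    if action == "type":
        return ["xdotool", "type", "--delay", "12", tool_input["text"]]
    if action == "key":
        return ["xdotool", "key", tool_input["text"]]
    if action in CLICK_ARGS:
        return ["xdotool", *CLICK_ARGS[action]]
    # Drags, screenshots and cursor queries need more than one command.
    raise NotImplementedError(f"{action} is not handled by this sketch")


if __name__ == "__main__":
    print(to_xdotool({"action": "mouse_move", "coordinate": [512, 40]}))
```

Validating inputs before executing anything means a malformed tool call can be returned to the model as an error instead of producing a stray click.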

Based on the original prompt, screenshots, and reasoning, the model decides which actions to take.
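This decide-act-observe cycle can be sketched as a loop: send the conversation, execute every `tool_use` block the model returns, append the results as `tool_result` blocks, and repeat until the model replies without asking for a tool. In this sketch, `call_model` and `execute` are hypothetical stand-ins for the API call and the desktop executor, and messages are plain dicts shaped like the Messages API content blocks.

```python
from typing import Any, Callable

Block = dict[str, Any]


def run_agent(
    prompt: str,
    call_model: Callable[[list[dict]], list[Block]],
    execute: Callable[[str, dict], str],
    max_turns: int = 20,
) -> list[dict]:
    """Run the tool loop until the model answers without a tool call.

    call_model: hypothetical wrapper around the API that returns content blocks.
    execute: hypothetical executor that performs one tool call and returns its result.
    """
    messages: list[dict] = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        blocks = call_model(messages)
        messages.append({"role": "assistant", "content": blocks})
        tool_calls = [b for b in blocks if b.get("type") == "tool_use"]
        if not tool_calls:
            return messages  # the model produced a final answer
        # Each tool result must reference the id of the tool_use it answers.
        results = [
            {
                "type": "tool_result",
                "tool_use_id": call["id"],
                "content": execute(call["name"], call["input"]),
            }
            for call in tool_calls
        ]
        messages.append({"role": "user", "content": results})
    raise RuntimeError(f"no final answer after {max_turns} turns")
```

Note that the whole `messages` list, screenshots included, is sent again on every turn, which is a big part of why long tasks get expensive; the `max_turns` cap is one simple guard against a model that keeps retrying.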

The model moves the cursor, opens Firefox, finds the address bar, types in the web page's address, scrolls down the page, and outputs the answers.

As you can see, the model successfully fetched the movies. Next, I asked it to create a CSV file of the films (this time, I asked for the top 10).

The model created and populated the file using the shell (bash) tool and then opened it in LibreOffice.
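The bash tool is simpler than the computer tool: the model sends a command, and the agent runs it and returns the output. Below is a minimal, hypothetical executor built on `subprocess`; it assumes a tool input of the form `{"command": "..."}` and a 30-second timeout of my choosing. Unlike a persistent shell session, each call here starts a fresh `bash`, so `cd` or exported variables do not carry over, and in practice this should only ever run inside a sandbox such as the demo's container.

```python
import subprocess


def run_bash(tool_input: dict, timeout: float = 30.0) -> str:
    """Run the command from a bash tool call and return its output (sketch)."""
    command = tool_input["command"]
    try:
        proc = subprocess.run(
            ["bash", "-c", command], capture_output=True, text=True, timeout=timeout
        )
    except subprocess.TimeoutExpired:
        return f"error: command timed out after {timeout}s"
    output = proc.stdout
    # Surface errors to the model so it can correct itself on the next turn.
    if proc.stderr:
        output += f"\n[stderr]\n{proc.stderr}"
    if proc.returncode != 0:
        output += f"\n[exit code {proc.returncode}]"
    return output


if __name__ == "__main__":
    print(run_bash({"command": "echo rank,title"}))
```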

So far, the model has executed the commands successfully. When it gets something wrong, it keeps retrying until it succeeds, which right now can get very expensive even for minor tasks.
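Retries hurt because every turn re-sends the entire history, screenshots included, so input tokens grow roughly quadratically with the number of steps. The back-of-the-envelope sketch below assumes Claude 3.5 Sonnet's list price of $3 per million input tokens and $15 per million output tokens, Anthropic's rule of thumb that an image costs about width × height / 750 tokens, and made-up per-step figures; it ignores prompt caching and the extra system-prompt and tool-definition tokens.

```python
def screenshot_tokens(width: int, height: int) -> int:
    """Approximate image tokens using the width * height / 750 rule of thumb."""
    return round(width * height / 750)


def estimate_cost(
    steps: int,
    tokens_added_per_step: int,
    output_tokens_per_step: int,
    base_prompt_tokens: int = 0,
    input_price_per_m: float = 3.0,
    output_price_per_m: float = 15.0,
) -> float:
    """Dollar cost of an agent run where each turn re-sends the growing history."""
    total_input = 0
    for step in range(1, steps + 1):
        # Turn `step` carries the base prompt plus everything accumulated so far.
        total_input += base_prompt_tokens + step * tokens_added_per_step
    total_output = steps * output_tokens_per_step
    return (total_input * input_price_per_m + total_output * output_price_per_m) / 1_000_000


if __name__ == "__main__":
    per_step = screenshot_tokens(1024, 768) + 100  # one screenshot plus some text (assumed)
    for steps in (10, 30, 60):
        print(steps, f"${estimate_cost(steps, per_step, 150, base_prompt_tokens=2000):.2f}")
```

Doubling the number of steps roughly quadruples the input bill, so a task that needs several retries costs far more than one that succeeds on the first pass.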

Let’s see another example.

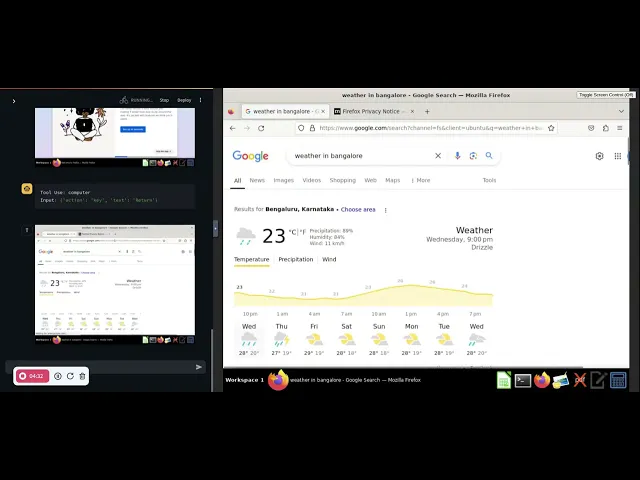

Example 2: Find the best restaurants based on the City's Weather

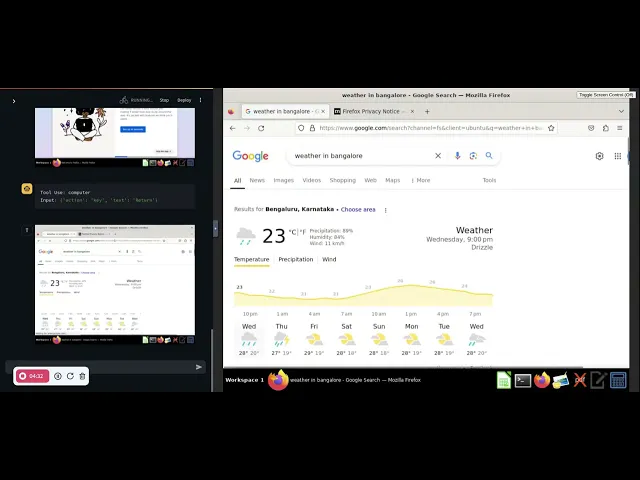

This time, I asked it to find the best restaurants in Bengaluru and check the city's weather. It successfully searched the web for the top restaurants and pulled the weather from AccuWeather.

Example 3: Ordering food online

I was hungry, so I asked Claude to order me a burger from Wendy's and gave it my login credentials. However, the model refused to proceed.

Example 4: Purchasing from Amazon

I then asked Claude to order me a pair of shorts from Amazon, but this time, I only asked it to search for the product and add it to the cart, which it accomplished.

Next, I asked it to log in and make the purchase, and, as expected, it refused. It seems you cannot ask it to do anything that involves handling sensitive information such as credentials or payment details.

What future holds for Agents?

The release of Computer Use and the tone of Anthropic's blog post make it clear that the company is betting big on an agentic future. We can expect more labs to release models optimized for computer interaction. It will be interesting to see OpenAI's response to Computer Use.

Final Verdict

The new Sonnet 3.5 is phenomenal at locating the coordinates of elements in a screenshot, and its tool calling seems to have improved. However, the computer tool itself could use some refinement.

At this stage, the model still needs improvement. As the Claude team also pointed out, it is expensive, slow, and can hallucinate during execution.

Running these initial experiments cost me around $30, so it is far from production-ready. Nonetheless, the future looks promising. Smaller models like Haiku gaining computer-use abilities could be game-changers. We also expect AI labs like DeepSeek and Qwen to release open-source models optimized for computer use.

Anyway, the future looks exciting, and we will be looking forward to it.

We at Composio are building tools optimized for LLMs, including tools for computer use. In one line of code, you can connect over 100 tools with the agentic framework of your choice, such as LlamaIndex, Autogen, or CrewAI. We also let you spawn a cloud Docker container for Computer Use in a single line of code.

If you are building around agents, we can manage authentication and integration. For more, check out the documentation and GitHub.
