Using the Firecrawl MCP server with Claude Code for web scraping

Have you ever needed to scrape data from websites? Perhaps for a side project, research, or to automate a tedious task? You probably know the pain: setting up scraping frameworks, writing code, dealing with endless configuration, and wrestling with the messy, unstructured data you get back. It's enough to make anyone want to throw their computer out the window.

Literally me throwing my laptop at my employer's face if MCPs weren't there!!

That's when you might have searched for tools like Firecrawl. If you haven't heard of it, Firecrawl is a tool that turns websites into structured, LLM-ready data with just a simple crawl. No more fighting with HTML parsing or weird edge cases - just write a prompt and let it do the work.

But what if you could take it a step further? Could you combine Firecrawl's powerful web scraping with Claude Code's AI automation and run the whole thing right from your terminal? In this article, we'll show you exactly how to do that - so you can automate web data extraction and analysis without the usual headaches.

Before we get into the details, let's take a brief look at what MCP and Firecrawl are, and how Composio's Firecrawl MCP lets us automate web scraping whenever needed.

What is MCP?

Think of MCP as a bridge that connects all your SaaS tools to your AI agent. It acts like an adapter, enabling your AI agent (Client) to understand and interact with your tools.

According to Anthropic (the team behind Claude and MCP),

“MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.”

credits: modelcontextprotocol.io

What is Firecrawl?

Firecrawl is an AI-powered web crawler that can fetch and process web content into structured, machine-readable formats (like markdown, JSON, or HTML). It's designed to integrate with AI tools, making it a good fit for web automation where an LLM needs real-time or contextual data from the web.

What is Firecrawl MCP by Composio?

Composio provides an MCP (Model Context Protocol) server that enables LLMs, such as Claude and Cursor, to interact with Firecrawl. It provides an interface layer to manage authentication (via API keys, etc.) and handles the data exchange between the LLM and Firecrawl. What can you do with it? You can automate web scraping, data extraction, and content gathering to index the site and gain a better understanding of the content.

The MCP layer provides a set of tools that you can use to interact with Firecrawl. Some of the tools are:

FIRECRAWL_CRAWL_URLS: Starts a crawl job for a given URL, applying various filtering options and content extraction options.

FIRECRAWL_SCRAPE_EXTRACT_DATA_LLM: To scrape a publicly accessible URL.

FIRECRAWL_EXTRACT: To extract structured data from a web page.

FIRECRAWL_CANCEL_CRAWL_JOB: To cancel a crawl job.

FIRECRAWL_CRAWL_JOB_STATUS: Retrieves the current status and progress of a web crawl job.

And so much more. Read more about the tools here.

Now, I can literally ask Claude to crawl a site, summarise it, extract data, or even perform some analysis by generating structured data, all using natural human language, without having to write any code.
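Under the hood, an MCP client such as Claude Code turns a request like this into a JSON-RPC 2.0 `tools/call` message addressed to the server. The sketch below builds one such message for the FIRECRAWL_SCRAPE_EXTRACT_DATA_LLM tool. The helper name `build_tool_call` and the `url` argument are assumptions for illustration; the real argument schema is whatever the server advertises for that tool.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical arguments: the actual schema comes from the server's tool listing.
message = build_tool_call(
    request_id=1,
    tool_name="FIRECRAWL_SCRAPE_EXTRACT_DATA_LLM",
    arguments={"url": "https://en.wikipedia.org/wiki/How_Brown_Saw_the_Baseball_Game"},
)

print(json.dumps(message, indent=2))
```

You never write this message yourself; Claude Code picks the tool and fills in the arguments from your prompt. Seeing the shape helps when you read MCP logs or debug a failed call.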

What's Covered?

How to connect Composio's Firecrawl MCP and Claude Code in two different ways

How to configure Claude Code to manage Firecrawl jobs from your terminal

How to prompt Claude to crawl a site, scrape a page, and extract structured data

Additionally, we'll learn a few best practices for using Firecrawl MCP with Claude Code

Setting things up with no extra effort

We'll be using the following tools:

You can set up Firecrawl MCP in two ways:

Quick MCP Setup (If you're in a hurry)

Head over to Composio Firecrawl MCP and click on the Claude tab. Under Installation, hit the Generate button and run the generated command in your terminal.

The command will look something like this:

npx @composio/mcp@latest setup "https://mcp.composio.dev/partner/composio/firecrawl/mcp?customerId=[your-customer-id]" "firecrawl-lig0gc-38" --client

This command works for Cursor, Windsurf, or even plain HTTP endpoints, with slight modifications (you can follow the installation instructions on the MCP page). The only thing left is to authenticate the MCP using your Firecrawl API key.

Since the MCP is installed globally, you can copy the generated config file into your project directory with cp.

This gives you a local .mcp.json file that configures the MCP for your project.
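To confirm the copy worked, a quick sanity check is to list the servers declared in the file. The sketch below assumes the project-level `.mcp.json` keeps its servers under a top-level `mcpServers` object, which is the layout Claude Code uses for project-scoped servers; the helper name `list_mcp_servers` is hypothetical, and the entry Composio generates may carry a different name than the one you expect.

```python
import json
from pathlib import Path


def list_mcp_servers(config_path: Path) -> list[str]:
    """Return the server names declared in a project-level .mcp.json file."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    # Assumption: servers live under a top-level "mcpServers" object.
    return sorted(config.get("mcpServers", {}))


if __name__ == "__main__":
    path = Path(".mcp.json")
    if path.exists():
        print("Configured MCP servers:", list_mcp_servers(path))
    else:
        print("No .mcp.json found in the current directory.")
```

Run it from the project root. If the Firecrawl entry is missing from the output, the copy step probably targeted the wrong directory.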

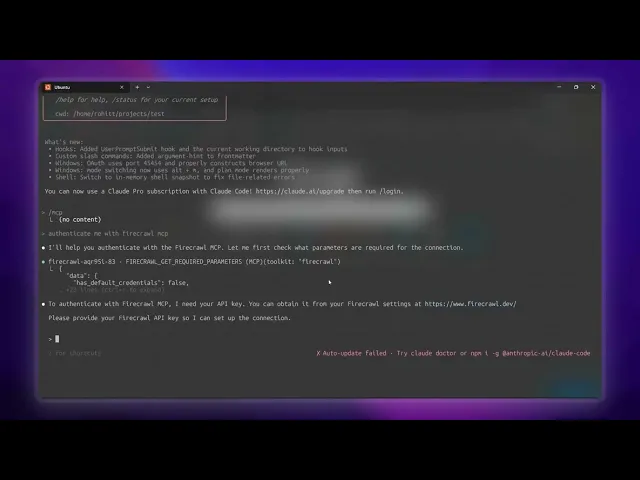

To authenticate, start a Claude Code session and ask it to authenticate the MCP with your Firecrawl API key. When prompted, enter your API key and press Enter.

That's it! Now you can start a Claude Code session and ask it to crawl a site for structured data. Check out the demo at the end to see it in action.

Dashboard Setup (If you prefer more control)

If you want more control over your setup, use the **Composio Dashboard** to manage your MCP.

You'll see a list of your existing MCPs. Search for "Firecrawl MCP" and click Integrate on the right-hand side.

Once the integration form appears, paste the API key in the API Key field and click Try connecting with Default's firecrawl. You can also provide a different user ID to use another user's key. And that's it, your MCP is ready.

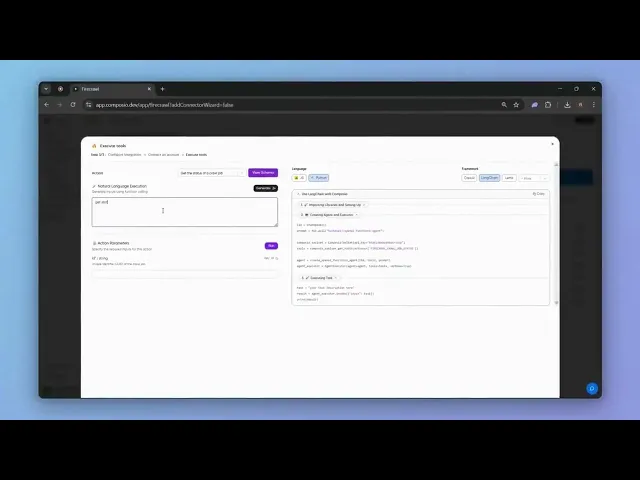

Testing the MCP:

You can test the MCP in the next step. In the Action dropdown, select "Scrape URL" and pass this prompt:

Generate a valid JSON input payload for Firecrawl's `Scrape URL` action based on the following:

URL: https://en.wikipedia.org/wiki/How_Brown_Saw_the_Baseball_Game

Requirements:
- The `formats` field must include `"json"`.
- Since `json` is requested, include the required `jsonOptions` field (it can be empty `{}` or include common options like `removeScripts`).
- Set `onlyMainContent` to `true` to get only the main article.
- Use a `timeout` of 30000 milliseconds.
- Use `waitFor` value of `0`.
- You may leave `actions`, `excludeTags`, `includeTags`, and `location` empty.

Output a valid JSON object for the input payload.

It'll generate a valid JSON input payload for the Scrape URL action. You can now hit "Run" to see the output.
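For reference, here is a minimal sketch of what a payload meeting those requirements might look like, built in Python and printed as JSON. The field names come from the prompt above; the `url` key and the empty-value types chosen for the optional fields are assumptions, so the payload the dashboard generates may differ in detail.

```python
import json

payload = {
    # Assumption: the target page is passed under a "url" key.
    "url": "https://en.wikipedia.org/wiki/How_Brown_Saw_the_Baseball_Game",
    "formats": ["json"],
    "jsonOptions": {},        # required because "json" is requested; may stay empty
    "onlyMainContent": True,  # keep only the main article
    "timeout": 30000,         # milliseconds
    "waitFor": 0,
    # Optional fields the prompt allows to stay empty; these empty-value
    # types are assumptions.
    "actions": [],
    "excludeTags": [],
    "includeTags": [],
    "location": {},
}

print(json.dumps(payload, indent=2))
```

Comparing the generated payload against this checklist is a quick way to spot a missing `jsonOptions` field before you hit "Run".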

Once done, create a new MCP server by going to "Integrations" tab → "MCP Servers" → "Create Server". Give a name to your server and create it. Now, you can use the generated command to start a new Claude Code session and ask it to crawl a site and get the data in a structured format. Or you can even use it with Cursor, Windsurf, or any other IDE that supports MCP.

Using Firecrawl MCP with Claude Code

Follow along with the demo to see how we can use Firecrawl MCP with Claude Code. Although the demo uses the "Go to MCP" method, the same approach works with the MCP server you created in the previous step.

Conclusion

Firecrawl MCP makes it easy to scrape structured content from any site, whether you want a quick CLI setup or a more controlled server setup via the dashboard. Combine it with Claude Code, and you've got a powerful AI-powered scraping workflow at your fingertips.
