Gemini 2.5 Pro and Claude Sonnet are two model series that are arguably ahead of the competition in coding performance, and a recent update made Gemini 2.5 Pro even better than before.

This has always left me with a dilemma over which model to use for coding. In this blog, we will compare the two directly on agentic coding, using Sonnet 4 in Claude Code and Gemini 2.5 Pro in Jules, to see which model and coding tool make the better combination.

Let’s jump into it without any further ado!

TL;DR

If you want to jump straight to the result: yes, Claude Sonnet 4 is definitely an improvement over Claude Sonnet 3.7. In my experience it performs better than any other coding model, including Gemini 2.5 Pro, though there are exceptions (as you can see for yourself in the comparison below).

And there are cases like this as well:

Overall, you won’t go wrong choosing Claude Sonnet 4 over Gemini 2.5 Pro (for coding!).

However, all of this comes at a cost. Claude Sonnet 4 is more expensive, at $3 per million input tokens and $15 per million output tokens, while Gemini 2.5 Pro costs $1.25 per million input tokens and $10 per million output tokens (for prompts up to 200K tokens).
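To see what those rates mean in practice, here is a small cost calculator using the per-million-token list prices quoted above. The workload (1M input tokens, 200K output tokens) is a made-up example rather than a measurement from these tests, and prices can change, so treat the numbers as illustrative.

```python
# Per-million-token list prices (USD) quoted in the text.
# Gemini 2.5 Pro's rates apply to prompts up to 200K tokens.
PRICES_PER_MILLION = {
    "claude-sonnet-4": {"input": 3.00, "output": 15.00},
    "gemini-2.5-pro": {"input": 1.25, "output": 10.00},
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the API cost in USD for one workload at list prices."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical agentic session: 1M tokens read, 200K tokens written.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${cost_usd(model, 1_000_000, 200_000):.2f}")
# → claude-sonnet-4: $6.00
# → gemini-2.5-pro: $3.25
```

For this mix, Sonnet 4 comes out at roughly 1.8x the cost of Gemini 2.5 Pro; depending on how input-heavy your workload is, the ratio falls between 1.5x (all output) and 2.4x (all input).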

That said, I would judge on overall model quality and pricing rather than coding alone, and on that basis Gemini 2.5 Pro is one of the most affordable yet powerful models available.

So, unless you are using the model for intensive coding tasks, I suggest sticking with Gemini 2.5 Pro.

Brief on Claude Code

Claude Code is a command-line tool developed by Anthropic that runs directly in your terminal. It utilises agentic search to understand your project, including its dependencies, without requiring you to manually select files for context. The best part is that it integrates seamlessly with GitHub, GitLab, and other development tools, letting you read issues, write code, create pull requests (PRs), and even run tests directly from the terminal.

“Claude Code’s understanding of your codebase and dependencies enables it to make powerful, multi-file edits that actually work.” – Anthropic

Here’s a quick demo to get an idea of how powerful this tool is and what it can do:

Brief on Jules Coding Agent

Jules is an AI coding agent from Google. Think of it as Claude Code, but running on the web rather than locally.

If you’ve been following Google I/O, you’re likely already familiar with it. If not, check out this quick demo:

One downside of this agent is that you have to connect it to GitHub and give it access to your repository before you can use it. That is how it gets context on your codebase, which is exactly why we’re using it here, just as we use Claude Code.

Testing in a real-world project

As I mentioned, we’ll be testing these models on a real repository, asking them to implement changes, fix bugs, and demonstrate their understanding of the codebase.

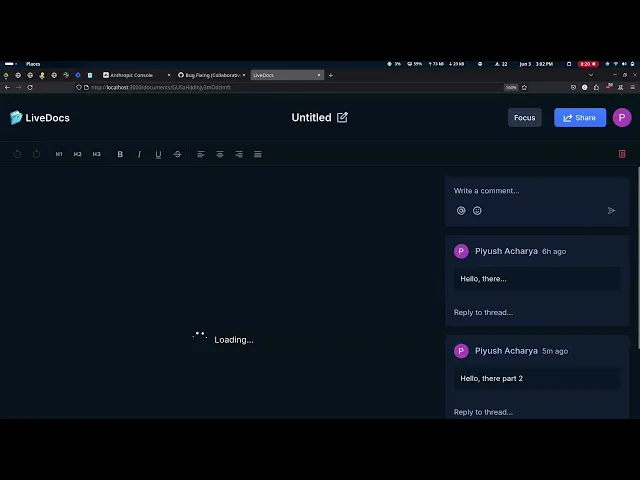

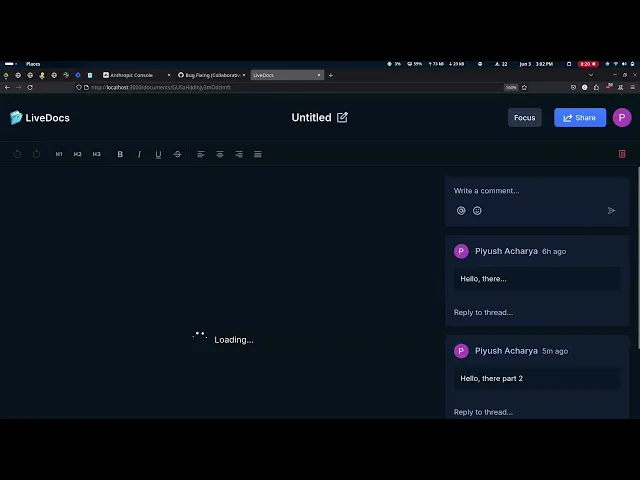

Here’s the project I used: Collaborative Editor. Think of it as a Google Docs clone that lets users share and edit documents collaboratively in real time. All kudos to JavaScript Mastery. ✌️

It’s a fairly complex project, so it should be a good test for the models.

1. Codebase Understanding

Let’s start off with something simple: we’ll ask the models to explain the codebase.

With Claude Code, this is easy. You don’t need a special prompt; just run /init, which generates a CLAUDE.md file containing everything the model understands about the codebase.

Response from Claude Sonnet 4

You can find the response it generated here: Link

It goes without saying that the model can read the project well, as that’s the main purpose of Claude Code: to understand the codebase without providing manual context. Claude Sonnet 4 does a pretty good job at it.

There’s not much more I can add; it simply went through all the files and wrote a clean CLAUDE.md file with great understanding.

Response from Gemini 2.5 Pro

You can find the response it generated here: Link

The same goes for Gemini 2.5 Pro: it did essentially the same thing and wrote a solid README.md with everything correct.

The only visible difference between the two responses is that Claude Sonnet 4’s output is a bit more thorough and explains the key architectural patterns, while Gemini 2.5 Pro’s README.md reads like what you’d find in any other GitHub repository (clean and concise).

Beyond that, I don’t see any major difference between the two outputs; the rest is just wording.

2. Build an AI Agent with Composio

In this section, I’ll try to build a fully functioning AI agent with each model, using follow-up prompts if necessary, to see which one reaches the desired result more efficiently. The point is to test whether these models can work with the latest packages.

Prompt: Please create an AI agent for Google Sheets that assists with various Google Sheets tasks. Use Composio for the integration and do it in Python. Use packages like openai, composio_llamaindex, and llamaindex. That gets the job done. Also, please use uv as the package manager. Refer to the docs if required: https://docs.composio.dev/getting-started/welcome

Response from Claude Sonnet 4

You can find the raw response it generated here: Link

I didn’t expect the model to be this good with the code. It wrote the code in one shot, and I didn’t have to iterate with follow-up prompts. It followed all the right approaches, closely matching how the Composio docs suggest building a Google Sheets agent.

Response from Gemini 2.5 Pro

It was quick this time: I got a working AI agent for Google Sheets in 6 minutes. That surprised me, since writing over 2,500 lines of code in one shot without errors is genuinely impressive. Believe me, I’ve tried this with Claude 3.7 Sonnet, and it failed badly.

You can find the response Claude 3.7 Sonnet generated here: Link

It’s impressive that the model could refer to the documentation, use the packages I asked for, adhere to best practices, and ultimately produce a working AI agent.
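For readers curious about what such an agent does under the hood: the LLM chooses a tool and its arguments, and the framework executes the call and feeds the result back to the model. In the prompt above, Composio supplies the Google Sheets tools and LlamaIndex runs that loop. The stdlib-only sketch below shows just the dispatch step. The in-memory sheet, the tool names append_row and read_sheet, and the call format (loosely modeled on OpenAI function calls, whose arguments arrive as a JSON string) are hypothetical and are not Composio’s or LlamaIndex’s API.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory "sheet" standing in for a real Google Sheet.
SHEET: list[list[str]] = [["name", "score"]]


def append_row(values: list[str]) -> str:
    """Hypothetical tool: add one row to the sheet."""
    SHEET.append(values)
    return f"appended row {len(SHEET)}"


def read_sheet() -> list[list[str]]:
    """Hypothetical tool: return every row in the sheet."""
    return SHEET


# Registry mapping tool names (as the model sees them) to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "append_row": append_row,
    "read_sheet": read_sheet,
}


def dispatch(tool_call: dict[str, str]) -> Any:
    """Run one model-issued call of the form {"name": ..., "arguments": "<json>"}."""
    fn = TOOLS.get(tool_call["name"])
    if fn is None:
        # Report unknown tools back to the model instead of crashing the loop.
        return {"error": f"unknown tool {tool_call['name']}"}
    args = json.loads(tool_call.get("arguments") or "{}")
    return fn(**args)


# Simulated tool calls, as an LLM might emit them.
print(dispatch({"name": "append_row", "arguments": '{"values": ["Ada", "42"]}'}))
# → appended row 2
print(dispatch({"name": "read_sheet", "arguments": "{}"}))
```

In a real agent, the return value of dispatch would be sent back to the model as the tool result so it can decide on the next step.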

3. Find the Buggy Commit and Fix It

This is a real problem that most of us face, especially those of us who don’t write many tests. We make a change and it works, but then a later commit quietly breaks the functionality. 😴
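Done by hand, the standard way to track down a regression like this is git bisect: you mark one known-good and one known-bad commit, and Git binary-searches the history between them, so you only test about log2(n) commits. The plain-Python sketch below shows that same search over a linear history; the commit IDs and the rename_works check are hypothetical stand-ins for checking out each commit and testing the app.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str], is_good: Callable[[str], bool]) -> str:
    """Binary-search an oldest-to-newest commit list for the first bad commit.

    Assumes commits[0] is known good, commits[-1] is known bad, and that once a
    commit is bad, every later commit is bad too (the same assumption git bisect makes).
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] is good, commits[hi] is bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]


# Hypothetical history in which the regression lands in commit "c5".
history = ["c0", "c1", "c2", "c3", "c4", "c5", "c6", "c7"]
checked: list[str] = []


def rename_works(commit: str) -> bool:
    """Stand-in for 'check out this commit and verify renaming still works'."""
    checked.append(commit)
    return history.index(commit) < 5


print(first_bad_commit(history, rename_works))  # → c5
print(len(checked), "commits tested")           # → 3 commits tested
```

With eight commits, the search pinpoints the culprit after testing only three of them.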

Let’s see if these models can find and fix the issues: I’ll introduce a few bugs into the codebase, commit them on top, and ask the models to fix them.

Prompt: Hey, the project was working fine, but recently, a few bugs were introduced. A document’s name does not update even when it is renamed, and a user appears to be able to remove themselves as a collaborator from their document. Additionally, a user who should not have permission to view a document can still access it. Please help me fix all of this.
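Each of these bug reports corresponds to a simple invariant the app should enforce. The sketch below states those invariants in plain Python against a hypothetical in-memory Document model; the names Document, can_view, rename, and remove_collaborator, and the "editor"/"viewer" roles, are illustrative assumptions and are not taken from the Collaborative Editor’s codebase.

```python
from dataclasses import dataclass, field


# Hypothetical document model used only to illustrate the invariants.
@dataclass
class Document:
    owner: str
    title: str
    collaborators: dict[str, str] = field(default_factory=dict)  # user -> "editor" | "viewer"


def can_view(doc: Document, user: str) -> bool:
    # Bug 3: only the owner and explicitly invited collaborators may open the document.
    return user == doc.owner or user in doc.collaborators


def rename(doc: Document, user: str, new_title: str) -> None:
    # Bug 1: a rename by the owner or an editor must actually update the stored title.
    if user != doc.owner and doc.collaborators.get(user) != "editor":
        raise PermissionError(f"{user} cannot rename this document")
    doc.title = new_title


def remove_collaborator(doc: Document, actor: str, target: str) -> None:
    # Bug 2: only the owner manages access, and the owner cannot remove themselves.
    if actor != doc.owner:
        raise PermissionError(f"{actor} cannot manage collaborators")
    if target == doc.owner:
        raise ValueError("the owner cannot be removed from their own document")
    doc.collaborators.pop(target, None)


doc = Document(owner="alice", title="Untitled", collaborators={"bob": "editor"})
rename(doc, "bob", "Q3 Plan")
print(doc.title)             # → Q3 Plan
print(can_view(doc, "eve"))  # → False
```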

Response from Claude Sonnet 4

You can find the raw response it generated here: Link

Great: it fixed all the bugs I had intentionally introduced in those commits.

Even better, it added an item to its TODO list to run the lint and test commands, which is a good touch, as it increases overall trust in the changes it made.

Response from Gemini 2.5 Pro

The result was pretty much the same as with Claude Sonnet 4: Gemini 2.5 Pro fixed all of the issues. Once you approve the changes, Jules commits them and pushes them to a new branch on your GitHub. This is handy but also slow, as everything runs inside a virtual machine (VM).

Claude Code accomplished the same task within 2-3 minutes using Claude Sonnet 4, whereas Jules with Gemini 2.5 Pro took over 10 minutes.

But we’re interested in the model itself, and here Gemini 2.5 Pro performed well, closely matching Claude Sonnet 4.

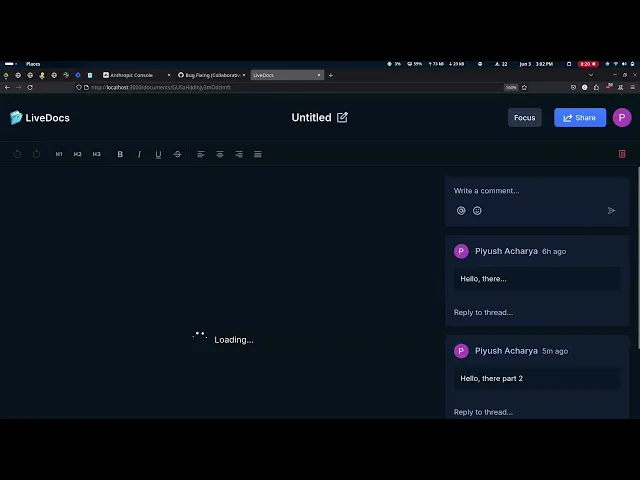

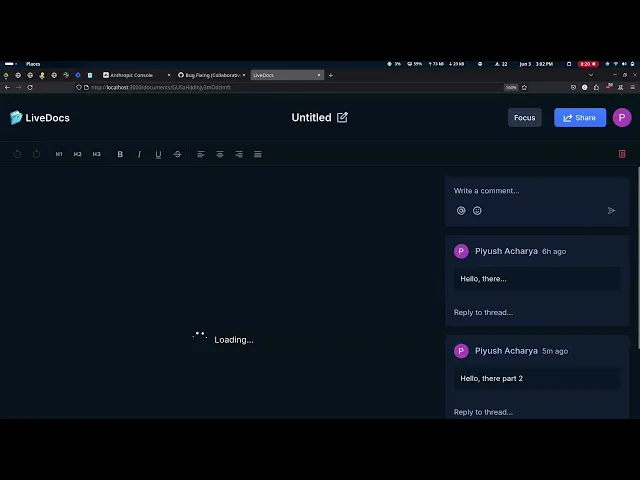

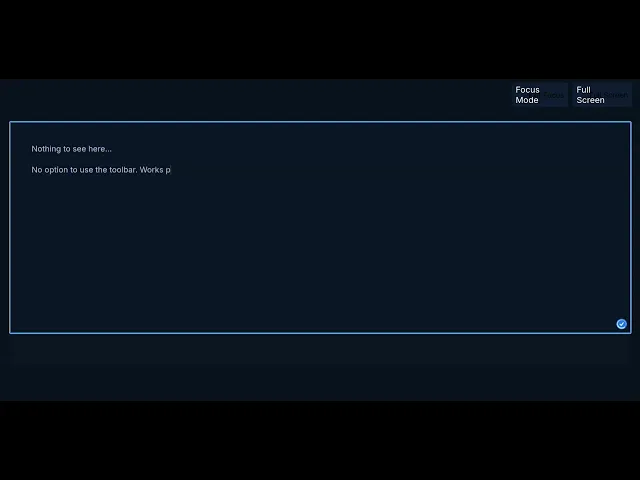

4. Add a New Feature (Focus Mode)

Both models did well at identifying and fixing bugs; now let’s see how well they can add a new feature on top of the existing code.

Prompt: I need you to implement “Focus Mode” in the doc. Here’s what you need to do: Hide the navigation bar, full-screen toggle option, and formatting toolbar until selection. Also, hide the comments that are displayed on the side (the document needs to occupy the entire width of the page).
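The visibility rules in this prompt boil down to a small pure function of two inputs: whether Focus Mode is on and whether text is selected. The Python sketch below covers the navigation bar, formatting toolbar, comments sidebar, and full-width rules; the FocusLayout and layout_for names are hypothetical, and since the real project is a web app, this only illustrates the logic rather than showing either model’s implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FocusLayout:
    """Which pieces of editor chrome are visible (hypothetical names)."""
    navbar: bool
    toolbar: bool
    comments_sidebar: bool
    full_width: bool


def layout_for(focus_mode: bool, has_selection: bool) -> FocusLayout:
    # Pure function of UI state: it never reads or writes document content.
    if not focus_mode:
        return FocusLayout(navbar=True, toolbar=True, comments_sidebar=True, full_width=False)
    return FocusLayout(
        navbar=False,            # navigation bar hidden in Focus Mode
        toolbar=has_selection,   # formatting toolbar shown only while text is selected
        comments_sidebar=False,  # side comments hidden...
        full_width=True,         # ...so the document spans the full page width
    )


print(layout_for(focus_mode=True, has_selection=False).toolbar)  # → False
print(layout_for(focus_mode=True, has_selection=True).toolbar)   # → True
```

Keeping this decision separate from the document state means toggling Focus Mode only changes which chrome is rendered and never swaps out the document itself, which matters because text typed in Focus Mode has to persist.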

Response from Claude Sonnet 4

Here’s the output of the program:

Once again, Claude Sonnet 4 finished in a matter of seconds. It got almost everything I asked for right, but you can never be fully sure with an AI model, right?

Everything worked properly except one thing: the text I typed in the document in Focus Mode did not persist.

As for code quality, it’s excellent and follows best practices; most importantly, it’s not junior-level code. It kept everything minimal and to the point.

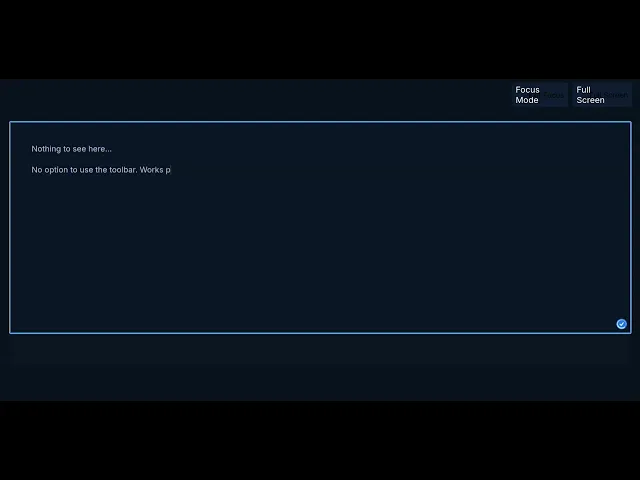

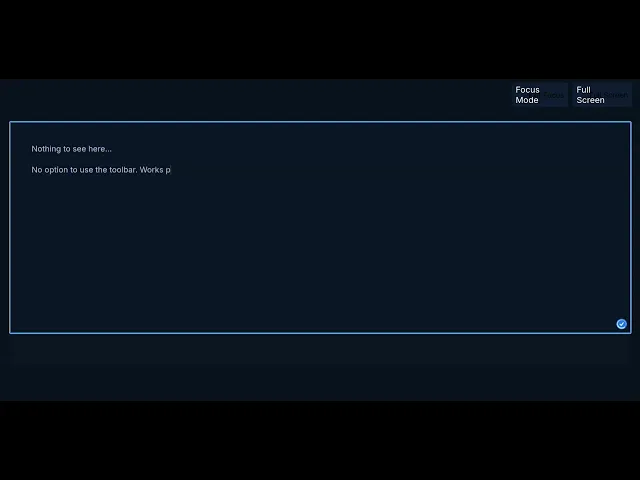

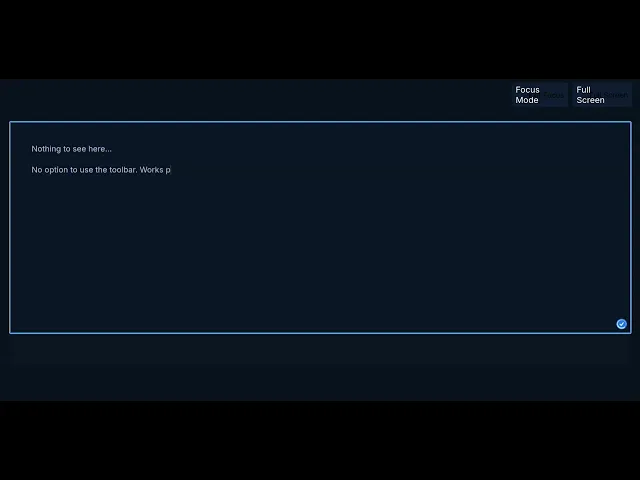

Response from Gemini 2.5 Pro

For some reason, Jules kept running into a problem midway through adding the feature. I tried more than three times and got the same result each time, and I’m not sure of the exact cause.

As a last resort, I turned to Google AI Studio and provided the context manually; there, it was able to implement the functionality I requested. However, the UI was not very polished, as you can see in the demo below. 👇

Overall, it added a well-functioning feature with no logic bugs, unlike Claude Sonnet 4’s version, and that’s what counts.

Gemini 2.5 Pro takes this round, even though it had to run outside Jules with manually provided context.

Conclusion

Claude Sonnet 4 is superior to Gemini 2.5 Pro in coding. It may not be by a large margin, but it’s a rock-solid model for coding and a clear improvement over Sonnet 3.7, which lacked the scoped editing ability of Sonnet 3.5; Sonnet 4 does better in this regard.
