Deepseek v3 0324: Finally, the Sonnet 3.5 at Home

Deepseek has quietly released Deepseek v3 0324, a new checkpoint, with no marketing or hype: just a tweet, a model card, and a 641GB MIT-licensed open-weight base model. Very Deepseek-like. Arriving alongside Gemini 2.5 and ChatGPT image generation, the launch went almost entirely under the radar.

That they released potentially the best base model and have made only a single tweet so far is beyond comprehension; it’s time the Whales hired a great PR team. They could have named it Deepseek v3.5 and wiped another round of billions from Big Tech stocks.

The original v3 was released three months ago, around Christmas. Now we have another base model with increased capability. From the initial vibes, it seems we finally have the Sonnet 3.5 at home (not literally, but you get my point). I blogged about Deepseek v3, which was a great model on par with GPT-4o, but this one is a massive upgrade over it.

v3 matched GPT-4o, and v3 0324 is on par with Claude 3.5 Sonnet. It is eating into the moat of most closed models. A comment on Hacker News summarizes this perfectly: “Sorry, try again with a better moat”.

Table of Contents

Notes on Deepseek v3 0324

Benchmarks that matter

RL training with GRPO for better reasoning

Running it locally

Real-world performance

But how much improvement over others

Simon Willison's Pelican SVG test

3js simulation

Galaga in Python

TL;DR

If you've somewhere else to be, here's a short summary.

It's a massive upgrade over the original v3.

Slightly bigger than v3 but with an MIT license.

Better reasoning, code generation, and a better understanding of user intention thanks to RL training with GRPO.

Performs better than Claude 3.7 Sonnet on many tasks that you would care about.

Can still outperform Claude 3.5 Sonnet on many tasks, though you may find 3.5 better in some cases.

It's MIT-licensed, freely available, allows you to opt out of training, and is super cheap.

The best base LLM considering the price to performance.

Notes on Deepseek v3 0324

Unlike the last v3, which had a custom v3 license, the new Deepseek v3 checkpoint comes with an MIT license. The model also has 685B parameters, compared to 671B in the earlier v3 model. It should follow the same architecture as the original v3: Deepseek Mixture of Experts (MoE) and Multi-head Latent Attention (MLA).

Here are some of the things that have improved over the previous model, collated from Hugging Face:

Reasoning capabilities

MMLU-Pro: 75.9 → 81.2 (+5.3)

GPQA: 59.1 → 68.4 (+9.3)

AIME: 39.6 → 59.4 (+19.8)

LiveCodeBench: 39.2 → 49.2 (+10.0)

Front-end development

More aesthetically pleasing websites and game fronts

Better executable code

Function calling ability

Chinese writing ability

Chinese analysis and research capabilities
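The reasoning gains listed above are easier to compare when the absolute deltas are put next to relative ones. Here is a minimal sketch that recomputes both from the reported scores; the `scores` dictionary and the `gains` helper are illustrative names, not something from the model card.

```python
# Scores as reported in the list above: (original v3, v3 0324).
scores = {
    "MMLU-Pro": (75.9, 81.2),
    "GPQA": (59.1, 68.4),
    "AIME": (39.6, 59.4),
    "LiveCodeBench": (39.2, 49.2),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in points, relative gain in percent)."""
    absolute = round(after - before, 1)
    relative = round((after - before) / before * 100, 1)
    return absolute, relative


report = {name: gains(*pair) for name, pair in scores.items()}

for name, (absolute, relative) in report.items():
    print(f"{name:<14} +{absolute:.1f} points ({relative:.1f}% relative)")
```

Seen this way, AIME is the standout: the score rose by half of its original value, while MMLU-Pro moved the least in relative terms.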

Excelling on benchmarks that matter

Some benchmarks matter more and are better predictors of a model’s capabilities. This is not to downplay the benchmarks above, but private benchmarks often give a better picture of a model's usability in the real world.

Deepseek v3 0324 is doing exceedingly well on those too.

On Xeophon’s private benchmark, Deepseek v3 0324 is now the best non-reasoning model, even beating Claude 3.5 Sonnet.

The model came second to Claude 3.5 Sonnet on Paul Gauthier’s Aider benchmark.

On the KCores LLM arena, which tests LLMs for real-world coding performance, Deepseek v3 0324 has surpassed Claude 3.7 Sonnet and is only below Claude 3.5 Sonnet and Claude 3.7 Sonnet (thinking). To think an MIT-licenced model is outperforming the recently released

Deepseek v3 0324 made a massive 2x jump on the Misguided Attention eval. This is rather impressive, and it makes it the top non-reasoning model on that eval.

As there are no official ARC-AGI results yet, YouTuber YJxAI is doing live testing (as of writing this blog post) on the public ARC-AGI dataset. The 0324 checkpoint scores 28% on ARC-AGI, above Grok 3 and Gemini 2.0 Pro but below Sonnet 3.5 and 3.7.

RL-tuning with GRPO for better reasoning

During testing, it became evident that Deepseek v3 0324 shows much stronger reasoning than previous base models, perhaps because most mainline Deepseek models after Deepseek Math have been RL-trained with GRPO.

What is interesting is how Deepseek v3 0324 approaches the question "How many r's are there in the word 'strawberry'?" compared with Claude 3.5 and 3.7 Sonnet.
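For readers who haven't met GRPO: Deepseek has not published how this checkpoint was trained, so this is only a sketch of the general idea as I understand it from Deepseek's earlier work, not their implementation. Several answers are sampled for the same prompt, each gets a reward, and each answer's advantage is its reward normalised against the rest of its own group. The function name, the reward values, and the use of the population standard deviation are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalise each reward against the mean and spread of its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; eps avoids division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt (1.0 = correct).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Answers that beat their group's average get a positive advantage and the rest get a negative one. If every answer in a group scores the same, all advantages are zero and that group contributes no learning signal.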

Alright, let's tackle the problem: **How many r's are there in the word 'strawberry'?**

### Step 1: Understanding the Problem

First, I need to determine how many times the letter 'r' appears in the word "strawberry." This seems simple enough, but to be thorough, I'll write down the word and count each 'r' one by one.

### Step 2: Writing Down the Word

Let's write the word clearly:

S T R A W B E R R Y

### Step 3: Identifying Each Letter

Now, let's list out each letter in order and note down the 'r's.

1. **S** - First letter. Not an 'r'.
2. **T** - Second letter. Not an 'r'.
3. **R** - Third letter. This is an 'r'. (Count: 1)
4. **A** - Fourth letter. Not an 'r'.
5. **W** - Fifth letter. Not an 'r'.
6. **B** - Sixth letter. Not an 'r'.
7. **E** - Seventh letter. Not an 'r'.
8. **R** - Eighth letter. This is an 'r'. (Count: 2)
9. **R** - Ninth letter. This is another 'r'. (Count: 3)
10. **Y** - Tenth letter. Not an 'r'.

### Step 4: Verifying the Count

From the breakdown:

- The 3rd letter is 'R' - 1
- The 8th letter is 'R' - 2
- The 9th letter is 'R' - 3

No other letters in "strawberry" are 'r's.

### Step 5: Double-Checking

Sometimes, it's easy to miscount, especially with similar-looking letters or quick glances. Let me read the word again and highlight the 'r's:

S T **R** A W B E **R R** Y

Yes, the 'r's are in the 3rd, 8th, and 9th positions.

### Step 6: Considering Upper and Lower Case

The word is given in lowercase ('strawberry'), but even if it were uppercase ('STRAWBERRY'), the count would be the same since we're only looking for the letter 'r' regardless of its case.

### Step 7: Final Count

After carefully reviewing each letter, the letter 'r' appears **three times** in "strawberry."

### Potential Missteps

Initially, one might rush and miss the consecutive 'r's at the end. For example:

- Seeing 'berry' and thinking there are two 'r's there (which is correct: 'r' and 'r'), but forgetting the initial 'r' in 'straw'.

So, it's crucial to go through each letter methodically to avoid undercounting.

### Conclusion

After a thorough examination, the number of 'r's in "strawberry" is:

**There are 3 r's in the word 'strawberry'.**

The response format is similar to a reasoning model's CoT. Here are Claude 3.7's CoT traces, which resemble how Deepseek v3 0324 responded.

And this is Deepseek r1's response.

Despite not being a reasoning model, Deepseek v3 0324 appears to have been RL-trained for better reasoning performance.

In comparison, here are the responses from other non-reasoning models.

Claude 3.5 Sonnet

Let me count them explicitly: st(r)awbe(rr)y

There are 3 'r's in "strawberry"

Claude 3.7 Sonnet

I'll count the number of 'r' letters in the word "strawberry":

s-t-r-a-w-b-e-r-r-y

Going through each letter:

1. First 'r' at position 3
2. Second 'r' at position 7
3. Third 'r' at position 8

There are 3 instances of the letter 'r' in the word "strawberry"

From GPT 4.5

There are **3** r's in the word **"strawberry"**.

Deepseek v3 0324's responses are closer to those of a reasoning model than to those of other base models, and I think this is significant. A base model that can reason close to a reasoning model's level with a fraction of the tokens, and at this cost, is crazy.
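For reference, the answer every model above is working towards can be checked mechanically. This is just a quick sanity check in Python, using 1-indexed positions so the numbers line up with how the responses count letters.

```python
word = "strawberry"
letter = "r"

count = word.count(letter)
# 1-indexed positions, matching how the responses above number the letters.
positions = [i for i, ch in enumerate(word, start=1) if ch == letter]

print(count)      # 3
print(positions)  # [3, 8, 9]
```

This matches the positions in Deepseek v3 0324's step-by-step breakdown. The Claude 3.7 Sonnet response quoted above gets the count right but places the last two r's one position early.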

Running it locally

Within hours of its release, Awni Hannun had a 4-bit quantised version running on a 512 GB M3 Ultra Mac Studio at 20 tok/sec via mlx-lm, using the mlx-community/DeepSeek-V3-0324-4bit quantisation, which reduces the on-disk size to 352 GB.

If you have the machine, you can run it using Simon Willison's llm-mlx plugin for LLM.
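To put those sizes in perspective, here is a rough back-of-the-envelope calculation of the average number of bits stored per parameter, using only figures quoted in this post (685B parameters, 641 GB for the full release, 352 GB for the 4-bit build). It assumes decimal gigabytes and that the whole file is weights, so treat the results as ballpark figures.

```python
PARAMS = 685e9  # parameter count quoted for v3 0324
GB = 1e9        # assumption: sizes are decimal gigabytes


def bits_per_param(size_gb: float, params: float = PARAMS) -> float:
    """Average number of bits stored per parameter for a given on-disk size."""
    return size_gb * GB * 8 / params


full = bits_per_param(641)   # full release
quant = bits_per_param(352)  # 4-bit MLX quantisation
fits_in_memory = 352 < 512   # quantised weights vs. the 512 GB machine

print(f"full release: {full:.2f} bits/param")
print(f"4-bit build:  {quant:.2f} bits/param")
print(f"fits in 512 GB: {fits_in_memory}")
```

The full release works out to roughly 7.5 bits per parameter and the quantised build to a little over 4. That is why the latter squeezes into 512 GB of memory with room left for context, while the former does not.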

llm mlx download-model mlx-community/DeepSeek-V3-0324-4bit
llm chat -m mlx-community/DeepSeek-V3-0324-4bit

You should soon be able to run it with Ollama too, though it doesn't have the checkpoint yet.

Real-world performance

The most significant indicator of a model's performance is seeing people build interesting stuff with it.

Reddit user Cpicd found Deepseek v3 0324 outperforming Claude 3.5 Sonnet in his personal code creativity benchmark. He asked the model to "Write a raytracer that renders an interesting scene with many colourful light sources in Python."

The new Deepseek has visibly improved from its predecessor and is on par with the Sonnets.

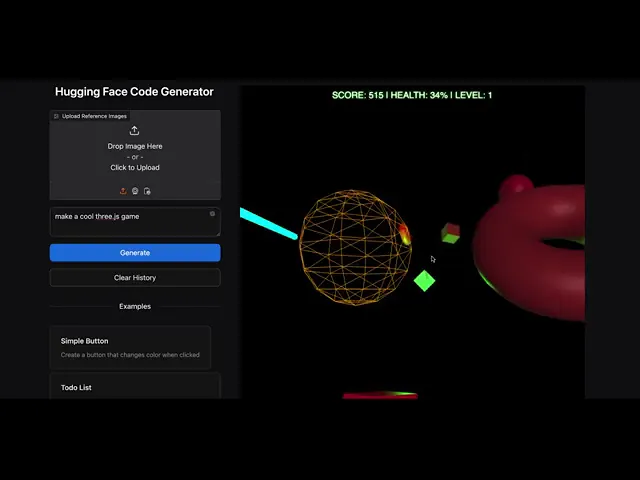

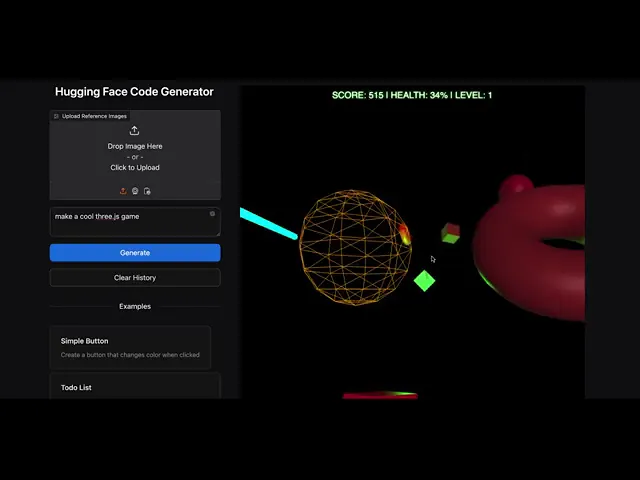

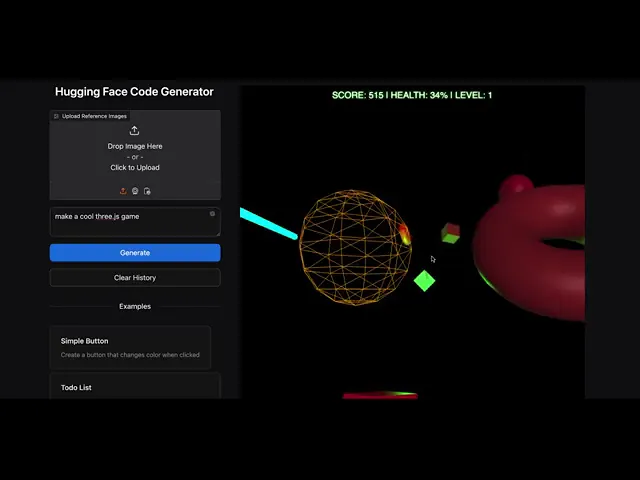

Akhaiq from Hugging Face made a 3JS game with a single prompt.

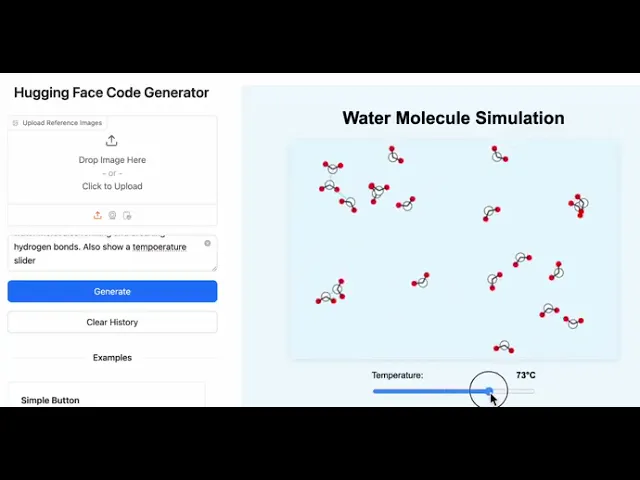

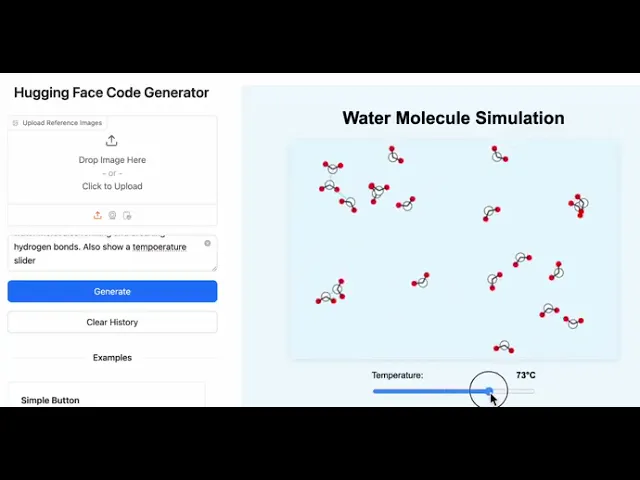

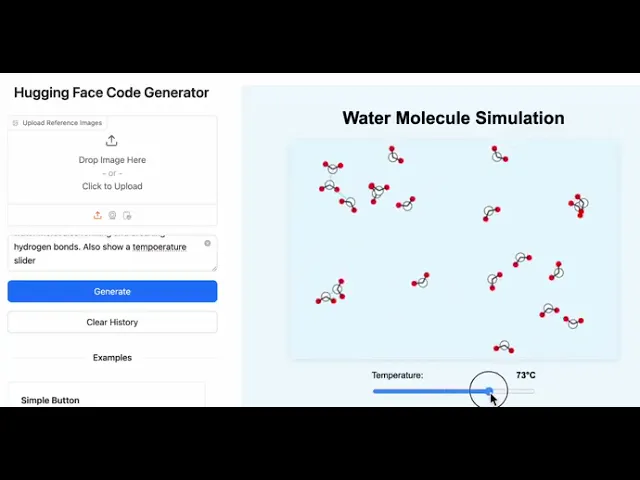

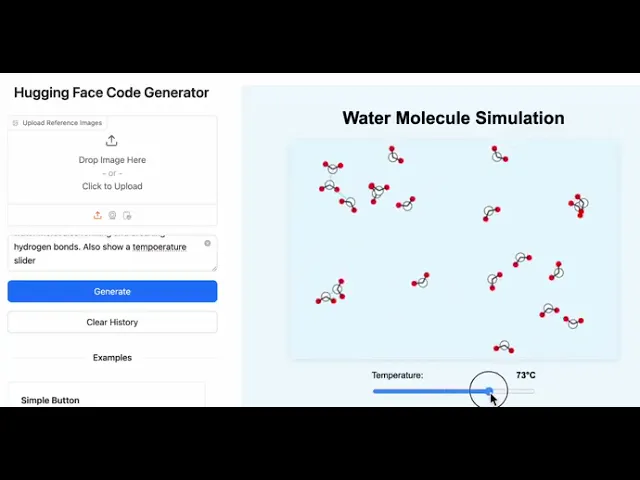

Parul Pandey created a water molecule movement simulation, where molecules form and break hydrogen bonds with temperature adjustments.

Paul Gauthier asked it to improve aider.chat, and it suggested replacing emojis with SVGs. It has some good taste.

The ball-rolling-inside-a-hexagon test is saturated. With a single prompt, Teortaxes built a ball-rolling simulator with sliders that let users adjust the parameters.

But how much of an improvement?

I have used Deepseek v3, r1, Sonnets, and even Grok. Of all, r1 stood out in creativity, Claude 3.5 Sonnet in coding + instruction following, and Grok in Grokking. So, I was as curious as you to know how good the model is.

1. Simon Willison's Pelican SVG test

First up is Simon Willison's Pelican Riding a Bicycle SVG test.

Claude 3.7 was the best, with Deepseek v3 0324 a close second. Deepseek r1 and Claude 3.5 Sonnet were pretty bad.

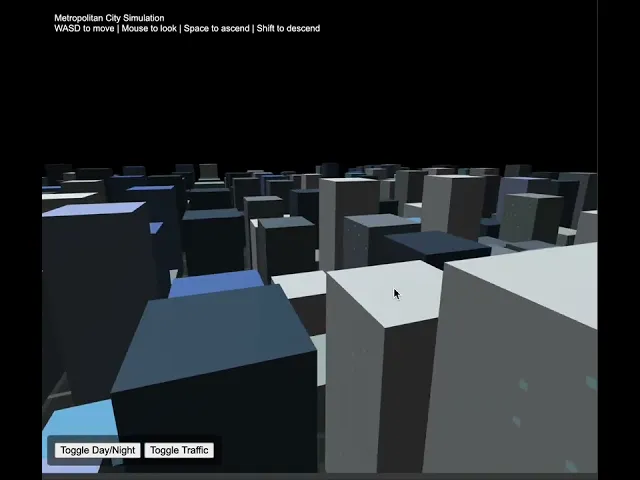

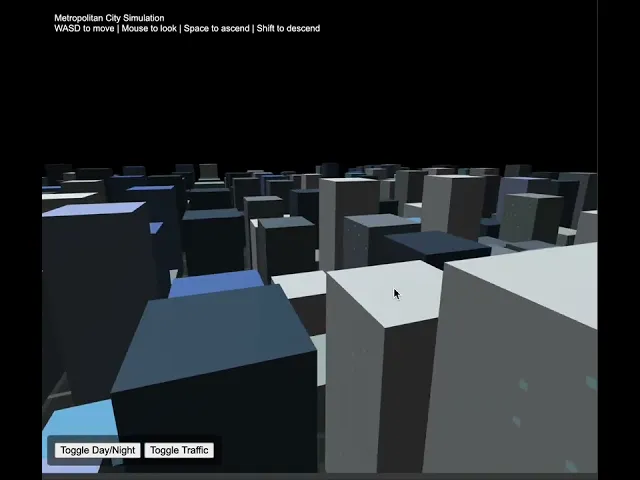

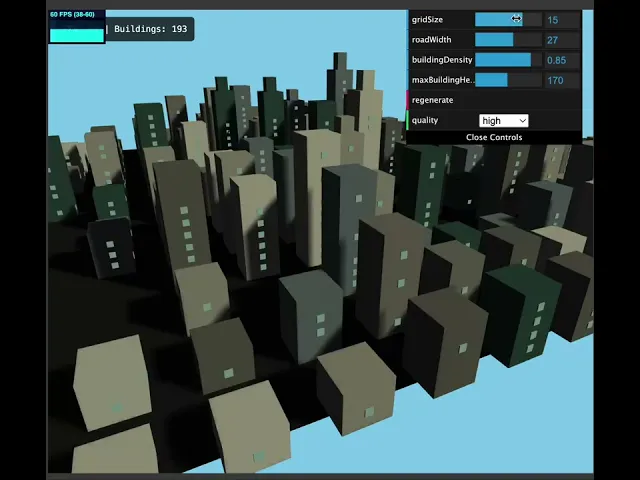

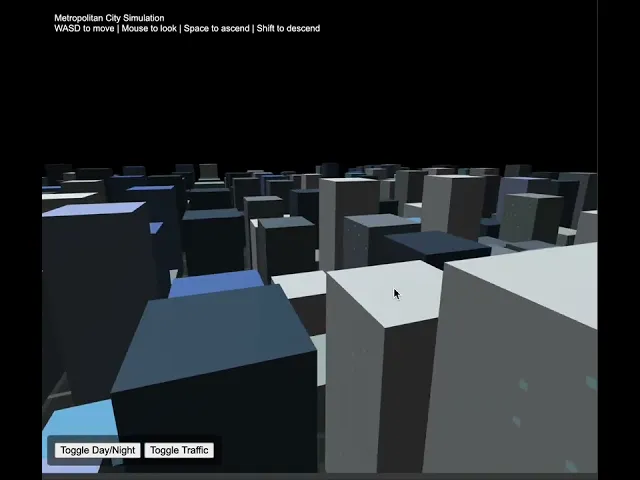

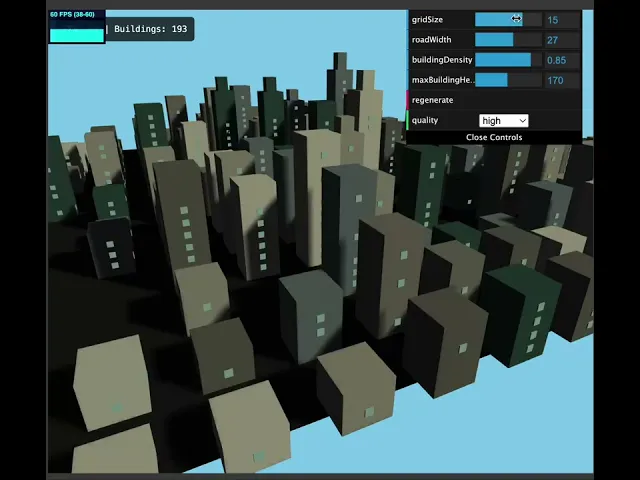

2. Simulation with 3js

I usually use a simple 3JS simulation test to get a good idea of how creative a model is. So, let's see how good this one is.

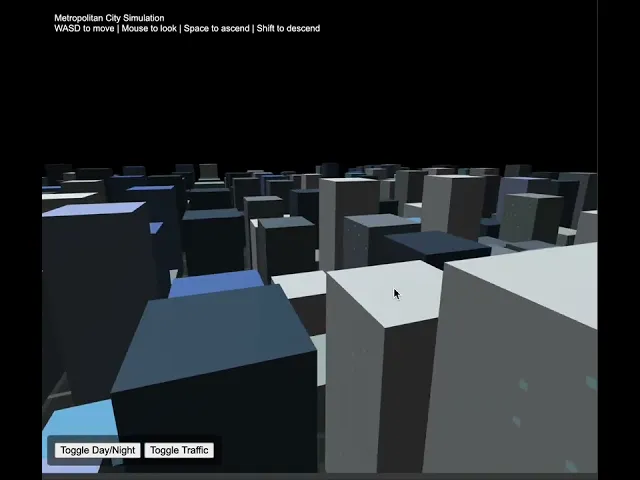

Prompt: write me the complete code in 3js for a metropolitan city landscape simulation. Make it browser-friendly so it can run lag-free

This was generated zero-shot. It even added navigation, a day/night toggle, and a traffic toggle. However, apart from traffic, the toggles weren't working.

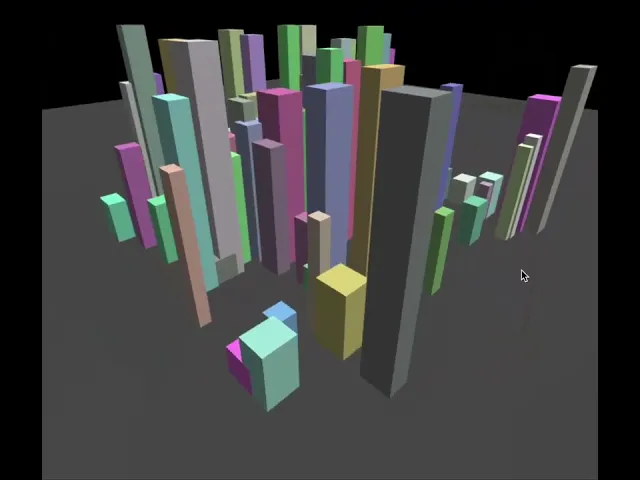

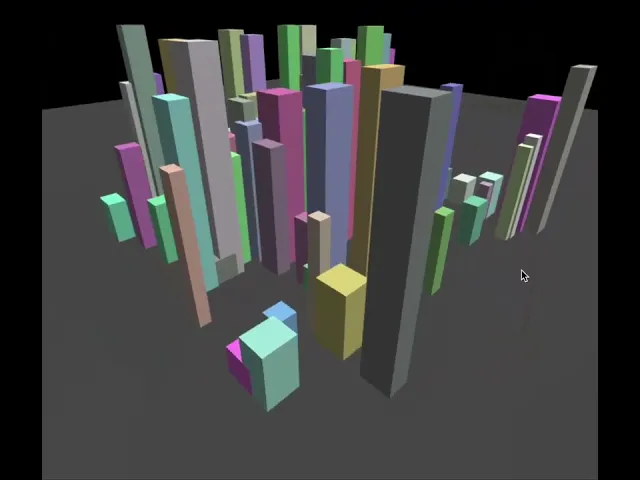

This is the response from Claude 3.5 Sonnet. The initial code was wrong because the imports were messed up; after a slight change, this is what it produced.

It was not great. The output was very dull.

This is the output from Claude 3.7 Sonnet Thinking after I asked it to fix the issues, so treat it as two-shot.

And this is from the Claude 3.7 Sonnet base.

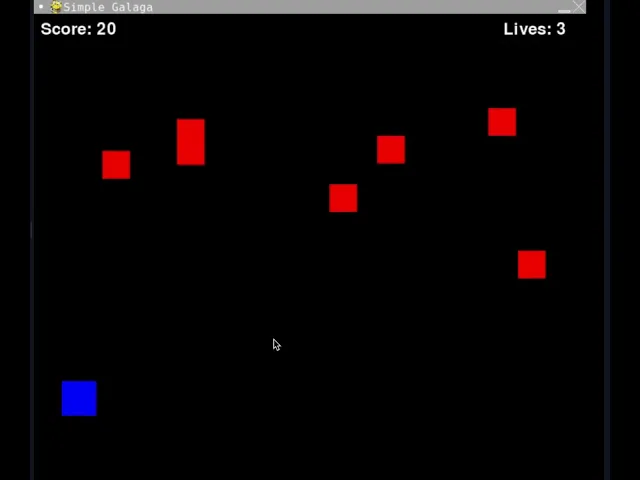

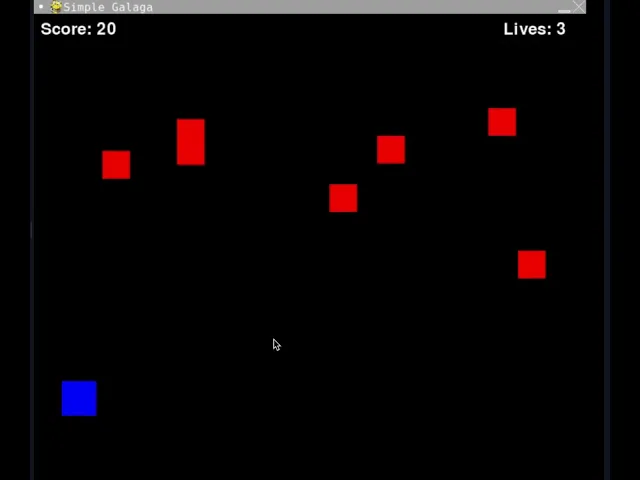

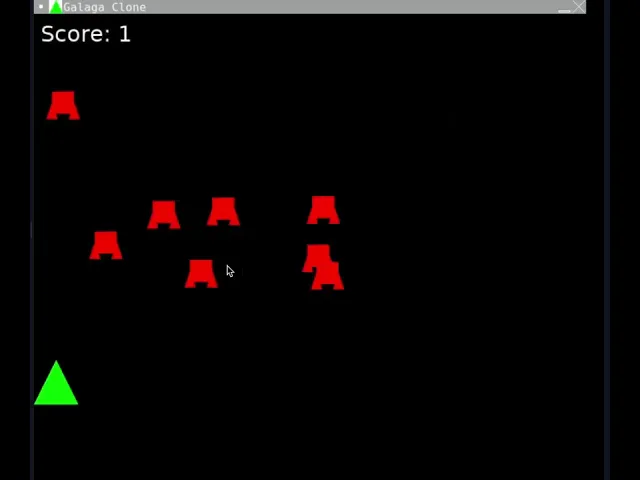

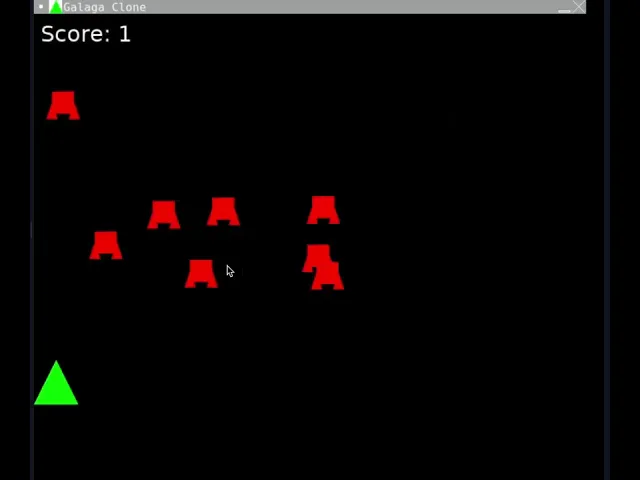

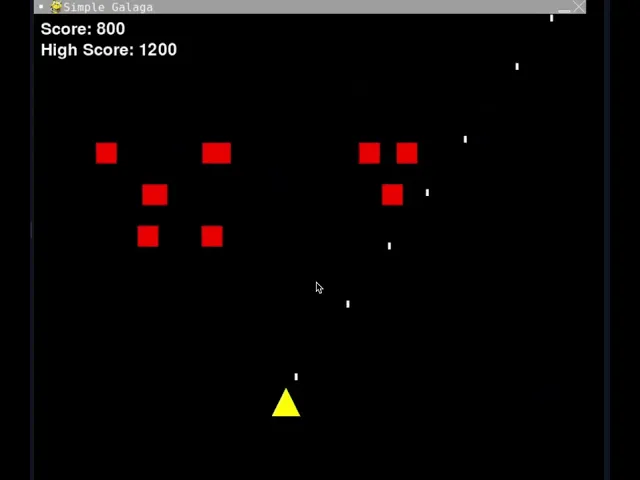

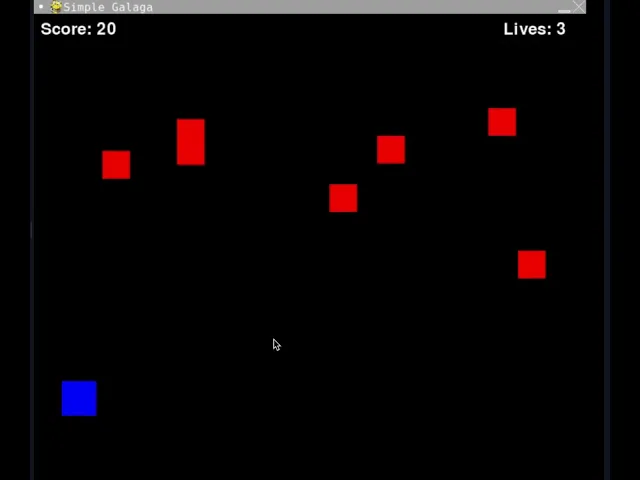

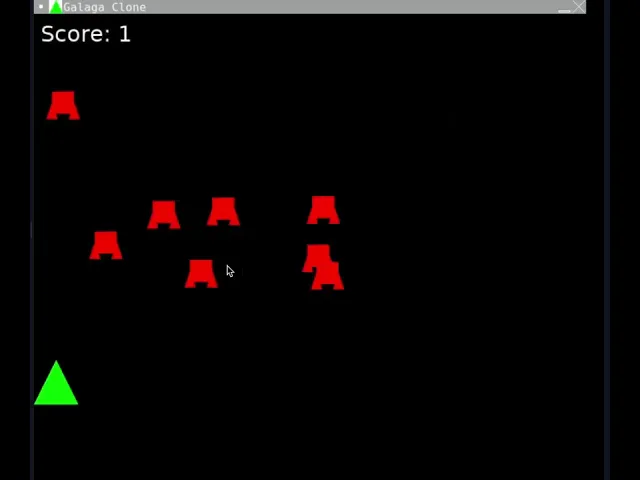

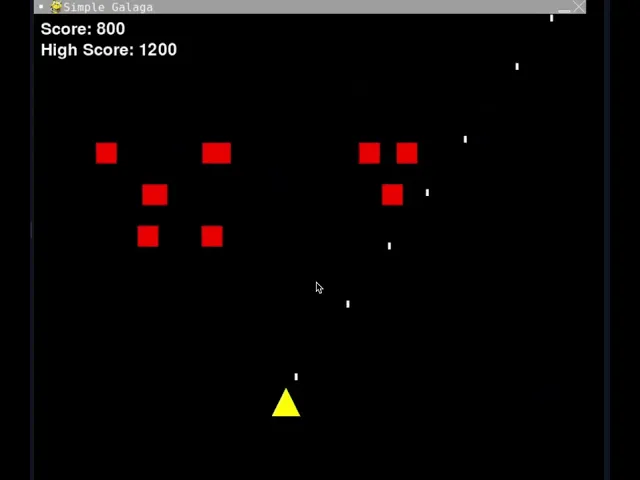

3. Building Galaga in Python

So, I asked it to build the Galaga game using Pygame.

Prompt: Create a simple playable version of Galaga in Python

Claude 3.7 Sonnet

Claude 3.5 Sonnet

Of the three models, Claude 3.5 Sonnet did the best: the game mechanics were better and the flow was smoother overall. Deepseek v3 0324 came next, and Claude 3.7 Sonnet did the worst.

Observations on model performance

Deepseek v3 0324 understands user intention better than before; I'd say it's better than Claude 3.7 Sonnet, both base and thinking. 3.5 is still better at this (perhaps the best).

In raw code-generation quality, it is better than 3.7 and on par with, sometimes better than, 3.5.

Great at reasoning, much better than any other non-reasoning model available right now.

Better at instruction following than 3.7 Sonnet but below 3.5 Sonnet.

Conclusion

Deepseek v3 0324 is not just a tiny bump in capability but a massive upgrade over its predecessor. It's certainly on the level of Claude 3.7 Sonnet and often better. Considering the API pricing and the fact that it is freely available in Deepseek Chat, it is the best model for most use cases.

It's great for code generation, building LLM-powered apps, writing, and everything else Claude 3.7 Sonnet can do. You may still find Claude 3.5 Sonnet better at some real-world tasks, but the gap has almost completely closed.

You might be wrong if you think this will be used as the base for R2. Going by their track record, they wouldn't base R2 on a V3 checkpoint but on a much better model, like a V4.

The race to AGI will be interesting.

