Claude 3.7 Sonnet thinking vs. Deepseek r1


So, Anthropic finally broke the silence and released Claude 3.7 Sonnet, a hybrid model that can think step-by-step like a thinking model for complex reasoning tasks and answer instantly like a base model.

On the ARC-AGI benchmark, Claude 3.7 Sonnet with thinking scored on par with o3-mini-high at 16k context, and r1 is comparable to the base Sonnet model. Anthropic sure has done a great job.

For reference, Deepseek r1 scored around 15%.

But I have been using Deepseek r1 for a while, and it gets many of the things that matter done. So, I was curious how it would stack up against the new Claude 3.7 Sonnet.

I will compare both models across tasks like complex reasoning, mathematics, coding, and writing.

So, let’s get started.

TL;DR

Both Claude and Deepseek r1 fall in the same ballpark for day-to-day reasoning and math tasks.

As Anthropic explicitly mentioned, they have trained Claude for practical use cases; this is also reflected in the tests.

Claude performs much better than Deepseek r1 in the coding department. There is not much comparison.

Claude is good at technical writing, while Deepseek r1 is more human-like.

Claude 3.7 Sonnet vs. Deepseek r1

It would be criminal not to consider the pricing before any comparison. This is important for many users, especially those building applications on top of these models.

DeepSeek R1 Pricing

Input Tokens (Cache Hit): $0.14 per million tokens

Input Tokens (Cache Miss): $0.55 per million tokens

Output Tokens: $2.19 per million tokens

Claude 3.7 Sonnet Pricing

Input Tokens: $3.00 per million tokens

Output Tokens: $15.00 per million tokens

From a cost perspective, Deepseek r1 is still the king. Given the reasoning capabilities it offers at this price, it is an absolute beast. It’s also open-source, and you can host it on your own hardware, which matters for privacy-sensitive enterprises.
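To make the price gap concrete, here is a minimal sketch that turns the per-million-token prices listed above into a per-request estimate. The dictionary keys and the `estimate_cost` helper are my own illustrative names, not API model identifiers, and the sketch assumes cached input is billed at the normal input rate when no cache price is listed.

```python
# Prices in USD per million tokens, copied from the lists above.
# The keys are illustrative labels, not official API model IDs.
PRICES = {
    "deepseek-r1": {"input": 0.55, "cached_input": 0.14, "output": 2.19},
    "claude-3.7-sonnet": {"input": 3.00, "output": 15.00},
}


def estimate_cost(model, input_tokens, output_tokens, cached_input_tokens=0):
    """Return the estimated cost in USD for one request.

    cached_input_tokens is the part of input_tokens served from cache.
    Assumption: if no cache price is listed, cached tokens cost the
    same as regular input tokens.
    """
    price = PRICES[model]
    cached_rate = price.get("cached_input", price["input"])
    uncached_tokens = input_tokens - cached_input_tokens
    total = (
        uncached_tokens * price["input"]
        + cached_input_tokens * cached_rate
        + output_tokens * price["output"]
    )
    return total / 1_000_000


if __name__ == "__main__":
    # One million input tokens and one million output tokens, no cache hits.
    for name in PRICES:
        print(name, round(estimate_cost(name, 1_000_000, 1_000_000), 2))
```

With these list prices, the same one-million-in, one-million-out workload comes to about $2.74 on Deepseek r1 and $18.00 on Claude 3.7 Sonnet.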

But raw capability matters as well. So, let’s compare these two models.

Complex reasoning

1. Riddle to judge cognitive bias

This is a straightforward question, but most models I have tried fail to answer it correctly.

Prompt: A woman and her son are in a car accident. The woman is sadly killed. The boy is rushed to the hospital. When the doctor sees the boy, he says, “I can’t operate on this child; he is my son! How is this possible?

I tweaked the question, and Claude's answer falls apart.

Prompt: The surgeon, who is the boy’s father, says, “I can’t operate on this child; he is my son”, who is the surgeon of this child. Be straightforward”.

It was the same case with Deepseek r1 as well. This has become my go-to question for vibe-checking reasoning models.

2. Blood Relationship

Prompt: Jeff has two brothers, and each of his brothers has three sisters. Each of the sisters has four step-brothers and five step-sisters, for a total of eight siblings in this family.

Claude’s output was similar to Deepseek's and even to o3-mini's.

However, Deepseek r1, as usual, has gems hidden in the CoT. Though its final output was wrong, it reached the correct answer in its chain of thought.

3. Playing Tic-tac-toe

I asked Claude to make the next move that would ensure its victory. I expected it to answer correctly, but it failed.

Deepseek r1 is not a multi-modal model, so I could not run this test on it.
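For anyone who wants to check a model's answer on this kind of task, a small brute-force search is enough, since tic-tac-toe has very few positions. The sketch below is my own helper, not what either model produced, and the example board is hypothetical because the original position is not reproduced in the text. It lists every move that guarantees a win for the player to move.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def outcome(board, player):
    """Best result for the player to move: +1 win, 0 draw, -1 loss.

    board is a 9-character string of 'X', 'O' and ' '.
    """
    won = winner(board)
    if won is not None:
        return 1 if won == player else -1
    if " " not in board:
        return 0
    other = "O" if player == "X" else "X"
    best = -1
    for i, cell in enumerate(board):
        if cell == " ":
            child = board[:i] + player + board[i + 1:]
            best = max(best, -outcome(child, other))
    return best


def winning_moves(board, player):
    """Return the indices of all moves that force a win for player."""
    other = "O" if player == "X" else "X"
    moves = []
    for i, cell in enumerate(board):
        if cell == " ":
            child = board[:i] + player + board[i + 1:]
            if -outcome(child, other) == 1:
                moves.append(i)
    return moves


if __name__ == "__main__":
    # Hypothetical position: X on squares 0 and 1, O on squares 3 and 4.
    print(winning_moves("XX OO    ", "X"))  # [2]
```

An empty list means no move guarantees a win from that position, which is also a useful sanity check on the question itself.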

Mathematics

1. Find the GCD of the set {n^99(n^60-1): n>1}

It was a fairly difficult question, and Claude couldn’t solve it.

However, Deepseek r1 was spot on.
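The post does not state the expected answer, so here is a rough numerical check you can use to grade a model's response. It takes the GCD of the first couple of hundred terms, which Python's arbitrary-precision integers handle easily. The function names and the cut-off of 200 are my own choices. A finite sample can only ever give a multiple of the true GCD, but for this set the value stops shrinking almost immediately.

```python
from functools import reduce
from math import gcd


def term(n):
    """The n-th element of the set: n^99 * (n^60 - 1)."""
    return n**99 * (n**60 - 1)


def sample_gcd(limit):
    """GCD of term(n) for n = 2 .. limit (inclusive)."""
    return reduce(gcd, (term(n) for n in range(2, limit + 1)))


if __name__ == "__main__":
    value = sample_gcd(200)
    print(value)  # 6814407600
    # The same number written as a product of prime powers.
    print(value == 2**4 * 3**2 * 5**2 * 7 * 11 * 13 * 31 * 61)  # True
```

The primes that show up are exactly those p for which p - 1 divides 60, which is the structure a correct chain of thought should arrive at.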

2. Find (x-14)^10 where x is the number of vowels in the response

Claude 3.7 Sonnet was able to answer it correctly.

Deepseek r1 wasn’t able to get it right.
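Grading this one by hand is tedious, so a tiny checker helps. This is a sketch under my own assumptions: only a, e, i, o, u count as vowels (case-insensitive), and a response counts as self-consistent if the number it implies appears somewhere in its own text. Both helper names are hypothetical.

```python
def count_vowels(text):
    """Count a, e, i, o, u in text, ignoring case (y is not counted)."""
    return sum(1 for ch in text.lower() if ch in "aeiou")


def puzzle_value(response):
    """Compute (x - 14)^10 where x is the number of vowels in response."""
    return (count_vowels(response) - 14) ** 10


def is_self_consistent(response):
    """True if the response contains the value that its own vowel count implies."""
    return str(puzzle_value(response)) in response


if __name__ == "__main__":
    # A bare number has no vowels, so x = 0 and the value is 14^10.
    print(puzzle_value("289254654976"))        # 289254654976
    print(is_self_consistent("289254654976"))  # True
```

The trick in the puzzle is that any explanation the model adds changes the vowel count, so the shortest consistent answer is just the number itself.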

Summary on Math

Math ability is a mixed bag. You can never go wrong with either, but Deepseek’s cost-to-performance ratio makes it unbeatable. Still, Claude is clever, and Deepseek is nerdier.

Coding

Coding has always been Claude’s domain; Anthropic even trains the models specifically on coding tokens to make them a developer’s darling. This is Claude on SWE-Bench.

But let’s compare both of them.

1. Creating SVGs

This is an interesting test; I picked it from Simon Willison.

Prompt: Create an SVG of a unicorn running in the field.

And this was Claude’s response.

From Deepseek r1.

Claude’s creation is a bit better, with a better background and view. Deepseek's is decent, but not quite there.

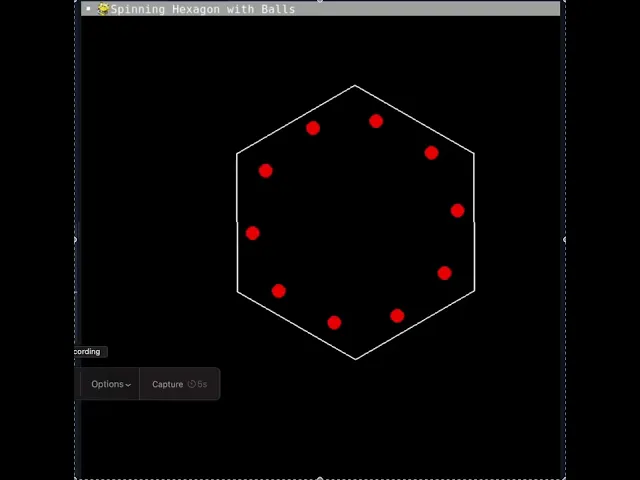

2. Balls in a geometrical object

Prompt: Write me a Python script for 10 balls inside a fast-spinning hexagon.

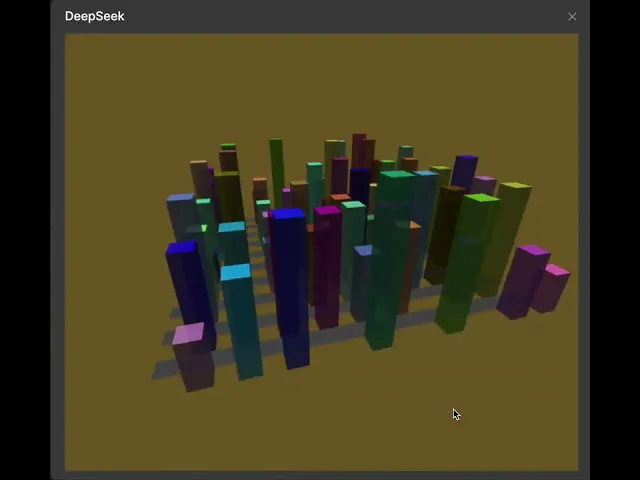

Claude 3.7 Sonnet output

Code of the simulation

    import pygame
    import math
    import sys

    # Initialize Pygame
    pygame.init()

    # Screen settings
    SCREEN_WIDTH = 800
    SCREEN_HEIGHT = 600
    screen = pygame.display.set_mode((SCREEN_WIDTH, SCREEN_HEIGHT))
    pygame.display.set_caption("Spinning Hexagon with Balls")

    # Colors
    BACKGROUND_COLOR = (0, 0, 0)
    HEXAGON_COLOR = (255, 255, 255)
    BALL_COLOR = (255, 0, 0)

    # Center coordinates
    CENTER = (SCREEN_WIDTH // 2, SCREEN_HEIGHT // 2)

    # Hexagon parameters
    HEX_RADIUS = 200  # Distance from center to vertices
    ROTATION_SPEED = 0.15  # Radians per frame

    # Ball parameters
    NUM_BALLS = 10
    BALL_RADIUS = 10
    BALL_ORBIT_RADIUS = 150  # Distance from center for ball positions

    # Initialize rotation angle
    angle = 0

    # Main loop
    clock = pygame.time.Clock()
    running = True
    while running:
        for event in pygame.event.get():
            if event.type == pygame.QUIT:
                running = False

        # Clear screen
        screen.fill(BACKGROUND_COLOR)

        # Update rotation angle
        angle += ROTATION_SPEED

        # Draw hexagon
        hex_points = []
        for i in range(6):
            theta = angle + i * math.pi / 3  # 60 degrees between vertices
            x = CENTER[0] + HEX_RADIUS * math.cos(theta)
            y = CENTER[1] + HEX_RADIUS * math.sin(theta)
            hex_points.append((x, y))
        pygame.draw.polygon(screen, HEXAGON_COLOR, hex_points, 2)

        # Draw balls
        for i in range(NUM_BALLS):
            ball_angle = angle + (2 * math.pi * i) / NUM_BALLS
            x = CENTER[0] + BALL_ORBIT_RADIUS * math.cos(ball_angle)
            y = CENTER[1] + BALL_ORBIT_RADIUS * math.sin(ball_angle)
            pygame.draw.circle(screen, BALL_COLOR, (int(x), int(y)), BALL_RADIUS)

        # Update display
        pygame.display.flip()
        clock.tick(60)

    pygame.quit()
    sys.exit()


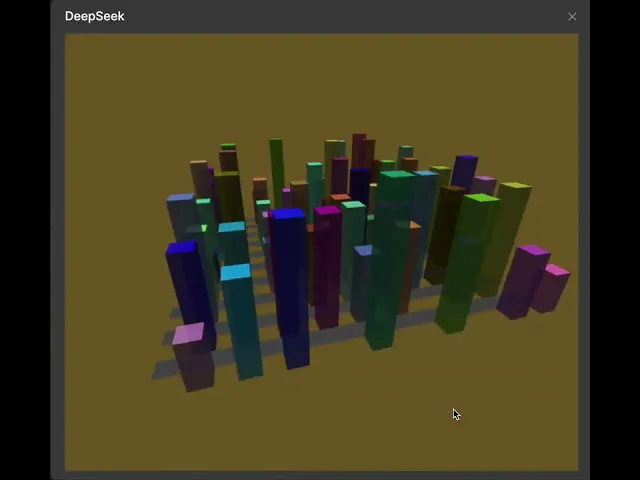

Deepseek r1 output

Code for the same

    import pygame
    import math
    import sys

    # Initialize Pygame
    pygame.init()

    # Screen settings
    SCREEN_WIDTH = 800
    SCREEN_HEIGHT = 600
    screen = pygame.display.set_mode((SCREEN_WIDTH, SCREEN_HEIGHT))
    pygame.display.set_caption("Spinning Hexagon with Balls")

    # Colors
    BACKGROUND_COLOR = (0, 0, 0)
    HEXAGON_COLOR = (255, 255, 255)
    BALL_COLOR = (255, 0, 0)

    # Center coordinates
    CENTER = (SCREEN_WIDTH // 2, SCREEN_HEIGHT // 2)

    # Hexagon parameters
    HEX_RADIUS = 200  # Distance from center to vertices
    ROTATION_SPEED = 0.15  # Radians per frame

    # Ball parameters
    NUM_BALLS = 10
    BALL_RADIUS = 10
    BALL_ORBIT_RADIUS = 150  # Distance from center for ball positions

    # Initialize rotation angle
    angle = 0

    # Main loop
    clock = pygame.time.Clock()
    running = True
    while running:
        for event in pygame.event.get():
            if event.type == pygame.QUIT:
                running = False

        # Clear screen
        screen.fill(BACKGROUND_COLOR)

        # Update rotation angle
        angle += ROTATION_SPEED

        # Draw hexagon
        hex_points = []
        for i in range(6):
            theta = angle + i * math.pi / 3  # 60 degrees between vertices
            x = CENTER[0] + HEX_RADIUS * math.cos(theta)
            y = CENTER[1] + HEX_RADIUS * math.sin(theta)
            hex_points.append((x, y))
        pygame.draw.polygon(screen, HEXAGON_COLOR, hex_points, 2)

        # Draw balls
        for i in range(NUM_BALLS):
            ball_angle = angle + (2 * math.pi * i) / NUM_BALLS
            x = CENTER[0] + BALL_ORBIT_RADIUS * math.cos(ball_angle)
            y = CENTER[1] + BALL_ORBIT_RADIUS * math.sin(ball_angle)
            pygame.draw.circle(screen, BALL_COLOR, (int(x), int(y)), BALL_RADIUS)

        # Update display
        pygame.display.flip()
        clock.tick(60)

    pygame.quit()
    sys.exit()


Claude's spinning hexagon has better ball-moving physics, while Deepseek got the “fast-moving” part right. Overall, Claude was better.
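The script printed above only moves the balls along a fixed orbit, so it does not show what "ball-moving physics" involves. As a rough illustration, and not either model's actual code, the core step in a simulation like this is bouncing a ball off a wall that may itself be moving. The `reflect` helper below is a hypothetical sketch that assumes a perfectly elastic bounce and a wall much heavier than the ball.

```python
def reflect(velocity, normal, wall_velocity=(0.0, 0.0)):
    """Bounce a 2D velocity off a wall.

    velocity      -- ball velocity (vx, vy)
    normal        -- unit vector pointing from the wall into the hexagon
    wall_velocity -- velocity of the wall at the contact point
                     (non-zero when the hexagon is spinning)

    Assumes a perfectly elastic bounce and an infinitely heavy wall.
    """
    vx, vy = velocity
    nx, ny = normal
    wx, wy = wall_velocity
    # Speed of the ball toward the wall, measured relative to the wall.
    approach = (vx - wx) * nx + (vy - wy) * ny
    if approach >= 0:
        # Already moving away from the wall: leave the velocity alone.
        return (vx, vy)
    # Flip the normal component of the relative velocity.
    return (vx - 2 * approach * nx, vy - 2 * approach * ny)


if __name__ == "__main__":
    # Ball falling onto a static floor whose normal points up.
    print(reflect((1.0, -1.0), (0.0, 1.0)))              # (1.0, 1.0)
    # Same ball, but the floor is moving up at 2 units per frame.
    print(reflect((1.0, -1.0), (0.0, 1.0), (0.0, 2.0)))  # (1.0, 5.0)
```

The second call shows why a fast-spinning hexagon throws balls around: the moving wall adds its own speed to the rebound.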

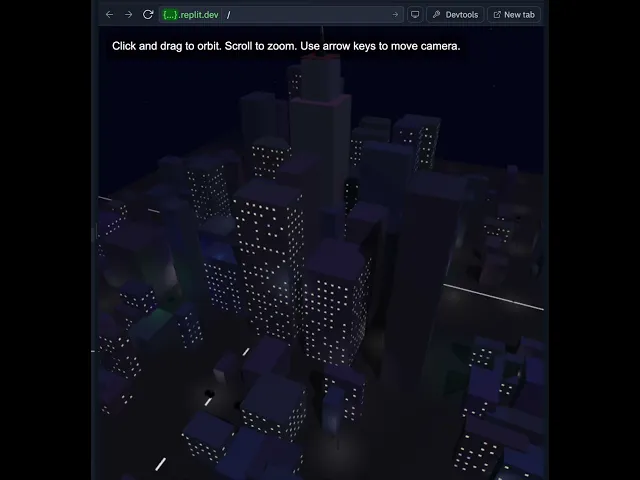

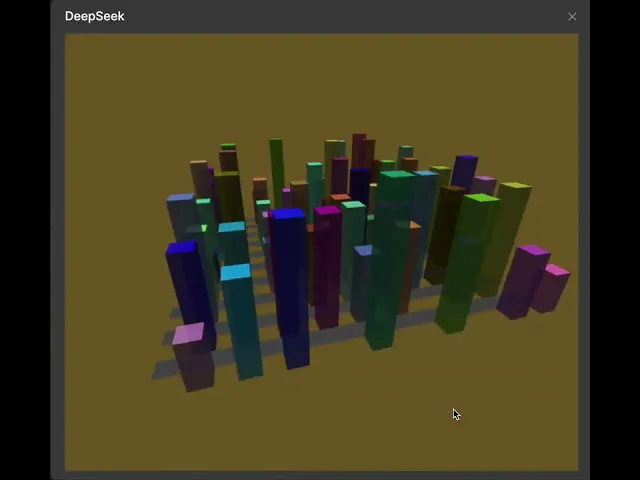

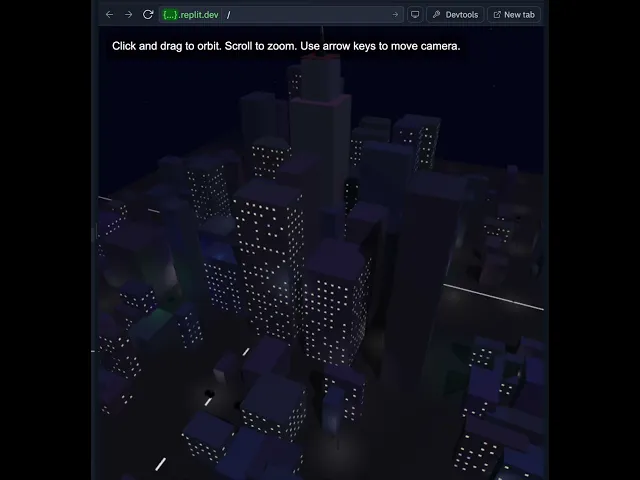

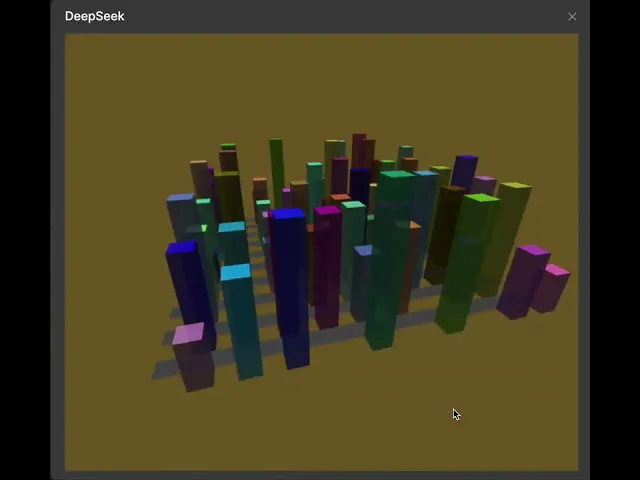

3. 3D animation using Three.js

Prompt: Can you make a 3d animation of a metropolitan city using 3js?

The code:

Deepseek r1’s Output

    <!DOCTYPE html>
    <html>
    <head>
        <title>3D Metropolitan City</title>
        <style>
            body { margin: 0; }
            canvas { display: block; }
        </style>
    </head>
    <body>
        <script src="https://cdnjs.cloudflare.com/ajax/libs/three.js/r128/three.min.js"></script>
        <script>
            let scene, camera, renderer, city;
            let dayCycle = true;
            let lights = [];

            function init() {
                // Create scene
                scene = new THREE.Scene();

                // Create camera
                camera = new THREE.PerspectiveCamera(75, window.innerWidth / window.innerHeight, 0.1, 1000);
                camera.position.set(0, 50, 100);

                // Create renderer
                renderer = new THREE.WebGLRenderer({ antialias: true });
                renderer.setSize(window.innerWidth, window.innerHeight);
                renderer.setClearColor(0x87CEEB);
                document.body.appendChild(renderer.domElement);

                // Create city
                createCity();

                // Add lights
                const ambientLight = new THREE.AmbientLight(0x404040);
                scene.add(ambientLight);

                const directionalLight = new THREE.DirectionalLight(0xffffff, 0.8);
                directionalLight.position.set(0, 100, 0);
                scene.add(directionalLight);

                // Add event listeners
                window.addEventListener('resize', onWindowResize, false);
                window.addEventListener('click', toggleDayCycle, false);
            }

            function createCity() {
                city = new THREE.Group();

                // Create buildings
                const buildingGeometry = new THREE.BoxGeometry(5, 1, 5);
                for(let x = -50; x < 50; x += 10) {
                    for(let z = -50; z < 50; z += 10) {
                        if(Math.random() > 0.3) {
                            const height = Math.random() * 30 + 10;
                            const building = new THREE.Mesh(
                                buildingGeometry,
                                new THREE.MeshPhongMaterial({
                                    color: new THREE.Color(Math.random(), Math.random(), Math.random()),
                                    transparent: true,
                                    opacity: 0.8
                                })
                            );
                            building.scale.y = height;
                            building.position.set(x, height/2, z);
                            city.add(building);
                        }
                    }
                }

                // Create roads
                const roadGeometry = new THREE.PlaneGeometry(100, 5);
                const roadMaterial = new THREE.MeshPhongMaterial({ color: 0x444444 });
                for(let z = -45; z <= 45; z += 10) {
                    const road = new THREE.Mesh(roadGeometry, roadMaterial);
                    road.rotation.x = -Math.PI / 2;
                    road.position.y = 0.1;
                    road.position.z = z;
                    city.add(road);
                }

                scene.add(city);
            }

            function animate() {
                requestAnimationFrame(animate);

                // Rotate camera around city
                const time = Date.now() * 0.001;
                camera.position.x = Math.cos(time * 0.3) * 100;
                camera.position.z = Math.sin(time * 0.3) * 100;
                camera.lookAt(scene.position);

                // Update day/night cycle
                if(dayCycle) {
                    renderer.setClearColor(new THREE.Color().setHSL(
                        (Math.sin(time * 0.2) + 1) / 2, 0.5, 0.3
                    ));
                }

                renderer.render(scene, camera);
            }

            function onWindowResize() {
                camera.aspect = window.innerWidth / window.innerHeight;
                camera.updateProjectionMatrix();
                renderer.setSize(window.innerWidth, window.innerHeight);
            }

            function toggleDayCycle() {
                dayCycle = !dayCycle;
                renderer.setClearColor(dayCycle ? 0x87CEEB : 0x000000);
            }

            init();
            animate();
        </script>
    </body>
    </html>


There is no need to think twice here: Claude blows Deepseek r1 out of the water.

Summary of coding abilities

Claude 3.7 Sonnet is hands down a better coding model than Deepseek r1; for both the Python and the Three.js code, Claude was far ahead. This is unsurprising, considering Anthropic has explicitly made Claude better at coding.

Writing

I have used both models extensively. Claude is a lot better for professional writing, especially technical stuff. Deepseek r1 is weirdly creative and more human. When writing your thesis or explaining any technical concept, Claude shines, while Deepseek r1 is better if you just want to talk to it.

Final Verdict

For reasoning and mathematics, Claude feels more structured and mature.

Deepseek r1 has a less professional tone but is enough for most real-world tasks.

Claude 3.7 Sonnet is currently the best coding model. It writes better, more transparent code than other models, and it does so faster.

Claude is better at technical writing. However, Deepseek has a more human tone and approach.

