Building a voice agent to command all your apps

I am the Voice from the Outer World! I will lead you to PARADISE

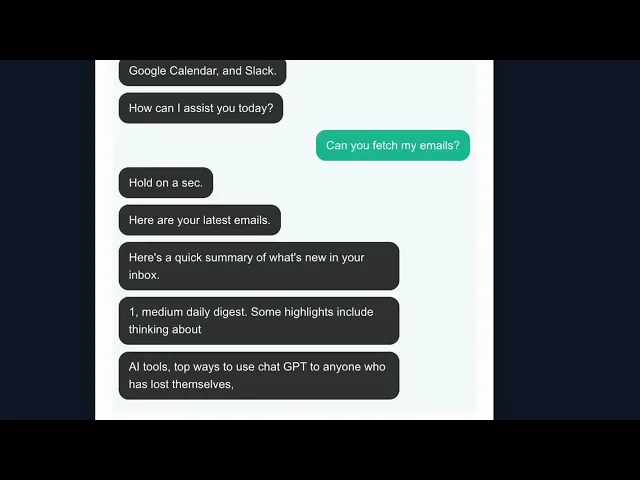

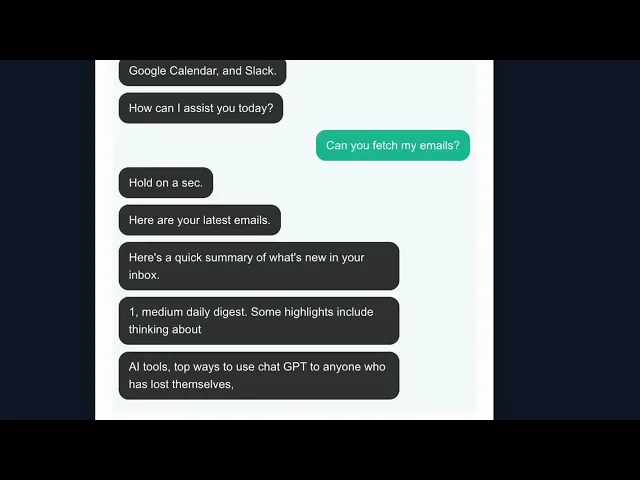

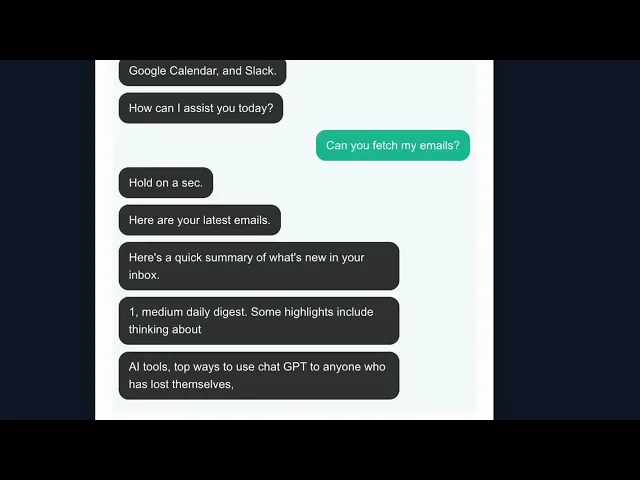

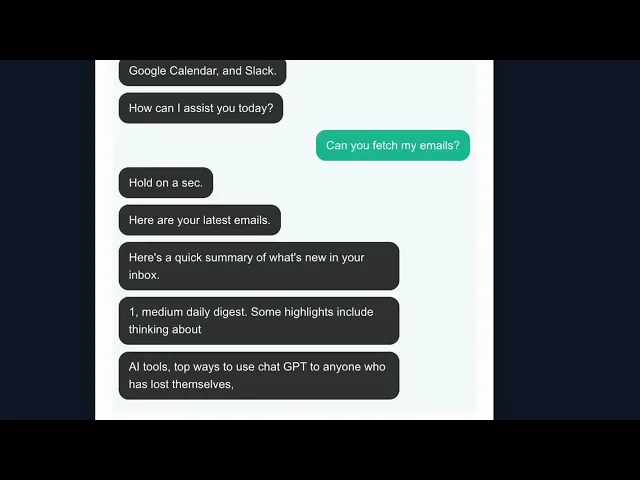

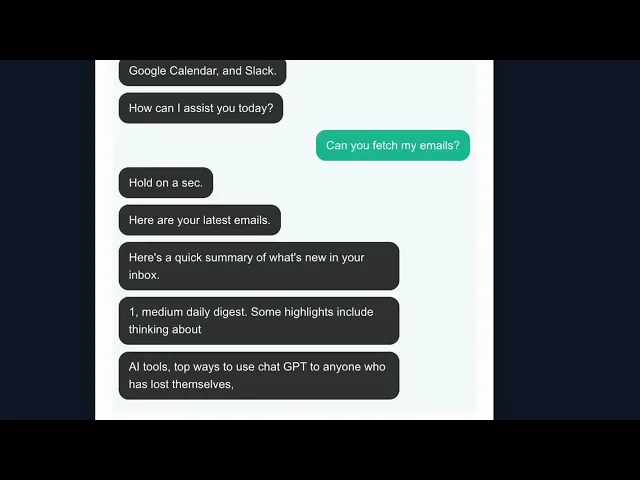

Paul Atreides uses the Voice as a tool for control and assertion. Imagine commandeering an AI agent with that same voice. I built one using Vapi, OpenAI TTS, and Composio, integrated with Gmail, Slack, and Google Calendar. It can summarise emails, schedule meetings, and search Slack messages, which makes the entire morning routine stress-free. You can extend it with as many additional tools as you want.

This was built using Claude Code inside the Cursor IDE.

The problem in Arrakis

Checking Slack and Gmail is a morning ritual I religiously follow, but comprehending each message while still half-asleep feels like swimming through molasses. Voice agents excel at this exact problem - you can ask them to summarise the critical stuff, explain confusing threads, or drill into specific details while you make coffee. The impressions in the screenshot below also indicate that I’m not alone in facing this problem.

Cultivating the Spice

I started with a Next.js app and immediately hit the latency wall that kills most voice projects. Voice demands conversational flow: unlike text interfaces, where users tolerate waiting, voice agents need to respond instantly or the illusion breaks. My initial approach was embarrassingly naive: STT → LLM → Tool Call → TTS. Sequential processing meant 3-5 seconds of awkward silence after each command.
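To make the latency problem concrete, here is a toy model of the naive chain. It assumes each stage must finish completely before the next one starts, so the silence the user hears is the sum of every stage's duration. A streaming pipeline, which is roughly the kind of overlap a platform like Vapi provides, can start each stage as soon as the previous one emits its first partial output, so the best case is closer to the sum of time-to-first-output values. The stage timings below are hypothetical placeholders, not measurements from this project.

```typescript
// Toy latency model for a voice pipeline. All timings are hypothetical placeholders.
interface Stage {
  name: string;
  firstOutputMs: number; // time until the stage emits its first partial output
  totalMs: number;       // time until the stage has finished completely
}

// Naive chain: each stage waits for the previous stage to finish completely,
// so the user's silence is the sum of every stage's total duration.
function sequentialSilenceMs(stages: Stage[]): number {
  return stages.reduce((sum, s) => sum + s.totalMs, 0);
}

// Idealised streaming chain: each stage starts on the previous stage's first
// partial output. This is a best case; a tool call, for example, really needs
// the LLM's complete arguments before it can run.
function streamingSilenceMs(stages: Stage[]): number {
  return stages.reduce((sum, s) => sum + s.firstOutputMs, 0);
}

const naivePipeline: Stage[] = [
  { name: "STT", firstOutputMs: 300, totalMs: 800 },
  { name: "LLM", firstOutputMs: 400, totalMs: 1500 },
  { name: "Tool Call", firstOutputMs: 600, totalMs: 600 }, // returns all at once
  { name: "TTS", firstOutputMs: 200, totalMs: 900 },
];

console.log(`sequential: ${sequentialSilenceMs(naivePipeline)} ms`);
console.log(`streaming:  ${streamingSilenceMs(naivePipeline)} ms`);
```

The point of the model is the shape of the sums, not the specific numbers: in the sequential version every slow stage adds its full duration to the silence.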

Then I discovered Vapi, which handles the entire voice pipeline elegantly - parallel processing, model swapping, automatic interruption handling. It turned my clunky prototype into something that actually feels conversational.

For integrations, Composio was the obvious choice - it abstracts away the OAuth complexity and gives you clean, reliable connections to Gmail, Calendar, and Slack without writing boilerplate for each API.

On the development side, I'm convinced that running Claude Code inside Cursor is the optimal setup. Standalone Claude Code in the terminal lacks proper diffs, so you're flying blind with file changes. Cursor alone has good DX but weaker code generation. But Claude Code inside Cursor? You get Claude's superior coding ability with Cursor's visual diffs, giving you much more control and visibility over the changes being made.

Where the Spice flows

User → Vapi Widget: User clicks “Talk to Assistant” to start the voice session.

Widget → LLM: The widget starts a call, sending the system prompt, model, voice, and the tool catalogue from vapiToolsConfig with concrete server URLs.

LLM → Widget: The LLM streams speech and final transcripts back; the widget updates speaking/listening indicators and the transcript UI.

LLM → API Route: When an action is needed (e.g., send email), the LLM emits a tool call, which Vapi delivers as an HTTP POST to the matching /api/tools/... route.

API internal processing (route-helpers): The route extracts the toolCallId and arguments, races execution against a 30-second timeout, and normalises errors and successes into Vapi’s expected response shape.

API → Composio: The route calls the relevant wrapper in lib/composio.ts, which invokes composio.tools.execute(...).

Composio → Provider: Composio talks to Gmail/Calendar/Slack APIs and returns a ComposioToolResult.

API → LLM: The API responds with { results: [{ toolCallId, result }] }. The LLM consumes this and continues the conversation with updated context.

LLM/Widget → User: The widget reflects new messages/results in the transcript and UI state.
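The route-helpers step in the flow above (extract the toolCallId and arguments, race the work against a 30-second timeout, normalise the outcome into { results: [{ toolCallId, result }] }) can be sketched as follows. This is a minimal sketch, not the project's actual code: the request shape (message.toolCallList entries with id, function.name and function.arguments) is my assumption about Vapi's tool-calls webhook, and the names extractToolCall, withTimeout and handleToolCall are hypothetical.

```typescript
// Assumed shape of Vapi's tool-calls request; verify against Vapi's current docs.
interface VapiToolCall {
  id: string;
  function: { name: string; arguments: Record<string, unknown> | string };
}

interface VapiToolCallRequest {
  message: { type: string; toolCallList: VapiToolCall[] };
}

interface VapiToolResponse {
  results: { toolCallId: string; result: string }[];
}

const TOOL_TIMEOUT_MS = 30_000;

// Pull the first tool call's id and arguments out of the request body.
// Arguments may arrive as an object or as a JSON string, so both are accepted.
function extractToolCall(body: VapiToolCallRequest): { toolCallId: string; args: Record<string, unknown> } {
  const call = body.message.toolCallList[0];
  if (!call) throw new Error("Request contained no tool calls");
  const raw = call.function.arguments;
  const args = typeof raw === "string" ? (JSON.parse(raw) as Record<string, unknown>) : raw;
  return { toolCallId: call.id, args };
}

// Race the work against a timeout; the timer is cleared whichever side wins.
async function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Tool timed out after ${ms} ms`)), ms);
  });
  try {
    return await Promise.race([work, timeout]);
  } finally {
    clearTimeout(timer);
  }
}

// Run a tool and normalise success or failure into { results: [{ toolCallId, result }] }.
async function handleToolCall(
  body: VapiToolCallRequest,
  run: (args: Record<string, unknown>) => Promise<unknown>,
  timeoutMs: number = TOOL_TIMEOUT_MS,
): Promise<VapiToolResponse> {
  const { toolCallId, args } = extractToolCall(body);
  try {
    const output = await withTimeout(run(args), timeoutMs);
    return { results: [{ toolCallId, result: JSON.stringify(output ?? null) }] };
  } catch (err) {
    // Errors are reported as a result string so the LLM can explain the failure aloud.
    const message = err instanceof Error ? err.message : String(err);
    return { results: [{ toolCallId, result: `Error: ${message}` }] };
  }
}
```

In a Next.js App Router route (for example a hypothetical app/api/tools/send_email/route.ts), an exported POST(req) handler could pass await req.json() and a function that calls the Composio wrapper into handleToolCall, then return the object with Response.json(...). Reporting failures inside result rather than as an HTTP error keeps the conversation going instead of leaving the agent silent.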

Following Shai Hulud

Claude Code's recent performance has been frustrating, and I'm not alone in noticing the decline in quality. Despite feeding it comprehensive documentation from Composio and Vapi, it consistently reverted to outdated API patterns. I'd explicitly show it how to implement routes using Vapi's specific request/response schemas, and it would acknowledge understanding, then immediately generate code using deprecated methods. Its debugging process became almost comical - fix one error, create three new ones, then insist the original fix was perfect while ignoring the fresh breakage. The silver lining? It nailed the core architecture, cleanly separating Composio actions into individual route files with a centralised wrapper.
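The architecture that did come out right, individual route files sharing one centralised wrapper, might look roughly like this. It is a sketch under stated assumptions: the ToolExecutor interface mirrors how composio.tools.execute(slug, { userId, arguments }) is called in Composio's TypeScript SDK, but it is declared locally so the example runs without the SDK, the ComposioToolResult fields (successful, data, error) are assumed, and the tool slugs and helper names (runComposioTool, sendEmail, sendSlackMessage) are hypothetical.

```typescript
// Assumed result shape; check Composio's SDK types before relying on it.
interface ComposioToolResult {
  successful: boolean;
  data: unknown;
  error: string | null;
}

// Local stand-in for the Composio client's tools object (an assumption).
interface ToolExecutor {
  execute(
    slug: string,
    params: { userId: string; arguments: Record<string, unknown> },
  ): Promise<ComposioToolResult>;
}

// The one place that knows how to call Composio; every route reuses it.
// Failures are turned into thrown errors so the route helper can normalise them.
async function runComposioTool(
  executor: ToolExecutor,
  slug: string,
  userId: string,
  args: Record<string, unknown>,
): Promise<unknown> {
  const res = await executor.execute(slug, { userId, arguments: args });
  if (!res.successful) {
    throw new Error(res.error ?? `Composio tool ${slug} failed`);
  }
  return res.data;
}

// Thin per-action wrappers: each route file imports exactly one of these.
// The slugs are placeholders and may not match Composio's real action names.
const sendEmail = (ex: ToolExecutor, userId: string, args: Record<string, unknown>) =>
  runComposioTool(ex, "GMAIL_SEND_EMAIL", userId, args);

const sendSlackMessage = (ex: ToolExecutor, userId: string, args: Record<string, unknown>) =>
  runComposioTool(ex, "SLACK_SEND_MESSAGE", userId, args);
```

Injecting the executor keeps each route trivially testable with a fake client, and swapping the real Composio client in is a one-line change at the call site.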

The UI challenge revealed another Claude Code quirk: without explicit visual direction, it defaults to the same tired template every time - hero section, three feature cards, call it a day. Voice interfaces are surprisingly hard to find inspiration for; most hide behind wake words or bury the actual interaction. Thankfully, Vapi's documentation included a pre-built voice widget that I could feed directly to Claude Code as a starting point.

Results

The agent currently handles nine core actions across three platforms: Gmail (fetch, send, and draft), Slack (create channels, list conversations, and send messages), and Google Calendar (create events and find conflicts). Each action executes with sub-500ms latency - fast enough that conversation never breaks flow.

The real power is Composio's extensibility. Adding a new tool takes just a few lines of configuration rather than a fight with OAuth flows and API quirks. Want Notion for meeting notes? Linear for task creation? Each addition compounds the assistant's usefulness. The vision is simple: reduce the mechanical parts of knowledge work to voice commands.
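One way to keep "a few lines of configuration" literally true is a single registry that drives the tool catalogue the widget sends (vapiToolsConfig in the flow described earlier). This is a hypothetical sketch: the Vapi function-tool shape ({ type: "function", function: {...}, server: { url } }) is my assumption about Vapi's format, and the /api/tools/<name> naming, the parameter names, and the Composio slug are illustrative placeholders rather than the project's real values.

```typescript
interface JsonSchemaObject {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required?: string[];
}

interface ToolSpec {
  name: string;         // also used as the /api/tools/<name> route segment (assumed)
  description: string;  // what the LLM reads when deciding whether to call the tool
  composioSlug: string; // which Composio action the route forwards to (placeholder)
  parameters: JsonSchemaObject;
}

const TOOL_REGISTRY: ToolSpec[] = [
  {
    name: "send_email",
    description: "Send an email from the user's Gmail account.",
    composioSlug: "GMAIL_SEND_EMAIL",
    parameters: {
      type: "object",
      properties: {
        recipient_email: { type: "string" },
        subject: { type: "string" },
        body: { type: "string" },
      },
      required: ["recipient_email", "subject", "body"],
    },
  },
  // A Notion or Linear action would be one more entry here, plus its route file.
];

// Build the tool catalogue sent when a call starts, giving each tool a concrete server URL.
function buildVapiToolsConfig(baseUrl: string, registry: ToolSpec[] = TOOL_REGISTRY) {
  const root = baseUrl.replace(/\/+$/, ""); // strip trailing slashes to avoid "//api"
  return registry.map((spec) => ({
    type: "function" as const,
    function: {
      name: spec.name,
      description: spec.description,
      parameters: spec.parameters,
    },
    server: { url: `${root}/api/tools/${spec.name}` },
  }));
}
```

Because the catalogue and the routes read from the same entries, the tool the LLM is told about and the URL that serves it cannot drift apart.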

Vapi’s dashboard observability is extremely helpful for debugging voice-agent behaviour because, unlike with a text interface, you can’t directly inspect what happened in the conversation. Metrics and call logs give a clear picture of what the agent actually did.

Next on the roadmap: MCP (Model Context Protocol) support for smarter tool coordination, improved response handling so conversations feel natural rather than like a series of command-and-response exchanges, and a UI that actually shows what's happening under the hood. The current interface works, but it should feel like magic: visual feedback for active tools, confidence scores for actions, and a preview of what's about to happen before confirmation. The foundation is solid; now it's time to make it shine.
