DeepSeek recently released DeepSeek V3.2 Speciale - a reasoning-first model built for agents.

As a developer who uses both open- and closed-source models for my builds, I decided to test the new model (DeepSeek V3.2) against my most-used model, Claude Sonnet 4.5.

During the build, I also used Rube MCP, a single server that gives you access to apps like GitHub, Linear, and Supabase, and loads tools dynamically to avoid context bloat. This gives a clear picture of each model's tool-calling ability and also gives me an excuse to dogfood the product.

So, I built a complete web app with each model to stress-test its capabilities and found some fascinating insights.

This post covers my build process, prompts, exact builds and a few insights. Let’s dive in!

TL;DR

I tested DeepSeek V3.2 and Claude Sonnet 4.5 on a full-stack feature-voting app.

DeepSeek V3.2 built parts of the app but stumbled on execution, speed, and reliability despite solid reasoning steps.

Claude Sonnet 4.5 powered through the whole build with cleaner planning and better tooling, just at a much higher cost.

Both models followed the workflow, but Claude handled ambiguity, testing, and end-to-end flow way more gracefully.

DeepSeek impressed with memory and tool usage, but required manual fixes and felt noticeably slower.

Overall: DeepSeek is great for cost-efficient tinkering, while Claude shines for dependable multi-step builds. Pick based on your use case and budget.

App Overview

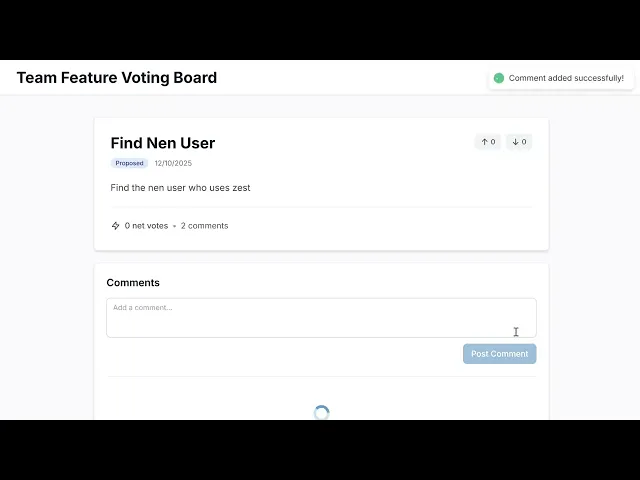

The app is a Team Feature Voting Board, which internal product teams can use to:

Submit feature ideas.

Upvote/downvote features.

Comment on features.

While the board will:

Show vote counts, status, and basic metadata.

Filter by status (e.g. “Backlog”, “In Progress”, “Shipped”).

Prevent duplicate voting by the same user on the same feature.
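To make the voting rules above concrete, here is a minimal in-memory sketch of duplicate-vote prevention and status filtering. It is not the code either model generated. The names `Feature`, `cast_vote`, `DuplicateVoteError`, and `filter_by_status` are hypothetical, and in a Supabase-backed app the same rule would more likely be enforced in the database (for example, with a uniqueness rule on the user and feature pair), which this post does not show.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """Hypothetical feature record; field names are assumptions."""
    id: int
    title: str
    status: str = "Backlog"  # e.g. "Backlog", "In Progress", "Shipped"
    # Maps user_id -> vote value (+1 for an upvote, -1 for a downvote).
    votes: dict[str, int] = field(default_factory=dict)

    @property
    def score(self) -> int:
        """Net vote count shown on the board."""
        return sum(self.votes.values())


class DuplicateVoteError(Exception):
    """Raised when a user tries to vote twice on the same feature."""


def cast_vote(feature: Feature, user_id: str, value: int) -> None:
    """Record one vote per user per feature; reject repeats."""
    if value not in (1, -1):
        raise ValueError("value must be +1 or -1")
    if user_id in feature.votes:
        raise DuplicateVoteError(
            f"{user_id} already voted on feature {feature.id}"
        )
    feature.votes[user_id] = value


def filter_by_status(features: list[Feature], status: str) -> list[Feature]:
    """Return only the features with the given status."""
    return [f for f in features if f.status == status]
```

Keying votes by user ID makes the duplicate check a single dictionary lookup; a real app would also need to decide whether a user may change an existing vote, which this sketch simply rejects.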

For this, I will use Rube MCP as a provider, as it unlocks access to 500+ tools with one-click setup + automated tool selection, planning and execution.

For Claude Sonnet 4.5, I will use Claude Code; for DeepSeek V3.2, I will use OpenCode with OpenRouter as the model provider.

Testing Methodology

I used Claude Sonnet 4.5 with Claude Code and DeepSeek V3.2 Speciale with OpenCode.

I started from the basic flow:

Setting up app overview.

Writing the project brief + scope.

Creating Linear epic + tickets.

Designing UI frames in Figma.

Defining Supabase schema + migrations.

Designing API endpoints + interactions.

Planning frontend architecture + components.

Implementing backend logic (votes, comments, features).

Building frontend UI screens + state handling.

Creating a GitHub commit + PR.

For this, I wrote an instruction-based prompt and kept it in prompt.txt at the project level.

For both terminals, I also added a terminal prompt that gives an overview of the project.

Terminal Prompt (same for both)

You can check out the prompt I wrote in prompt.txt.

Results

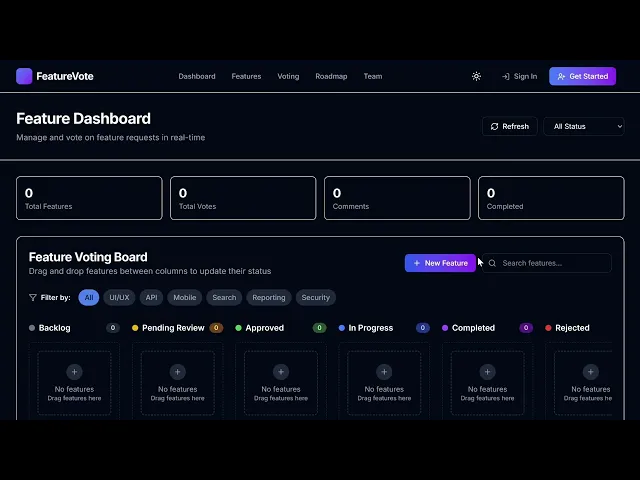

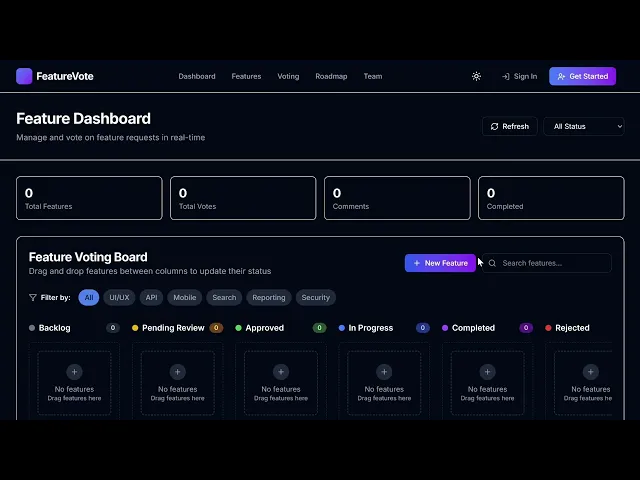

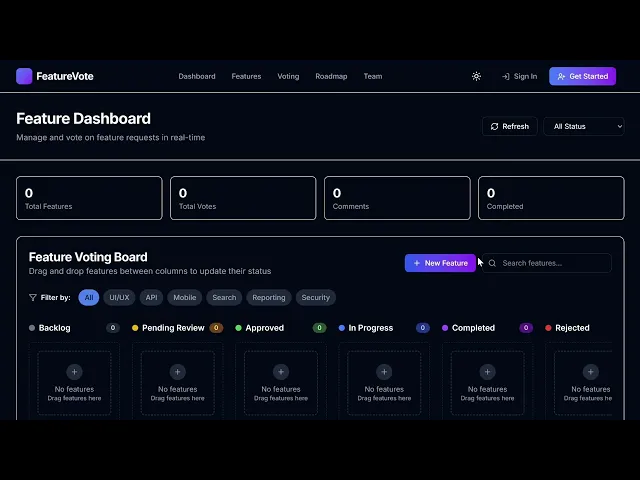

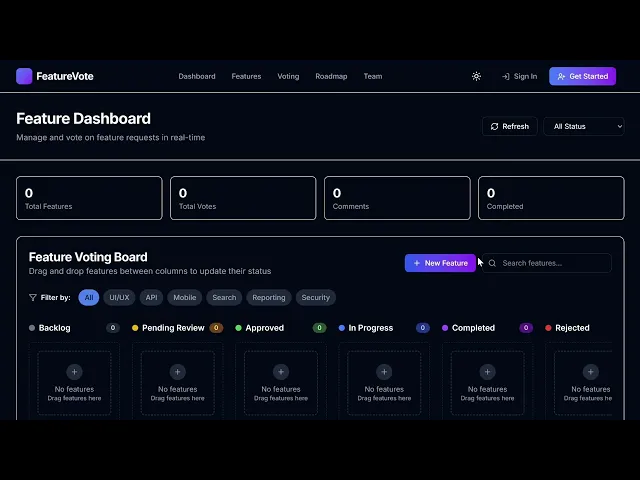

Here is the output of both!

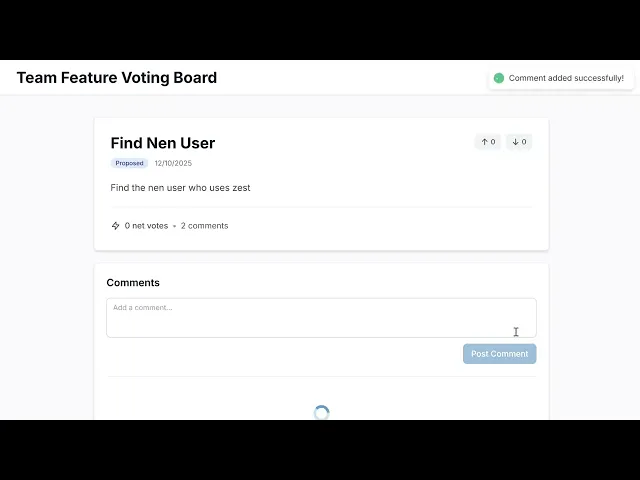

DeepSeek V3.2

DeepSeek V3.2 built only a partially functional app, even with the Figma file I provided as a reference, and much of the functionality was broken. For reference, I had to manually fix the partial code for DB and RPA and update the Next.js and TypeScript config files.

It followed this flow:

Went step by step.

Fetched all the relevant tools using rube_mcp.

Remembered DB URLs, RLS rules, and even the DB ID itself.

Created the backend and frontend with multiple ask prompts.

Broke the high-level task into a to-do list and went through it one by one.

Was relatively slow compared to Claude Sonnet 4.5 and made no commits.

Overall, it did the work partially, and speed is a concern:

Cost: ~$2

Tokens: ~8.4M with auto-compact.

Time: ~1.45 hours, with additional prompt guidance.

Quality: Mid-level, partially functional.

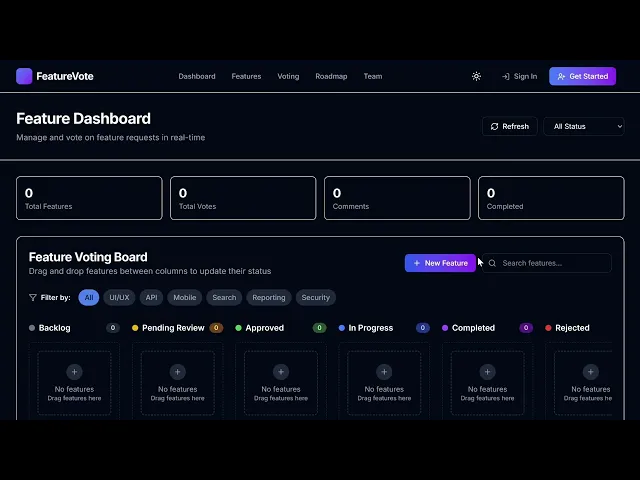

Claude Sonnet 4.5

Claude Code built a fully functional app with a simple UI (the Figma reference URL failed to fetch), which worked fine. It followed this flow:

Planned its API calls to work around rate limits.

Understood that the provided URL was a Figma Make file, not a design file, and guided me step by step to find the right one.

Wrote a Python script internally to commit all the files in chunks.

Tried to fetch the Figma design files, failed, and then recreated them on its own.

Created the backend and the frontend in one shot.

Ran multiple tests after each new functionality, ensured it worked, then moved forward in the to-do list.

Got stuck on the final commit, so I had to do it manually.
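The post does not include the Python script Claude wrote to commit files in chunks, so the following is only a sketch of the general idea: split a long file list into fixed-size batches and plan one commit per batch. The function names `chunked` and `plan_commits`, the batch size, and the commit message format are all assumptions, and the sketch deliberately stops short of calling any Git or GitHub API.

```python
from typing import Iterator, Sequence


def chunked(paths: Sequence[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive batches of at most `size` paths."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(paths), size):
        yield list(paths[start:start + size])


def plan_commits(paths: Sequence[str], size: int) -> list[dict]:
    """Build one hypothetical commit plan entry per batch of files."""
    batches = list(chunked(paths, size))
    total = len(batches)
    return [
        {"message": f"Add files (part {i}/{total})", "files": batch}
        for i, batch in enumerate(batches, start=1)
    ]


if __name__ == "__main__":
    # Hypothetical file list; a real script would walk the project tree.
    files = ["a.ts", "b.ts", "c.ts", "d.ts", "e.ts"]
    for commit in plan_commits(files, size=2):
        print(commit["message"], commit["files"])
```

Smaller batches keep each request small, which is one plausible reason an agent would chunk commits when pushing through a tool API rather than a local Git client.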

Overall, it did the work well, but cost is a concern:

Cost: $10

Tokens: ~14M with auto-compact enabled.

Time: ~50 minutes, one shot with multiple ask prompts.

Quality: Mid-level but fully functional, considering it generated the frontend itself using the Figma design schema.
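For a rough sense of how the two runs compare per token, the reported totals can be turned into an effective rate. This is back-of-the-envelope arithmetic on the approximate figures above (~$2 for ~8.4M tokens and $10 for ~14M tokens); it blends input, output, and cached tokens, so it is not either provider's list price. The helper name `cost_per_million` is made up for this sketch.

```python
def cost_per_million(cost_usd: float, tokens_millions: float) -> float:
    """Effective blended cost in USD per 1M tokens for a whole run."""
    return cost_usd / tokens_millions


# (total cost in USD, total tokens in millions), as reported in this post.
runs = {
    "DeepSeek V3.2": (2.0, 8.4),
    "Claude Sonnet 4.5": (10.0, 14.0),
}

for name, (cost, tokens) in runs.items():
    rate = cost_per_million(cost, tokens)
    print(f"{name}: ~${rate:.2f} per 1M tokens")
```

On these numbers, the Claude run cost about 5x as much in total and roughly 3x as much per token, while using about 1.7x as many tokens.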

Final Thoughts

DeepSeek V3.2 and Claude Sonnet 4.5 are both general LLMs optimised for reasoning, coding, and tool/agent use, but their usage differs:

DeepSeek V3.2 - use when you need cost-efficient reasoning and open-source flexibility; usually for personal projects.

Sonnet 4.5 - use when you want stable multi-step planning, stronger execution, and enterprise-ready workflows, and are willing to pay for them.

But the best bet would be to combine both of them for your use case.

