Claude 3.5: Function Calling and Tool Use

This post covers the following topics:

- What is function calling in LLMs?

- Function calling in Claude models.

- Function calling format in Claude (both request and response)

- Real-world examples of building agents using Claude function calling and Composio.

Introduction to Claude 3.5 Function Calling

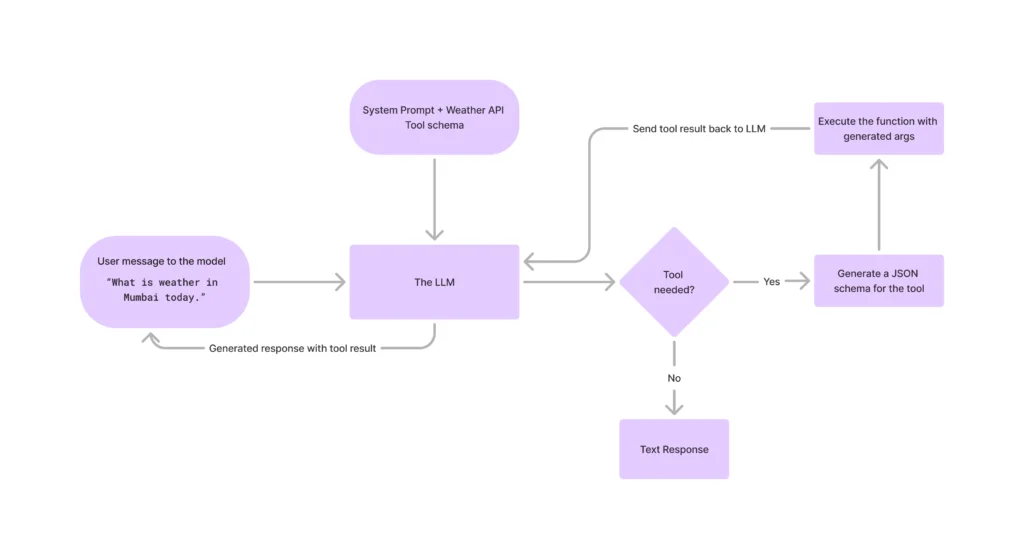

Large Language Models cannot access external information on their own, and function calling is one way to give them that access. It enables LLMs to interact with external apps and services: you wrap an API call inside a function and have the LLM request it. This way, you can automate real-world tasks like sending emails, updating Sheets, scheduling events, searching the web, and more.

Despite the name, LLMs don’t directly call functions themselves. Instead, when they determine that a request requires a tool, they generate a structured request specifying the appropriate tool and the necessary parameters.

For instance, assume an LLM has access to an internet browsing tool that accepts a text parameter. When a user asks it to fetch information about a recent event, the LLM, instead of generating text, produces a JSON object that names the browsing tool and supplies its arguments. Your application then uses that object to call the actual function/tool.
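To make that handoff concrete, here is a minimal sketch. The `search_web` function, the registry, and the JSON shape are all illustrative assumptions rather than any provider's exact format: the model emits a structured request, and your code looks up and runs the matching function.

```python
import json

# Hypothetical tool: a stand-in for a real web search API call.
def search_web(text: str) -> str:
    return f"Top results for: {text}"

# Registry mapping tool names to the Python callables that implement them.
TOOL_REGISTRY = {"search_web": search_web}

def dispatch(tool_call_json: str) -> str:
    """Parse the model's structured request and run the matching function."""
    call = json.loads(tool_call_json)
    func = TOOL_REGISTRY[call["name"]]
    return func(**call["arguments"])

# What a model might emit instead of plain text (shape assumed for illustration).
model_output = '{"name": "search_web", "arguments": {"text": "latest Mars rover news"}}'
print(dispatch(model_output))
```

The important point is that the model only produces the JSON; the function call itself always happens in your code.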

Here is a schematic diagram that showcases how tool calling works.

Let us now understand how Claude’s function calling works.

How Claude Function Calling Works

As established, Claude doesn’t execute external code directly. Instead, it participates in a multi-step conversation where it signals its intent to use a predefined tool, waits for the result, and then formulates a final response based on that result. This interaction relies on specific structures within the Anthropic Messages API.

Here’s a breakdown of the typical workflow:

- User Request with Tools: You send a request to the Claude Messages API. This request includes the user’s query and a list of available tools (functions) that Claude can potentially use. Each tool is defined with a name, description, and input schema.

- Claude Identifies Tool Need: Claude processes the user’s query and the available tool descriptions. If it determines that one of the tools can help fulfill the request, it doesn’t generate a final text answer. Instead, its response indicates which tool it wants to use and what inputs to provide to that tool.

- Application Executes Tool: Your application receives Claude’s response. Seeing that Claude wants to use a tool (indicated by a specific stop_reason), your code parses the tool name and input parameters. You then execute the corresponding function or API call in your own environment (e.g., call a weather API, query a database, access a booking system).

- Send Tool Result Back: Once your tool/function execution is complete (successfully or with an error), you send another request to the Claude Messages API. This request includes the original conversation history plus a new message containing the result (or error message) obtained from executing the tool.

- Claude Generates Final Response: Claude receives the tool’s result. It now has the necessary external information. It processes this result in the context of the original query and generates a final, natural language response for the end-user.
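The five steps above can be sketched as a small loop. To keep the example runnable offline, `fake_model` is a hypothetical stand-in for the Messages API and `get_weather` returns a canned answer; only the dictionary shapes mirror the real request and response formats described later in this post.

```python
def fake_model(messages, tools):
    """Stand-in for the Messages API: asks for a tool once, then answers."""
    last = messages[-1]
    if isinstance(last["content"], list) and last["content"][0]["type"] == "tool_result":
        result = last["content"][0]["content"]
        return {"stop_reason": "end_turn",
                "content": [{"type": "text", "text": f"Here is what I found: {result}"}]}
    return {"stop_reason": "tool_use",
            "content": [{"type": "tool_use", "id": "toolu_demo", "name": "get_weather",
                         "input": {"location": "London, UK"}}]}

def get_weather(location):
    # Hypothetical tool implementation with a canned answer.
    return f"15°C and mostly cloudy in {location}"

def run_conversation(user_query, tools, handlers, model=fake_model):
    messages = [{"role": "user", "content": user_query}]            # step 1
    response = model(messages, tools)
    while response["stop_reason"] == "tool_use":                     # step 2
        messages.append({"role": "assistant", "content": response["content"]})
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":
                output = handlers[block["name"]](**block["input"])   # step 3
                results.append({"type": "tool_result",
                                "tool_use_id": block["id"],
                                "content": output})
        messages.append({"role": "user", "content": results})        # step 4
        response = model(messages, tools)
    return response["content"][0]["text"]                            # step 5

tools = [{"name": "get_weather", "description": "Get current weather.",
          "input_schema": {"type": "object",
                           "properties": {"location": {"type": "string"}},
                           "required": ["location"]}}]
answer = run_conversation("What's the weather like in London?", tools,
                          {"get_weather": get_weather})
print(answer)
```

Swapping `fake_model` for a function that calls the real API would leave the loop itself unchanged.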

Let’s look at the API specifics for each step.

Function Calling Format in Claude (API Details)

Claude’s function calling capabilities are accessed via the standard Messages API (/v1/messages). The key is how you structure the messages array and use the tools parameter.

1. Initial Request: User Query + Tool Definitions

You initiate the process by sending a POST request to the /v1/messages endpoint. You provide the user’s message and define the tools Claude can use via the top-level tools parameter.

Request Format:

POST /v1/messages

{

"model": "claude-3-5-sonnet-20240620", // Or another supported model

"max_tokens": 1024,

"messages": [

{

"role": "user",

"content": "What's the weather like in London?"

}

],

"tools": [

{

"name": "get_weather",

"description": "Get the current weather conditions for a specific location.",

"input_schema": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state/country, e.g., San Francisco, CA or London, UK"

}

},

"required": ["location"]

}

},

{

"name": "get_stock_price",

"description": "Get the current stock price for a given ticker symbol.",

"input_schema": {

"type": "object",

"properties": {

"ticker_symbol": {

"type": "string",

"description": "The stock ticker symbol, e.g., GOOGL, AMZN."

}

},

"required": ["ticker_symbol"]

}

}

// Add more tools here if needed

]

}

- model: Specify a Claude model that supports function calling (like Claude 3.5 Sonnet, Claude 3 Opus, etc.).

- messages: The standard conversation history. Starts with the user’s query.

- tools: A list of available tools. Each tool object has:

- name: A unique identifier for the tool.

- description: A clear explanation of what the tool does. This is crucial for Claude to understand when to use it.

- input_schema: A JSON Schema object defining the parameters the tool accepts. This helps Claude generate the correct input structure.
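If you define many tools, a small helper can keep the three fields consistent. The `make_tool` function below is a hypothetical convenience, not part of the Anthropic SDK; it simply assembles the dictionary shape shown above and catches one common mistake.

```python
def make_tool(name, description, properties, required):
    """Assemble a tool definition with name, description, and input_schema."""
    missing = [key for key in required if key not in properties]
    if missing:
        raise ValueError(f"required parameters not in properties: {missing}")
    return {
        "name": name,
        "description": description,
        "input_schema": {
            "type": "object",
            "properties": properties,
            "required": list(required),
        },
    }

weather_tool = make_tool(
    name="get_weather",
    description="Get the current weather conditions for a specific location.",
    properties={
        "location": {
            "type": "string",
            "description": "The city and state/country, e.g., London, UK",
        }
    },
    required=["location"],
)
print(weather_tool["input_schema"]["required"])
```

The resulting dictionary can be placed directly in the `tools` list of a request.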

2. Claude’s Response: Requesting Tool Use

If Claude decides to use a tool, the API response will not contain the final answer. Instead, it will have a stop_reason of tool_use and the content block will specify the tool call details.

Response Format (When Claude requests a tool):

{

"id": "msg_12345",

"type": "message",

"role": "assistant",

"model": "claude-3-5-sonnet-20240620",

"content": [

{

"type": "tool_use",

"id": "toolu_abc123", // Unique ID for this specific tool use request

"name": "get_weather",

"input": {

"location": "London, UK"

}

}

// There might be multiple tool_use blocks if Claude wants to call several tools

],

"stop_reason": "tool_use",

"stop_sequence": null,

"usage": {

"input_tokens": 50,

"output_tokens": 35

}

}

- stop_reason: “tool_use”: This signals that Claude is pausing to wait for tool results.

- content: An array containing one or more blocks of type: “tool_use”.

- tool_use block:

- type: Always “tool_use”.

- id: A unique identifier generated by Anthropic for this specific tool use instance. You’ll need this ID when you send the result back.

- name: The name of the tool Claude wants to use (matching one from your request).

- input: A JSON object containing the parameters Claude generated based on the input_schema and the user query. Your application should use these parameters to execute the tool.
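As a rough sketch, pulling the tool calls out of a raw JSON response could look like this. The `extract_tool_calls` helper is a name chosen for this example, and the sample response includes a leading text block as an assumption, to show that the helper ignores anything that is not a `tool_use` block.

```python
def extract_tool_calls(response: dict) -> list:
    """Return (id, name, input) for every tool_use block in a response."""
    if response.get("stop_reason") != "tool_use":
        return []
    return [
        (block["id"], block["name"], block["input"])
        for block in response["content"]
        if block["type"] == "tool_use"
    ]

# Sample response shaped like the one above (values are illustrative).
sample_response = {
    "role": "assistant",
    "content": [
        {"type": "text", "text": "Let me check the weather."},
        {"type": "tool_use", "id": "toolu_abc123", "name": "get_weather",
         "input": {"location": "London, UK"}},
    ],
    "stop_reason": "tool_use",
}

for tool_use_id, name, tool_input in extract_tool_calls(sample_response):
    print(tool_use_id, name, tool_input)
```

Keeping the `id` alongside each call matters because it is needed again when the result is sent back.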

3. Subsequent Request: Providing Tool Result

After your application executes the tool (e.g., calls the get_weather function/API with location: “London, UK”), you send the result back to Claude in a new API call. You must include the entire conversation history up to this point, plus a new message with role: “user” containing the tool result.

Request Format (Sending tool result back):

POST /v1/messages

{

"model": "claude-3-5-sonnet-20240620",

"max_tokens": 1024,

"messages": [

// --- Previous conversation history ---

{

"role": "user",

"content": "What's the weather like in London?"

},

{

"role": "assistant",

"content": [

{

"type": "tool_use",

"id": "toolu_abc123", // The ID from Claude's previous response

"name": "get_weather",

"input": {

"location": "London, UK"

}

}

]

},

// --- New message containing the tool result ---

{

"role": "user", // MUST be role: user for tool results

"content": [

{

"type": "tool_result",

"tool_use_id": "toolu_abc123", // Match the ID of the tool use request

"content": "The weather in London, UK is currently 15°C and mostly cloudy.",

// Or you can provide a list of content blocks, with structured data serialized as a JSON string:

// "content": [{"type": "text", "text": "{\"temperature\": 15, \"unit\": \"C\", \"condition\": \"Mostly Cloudy\"}"}]

// Optionally include if an error occurred:

// "is_error": true,

// "content": "API request failed with status 500"

}

]

}

],

"tools": [

// You might need to include the tool definitions again,

// depending on the specific SDK/library or if you anticipate further tool calls.

// Best practice is often to include them.

{

"name": "get_weather", /* ...schema... */ },

{

"name": "get_stock_price", /* ...schema... */ }

]

}

- messages: Append the history. Crucially, add a new message object:

- role: Must be “user”. Tool results are provided back to Claude as if they are user input for the next turn.

- content: An array containing one or more blocks of type: “tool_result”.

- tool_result block:

- type: Always “tool_result”.

- tool_use_id: The unique id from the corresponding tool_use block in Claude’s previous response. This links the result to the specific request.

- content: The output from your tool execution. This can be a simple string or a list of content blocks (for example [{"type": "text", "text": "…"}]). To return structured data, serialize it to a JSON string. Provide the most relevant information.

- is_error (optional): Set to true if the tool execution failed. The content should then describe the error.
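A small helper can build this message for both the success and the failure case. This is a sketch: `build_tool_result_message` is a hypothetical name, and serializing non-string output with `json.dumps` is a choice made for this example rather than a requirement of the API.

```python
import json

def build_tool_result_message(tool_use_id, output=None, error=None):
    """Wrap a tool's output (or error) in a user message for the next request."""
    block = {"type": "tool_result", "tool_use_id": tool_use_id}
    if error is not None:
        # Failure: describe the error and flag it so the model can react.
        block["content"] = str(error)
        block["is_error"] = True
    elif isinstance(output, str):
        block["content"] = output
    else:
        # Structured output is sent as a JSON string in this sketch.
        block["content"] = json.dumps(output)
    return {"role": "user", "content": [block]}

ok = build_tool_result_message("toolu_abc123", output={"temperature": 15})
failed = build_tool_result_message("toolu_abc123",
                                   error="API request failed with status 500")
print(ok)
print(failed)
```

Either message is appended to the conversation history after the assistant message that contained the matching `tool_use` block.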

4. Claude’s Final Response: Using the Tool Result

Claude processes the tool_result within the context of the conversation and generates the final answer for the end user.

Response Format (Final answer after tool use):

{

"id": "msg_67890",

"type": "message",

"role": "assistant",

"model": "claude-3-5-sonnet-20240620",

"content": [

{

"type": "text",

"text": "Okay, I found the weather for you. It's currently 15°C and mostly cloudy in London, UK."

}

],

"stop_reason": "end_turn", // Or potentially "max_tokens"

"stop_sequence": null,

"usage": {

"input_tokens": 110, // Includes history + tool result

"output_tokens": 25

}

}

- stop_reason: “end_turn”: Indicates Claude has finished its turn and provided a complete response.

- content: An array typically containing a single block of type: “text” with the final, user-facing answer.
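Reading the final answer from a raw JSON response might be sketched as follows. The `final_text` helper and its truncation marker are assumptions for illustration; the point is to join the text blocks and to notice when the response stopped because of `max_tokens` instead of `end_turn`.

```python
def final_text(response: dict) -> str:
    """Join the text blocks of a response and flag truncated output."""
    text = "".join(
        block["text"] for block in response["content"] if block["type"] == "text"
    )
    if response.get("stop_reason") == "max_tokens":
        text += " [truncated: max_tokens reached]"
    return text

final_response = {
    "content": [{"type": "text",
                 "text": "It's currently 15°C and mostly cloudy in London, UK."}],
    "stop_reason": "end_turn",
}
print(final_text(final_response))
```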

This multi-step process allows Claude to leverage external capabilities securely and effectively, coordinated by your application logic. The clear API structure makes it relatively straightforward to integrate this powerful feature.

Building a simple Calculator App

Start by installing the Anthropic SDK.

pip install anthropic

from anthropic import Anthropic

client = Anthropic()

MODEL_NAME = "claude-3-opus-20240229"

Define a simple Calculator function.

import re

def calculate(expression):
    # Remove any non-digit or non-operator characters from the expression
    expression = re.sub(r'[^0-9+\-*/().]', '', expression)
    try:
        # Evaluate the expression using the built-in eval() function
        result = eval(expression)
        return str(result)
    except (SyntaxError, ZeroDivisionError, NameError, TypeError, OverflowError):
        return "Error: Invalid expression"

tools = [
    {
        "name": "calculator",
        "description": "A simple calculator that performs basic arithmetic operations.",
        "input_schema": {
            "type": "object",
            "properties": {
                "expression": {
                    "type": "string",
                    "description": "The mathematical expression to evaluate (e.g., '2 + 3 * 4')."
                }
            },
            "required": ["expression"]
        }
    }
]

In this example, we define a calculate function that takes a mathematical expression as input, removes any non-digit or non-operator characters using a regular expression, and then evaluates the expression using the built-in eval() function. If the evaluation is successful, the result is returned as a string. If an error occurs during evaluation, an error message is returned.
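Because the expression comes from a model, you may prefer not to rely on eval() at all. The regular expression still lets through inputs such as 9**9**9**9, which could take a very long time to evaluate. As an alternative, and not the approach used in the rest of this post, here is a sketch that walks the parsed expression with Python's `ast` module and allows only the four basic operators. The name `safe_calculate` is made up for this example.

```python
import ast
import operator

# Only these operators are permitted; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

def _evaluate(node):
    if (isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("unsupported expression")

def safe_calculate(expression: str) -> str:
    """Evaluate +, -, *, / arithmetic without calling eval()."""
    try:
        # Drop thousands separators such as "1,984,135" before parsing.
        tree = ast.parse(expression.replace(",", "").strip(), mode="eval")
        return str(_evaluate(tree.body))
    except (SyntaxError, ValueError, ZeroDivisionError, OverflowError):
        return "Error: Invalid expression"

print(safe_calculate("(12851 - 593) * 301 + 76"))
print(safe_calculate("2 ** 8"))
```

Function calls, names, and exponentiation are all rejected, so this version trades a little flexibility for a much smaller attack surface.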

Now, interact with Claude.

def process_tool_call(tool_name, tool_input):
    if tool_name == "calculator":
        return calculate(tool_input["expression"])

def chat_with_claude(user_message):
    print(f"\n{'='*50}\nUser Message: {user_message}\n{'='*50}")

    message = client.messages.create(
        model=MODEL_NAME,
        max_tokens=4096,
        messages=[{"role": "user", "content": user_message}],
        tools=tools,
    )

    print(f"\nInitial Response:")
    print(f"Stop Reason: {message.stop_reason}")
    print(f"Content: {message.content}")

    if message.stop_reason == "tool_use":
        tool_use = next(block for block in message.content if block.type == "tool_use")
        tool_name = tool_use.name
        tool_input = tool_use.input

        print(f"\nTool Used: {tool_name}")
        print(f"Tool Input: {tool_input}")

        tool_result = process_tool_call(tool_name, tool_input)

        print(f"Tool Result: {tool_result}")

        response = client.messages.create(
            model=MODEL_NAME,
            max_tokens=4096,
            messages=[
                {"role": "user", "content": user_message},
                {"role": "assistant", "content": message.content},
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "tool_result",
                            "tool_use_id": tool_use.id,
                            "content": tool_result,
                        }
                    ],
                },
            ],
            tools=tools,
        )
    else:
        response = message

    final_response = next(
        (block.text for block in response.content if hasattr(block, "text")),
        None,
    )
    print(response.content)
    print(f"\nFinal Response: {final_response}")

    return final_response

Finally, try it out:

chat_with_claude("What is the result of 1,984,135 * 9,343,116?")

chat_with_claude("Calculate (12851 - 593) * 301 + 76")

chat_with_claude("What is 15910385 divided by 193053?")

Once you run this function, you will receive the response from Claude.

OpenAI SDK Compatibility

You can also use Claude models through the OpenAI SDK. This is helpful if you already use the OpenAI SDK and want to minimise development time: you only have to update the API key, base URL, and model name.

from openai import OpenAI

client = OpenAI(

api_key="ANTHROPIC_API_KEY", # Your Anthropic API key

base_url="https://api.anthropic.com/v1/" # Anthropic's API endpoint

)

response = client.chat.completions.create(

model="claude-3-7-sonnet-20250219", # Anthropic model name

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Who are you?"}

],

)

print(response.choices[0].message.content)

Here’s how to use a weather tool with the OpenAI SDK.

from openai import OpenAI

client = OpenAI(
    api_key="ANTHROPIC_API_KEY",  # Your Anthropic API key
    base_url="https://api.anthropic.com/v1/",  # Anthropic's API endpoint
)

tools = [{

"type": "function",

"function": {

"name": "get_weather",

"description": "Get current temperature for a given location.",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "City and country e.g. Bogotá, Colombia"

}

},

"required": [

"location"

],

"additionalProperties": False

},

"strict": True

}

}]

completion = client.chat.completions.create(

model="claude-3-7-sonnet-20250219",

messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],

tools=tools

)

print(completion.choices[0].message.tool_calls)

Advanced use cases with Composio

Install Composio

pip install composio-openai openai

Import Libraries & Initialize ComposioToolSet & LLM

from openai import OpenAI

from composio_openai import ComposioToolSet, App, Action

openai_client = OpenAI()

composio_toolset = ComposioToolSet()

Connect Your GitHub Account

You need to have an active GitHub Integration. Learn how to do this here

request = composio_toolset.initiate_connection(app=App.GITHUB)

print(f"Open this URL to authenticate: {request.redirectUrl}")

Don’t forget to set your COMPOSIO_API_KEY and OPENAI_API_KEY in your environment variables.

Get All GitHub Tools

You can get all the tools for a given app as shown below. You can also fetch specific actions and filter actions by use case and tags. Learn more here

tools = composio_toolset.get_tools(apps=[App.GITHUB])

Define the Assistant

assistant_instruction = "You are a super intelligent personal assistant"

assistant = openai_client.beta.assistants.create(

name="Personal Assistant",

instructions=assistant_instruction,

model="gpt-4-turbo-preview",

tools=tools,

)

thread = openai_client.beta.threads.create()

my_task = "Star a repo composiohq/composio on GitHub"

message = openai_client.beta.threads.messages.create(thread_id=thread.id,role="user",content=my_task)

run = openai_client.beta.threads.runs.create(thread_id=thread.id,assistant_id=assistant.id)

response_after_tool_calls = composio_toolset.wait_and_handle_assistant_tool_calls(

client=openai_client,

run=run,

thread=thread,

)

Execute the Agent

print(response_after_tool_calls)

This is a demonstration of how you can accomplish complex automation for real-world tasks.

Improving Function Calling Performance: Tips and Best Practices

While function calling is incredibly powerful, getting LLMs like Claude to reliably use tools in the intended way often requires careful design and iteration. If you find Claude isn’t calling functions when expected, calls the wrong function, or provides incorrect parameters, here are several strategies to improve performance:

- 1. Optimize Tool Definitions: This is often the most crucial area for improvement.

- Clear and Specific Descriptions: The description field is vital. Clearly explain exactly what the tool does, when it should be used, and potentially when it shouldn’t be used. Use keywords the LLM might associate with the task. Include simple examples within the description if appropriate (e.g., “Use this for currency conversion like ‘convert 100 USD to EUR’”).

- Descriptive Naming: Use intuitive and distinct names (name) for your tools. Avoid overly generic names if multiple tools perform similar tasks.

- Precise Parameter Schemas (input_schema):

- Parameter Descriptions: Clearly describe each parameter within the properties of the input_schema. Explain what the parameter represents and the expected format (e.g., “The city name, optionally including state or country like ‘London, UK’ or ‘Paris’”).

- Use Specific Types: Define type correctly (e.g., string, number, integer, boolean).

- Leverage enum: If a parameter can only accept a fixed set of values (e.g., units like “C” or “F”, categories like “urgent” or “normal”), use the enum keyword within the parameter’s schema. This strongly guides the model to provide valid inputs.

- Mark Required Parameters: Clearly define which parameters are essential using the required array.

- Granularity: Consider breaking down complex tools into smaller, more focused ones. A single tool trying to do too many things can confuse the model. For instance, instead of one database_manager tool, have separate get_user_details, update_order_status, and add_new_product tools.

- 2. Refine Prompting Strategies:

- Clear User Queries: Ensure the user’s request is unambiguous. Sometimes rephrasing the user’s input can make the need for a specific tool much clearer to the model.

- System Prompts: Use system prompts to provide high-level instructions or context about the available tools and how the LLM should behave. You can remind it to use tools when necessary for specific types of information or actions.

- 3. Handle Tool Execution and Results Effectively:

- Robust Error Handling (Your Code): Ensure your actual tool execution code (e.g., your Python calculate or get_weather function) handles potential errors gracefully (like network issues, invalid inputs it receives, division by zero, etc.).

- Informative Error Feedback (To LLM): When your tool does encounter an error, send a clear and informative error message back to Claude within the content of the tool_result block. For Anthropic, also set “is_error”: true. This helps Claude understand why the tool failed and potentially try again or inform the user correctly. Don’t just return a generic “Failed”. Instead, return something like “Error: Location ‘Atlantis’ not found in weather database.”

- Validate LLM Inputs: Before executing a tool based on Claude’s request, consider adding a validation layer in your application to check if the parameters provided by the LLM seem reasonable and match the expected schema. This prevents wasted API calls or unexpected behavior in your tools.

- Format Results Clearly: Provide the tool’s results (content in the tool_result) in a format that’s easy for the LLM to parse and understand. For simple results, a clear string is often sufficient. For complex data, returning structured JSON serialized as a string ("content": "{\"temp\": 15, \"unit\": \"C\"}") is generally more reliable than embedding complex information in a sentence.

- 4. Choose the Right Model: Newer and more capable models (like Claude 3.5 Sonnet, Claude 3 Opus, GPT-4o) generally have better instruction following and reasoning capabilities, leading to more reliable tool use compared to older or smaller models.

- 5. Iterate and Test:

- Log Interactions: Keep detailed logs of the entire function calling flow: the user query, the tools provided, Claude’s tool_use request (including parameters), your application’s tool execution result (or error), and Claude’s final response.

- Analyze Failures: When function calling doesn’t work as expected, review the logs to pinpoint the issue. Did Claude misunderstand the description? Was the schema unclear? Did your tool return a confusing result?

- Refine and Retest: Based on your analysis, refine the tool definitions, prompts, or result formatting, and test again with the problematic queries and variations. Function calling often requires this kind of iterative refinement.
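Several of these tips can be combined in a few lines. The sketch below defines a tool with an enum-constrained parameter, checks Claude's proposed input against the schema before running anything, and turns any problems into an informative error result. The validator is deliberately minimal and hand-rolled for this example; a full JSON Schema library such as `jsonschema` would cover far more cases.

```python
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city. Use only for present conditions.",
    "input_schema": {
        "type": "object",
        "properties": {
            "location": {"type": "string",
                         "description": "City name, e.g. 'London, UK' or 'Paris'"},
            "unit": {"type": "string", "enum": ["C", "F"],
                     "description": "Temperature unit"},
        },
        "required": ["location"],
    },
}

_JSON_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}

def validate_input(tool, tool_input):
    """Return a list of problems; an empty list means the input looks valid."""
    schema = tool["input_schema"]
    problems = [
        f"missing required parameter '{name}'"
        for name in schema.get("required", [])
        if name not in tool_input
    ]
    for name, value in tool_input.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown parameter '{name}'")
            continue
        expected = _JSON_TYPES.get(spec.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"parameter '{name}' should be of type {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            problems.append(f"parameter '{name}' must be one of {spec['enum']}")
    return problems

def error_result(tool_use_id, problems):
    # Informative feedback for the model, flagged with is_error.
    return {"type": "tool_result", "tool_use_id": tool_use_id,
            "is_error": True, "content": "Error: " + "; ".join(problems)}

problems = validate_input(WEATHER_TOOL, {"unit": "K"})
if problems:
    print(error_result("toolu_abc123", problems)["content"])
```

Logging the `problems` list alongside the original query gives you the raw material for the analysis step described above.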

Conclusion

Function calling can enable LLMs like Claude to achieve much more complex and practical tasks than generating text alone. By providing Claude with a well-defined set of tools, you bridge the gap between the model’s conversational intelligence and the vast capabilities of external APIs, databases, and custom code.

As we’ve explored, the process involves a structured conversation: defining tools with clear schemas, letting Claude identify when to use them and with what inputs, executing the corresponding function in your application environment, and feeding the results back to Claude for final synthesis. The Anthropic Messages API provides a clear mechanism for this interaction using the tools, tool_use, and tool_result structures.